Data Engineers, stop testing in production!

Adrian Brudaru,

Adrian Brudaru,

Co-Founder & CDO

1. Why did we move to ELT, and why is that a problem?

ETL Was Hard to Build – So We Went ELT

A decade ago, building data pipelines meant writing and maintaining Java or Python ETL jobs that ingested, transformed, and loaded data into MySQL, Postgres, or Vertica. It was slow, brittle, and required specialized engineering effort just to keep things running.

When MPP databases like Redshift and Snowflake made SQL performant at scale, ELT flipped the model. Instead of transforming data before loading, teams started dumping raw data into the warehouse and handling transformations in SQL.

This worked because it:

- Shifted ownership from backend engineers to analytics teams.

- Simplified pipeline development, making ingestion a basic copy operation.

- Made iteration cycles faster, you didn’t need a developer for every schema change.

It was the right move for the time. The problem isn’t that ELT was a mistake, it’s that the industry optimised for the wrong part of the workflow.

ELT Is Hard to Maintain – Time to Fix It

ELT made pipelines easy to build. It did not make them easy to operate.

As organisations scaled, data grew more complex, reliability requirements increased, and the hidden cost of ELT became obvious. The issue isn’t ingestion but management.

Right now, this is what ELT operations look like:

- No local development. Transformations run inside the warehouse, which means every test is a production run.

- No pre-load validation. You catch data issues after they break dashboards, not before.

- No fast feedback loop. Simple fixes require full query reruns, turning small changes into expensive operations.

At small scales, these trade-offs were tolerable. But with thousands of pipelines, thousands of transformations, and stricter governance needs, they’re now an operational bottleneck.

Instead of building better systems, engineers are debugging production failures, rerunning queries, and firefighting schema mismatches.

This isn’t a data volume problem. It’s a workflow problem.

ELT Didn’t scale Development practices, and it Breaks at Scale.

Software engineers have staging, CI/CD, and fast iteration cycles.

Data engineers run everything live and hope for the best.

If every pull request in software engineering required a production deployment to test, it’d be a joke. In ELT, that’s just normal.

The warehouse was never built to be a development environment. It’s an analytical backend, and forcing it to handle testing, validation, and iteration is why ELT breaks at scale.

We don’t need more orchestration, more monitoring, or more governance tools. We need a staging layer for data transformations.

2. The problem: ELT maintenance doesn’t scale

ELT was supposed to make data faster, cheaper, and easier to scale. Instead, it’s become a constant operational burden.

Why? Because ELT is vendor locked to online.

At a small scale, the workflow is manageable. When you have a few dozen transformations, you can afford to debug issues directly in the warehouse, rerun failed queries, and patch things manually.

At scale, that approach collapses.

- No isolation: Every change runs in production.

- No visibility: Pipelines fail unpredictably, and debugging means scanning logs or running expensive queries.

- No safety net: The only way to “test” is to rerun transformations on real data.

This isn’t just annoying, it’s expensive. Engineering teams spend more time maintaining pipelines than building new ones. Instead of focusing on optimising architectures, improving data quality, or designing better models, they’re stuck troubleshooting why a dbt run failed at step 92 of 103.

The root issue? Data teams don’t have a development environment.

In software, bad deployments get caught before they reach production.

In ELT, bad transformations get deployed first, then engineers clean up the mess.

We’ve Scaled Storage. We’ve Scaled Compute. But We Never Scaled Development.

The industry threw money at the wrong problem. Instead of fixing the workflow, we added:

- More observability to track failures (but not prevent them).

- More orchestration to retry jobs (but not validate inputs).

- More governance to define rules (but no way to enforce them before loading data).

These are all reactive solutions. They don’t change the fact that data teams are testing in production.

Software engineering has CI/CD pipelines, isolated test environments, and instant feedback loops.

Data engineering has dbt runs that lock the warehouse for 20 minutes and cost $500 per iteration.

This isn’t sustainable.

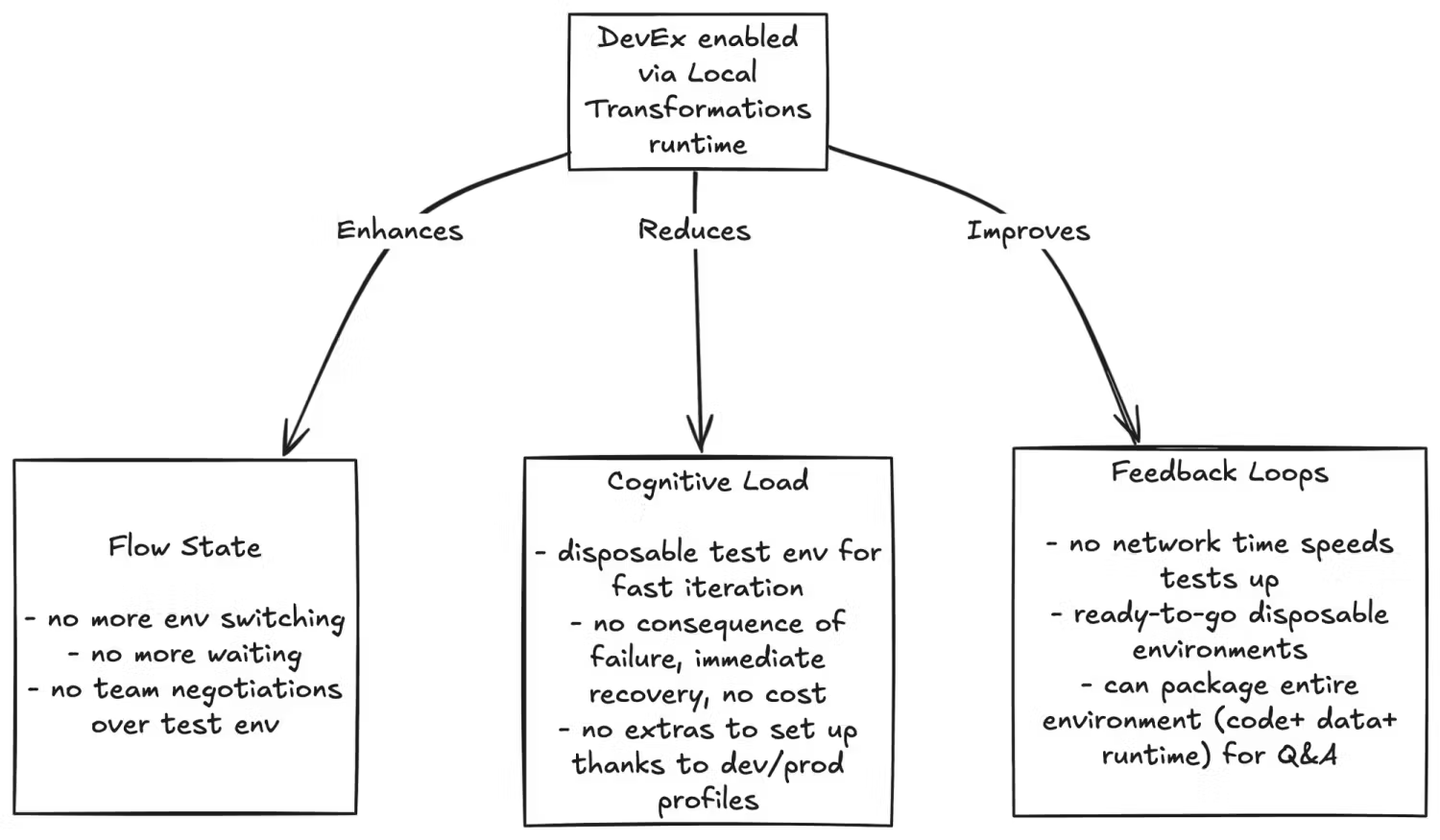

ELT Made Prototyping Easy. dlt+ Cache Makes It Production-Ready.

Data teams were promised a faster way to build pipelines. What they got was a system where every transformation runs in production, every schema change is a potential outage, and debugging means burning warehouse credits just to see what went wrong.

This isn’t sustainable. ELT doesn’t need more monitoring, more orchestration, or more governance tools, it needs a real development workflow.

Software engineers wouldn’t push code to production without testing. Data engineers shouldn’t have to either.

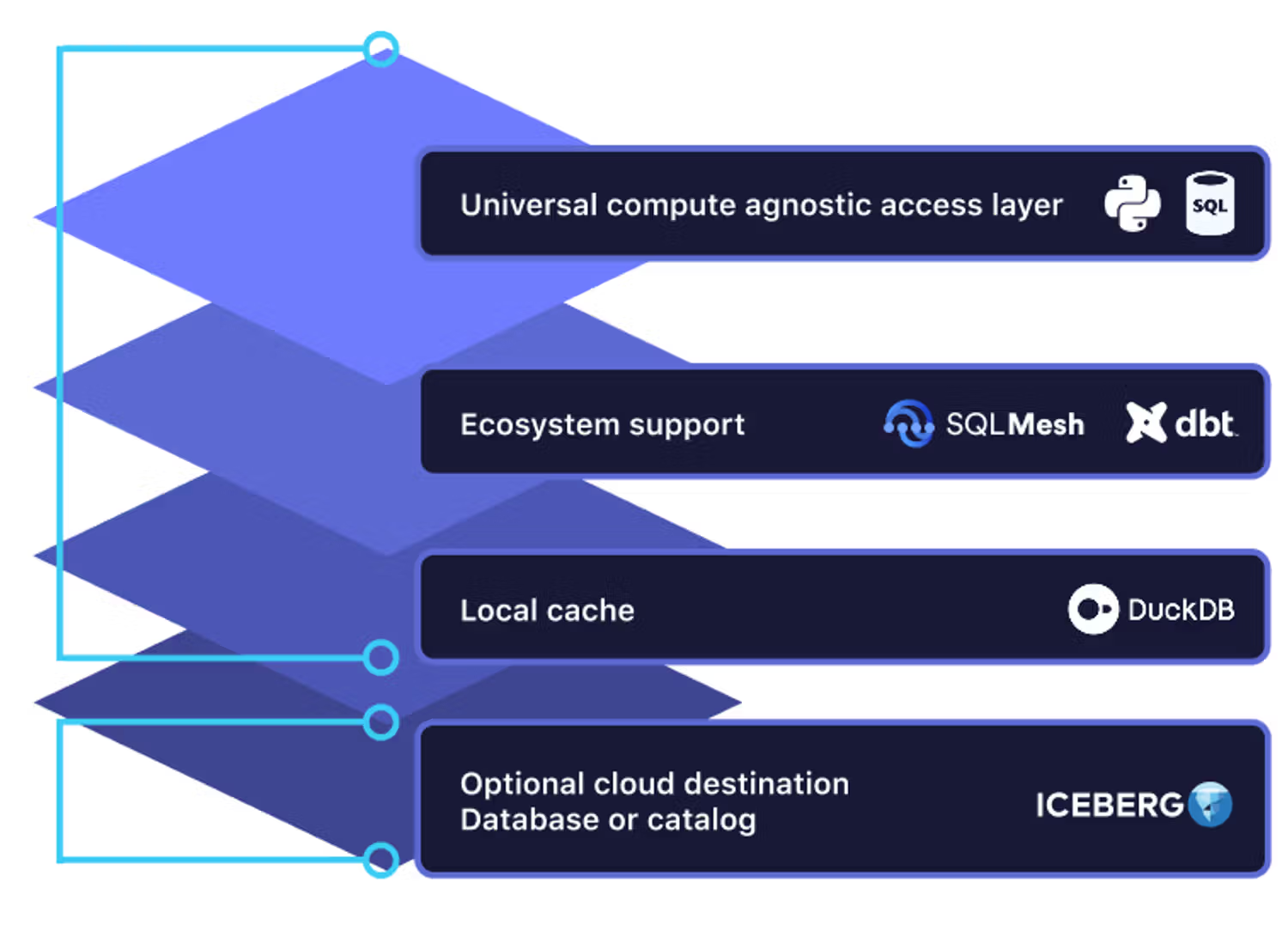

What is dlt+ Cache? → Read the blog

How does it work? → Docs for Cache concept, Setup

Goes great with → dlt-dbt scaffold generator (docs), blog