What is dlt+ Cache?

Adrian Brudaru,

Adrian Brudaru,

Co-Founder & CDO

The dlt+ Cache changes that.

This guide explains:

✔ What dlt+ Cache is and how it fits into modern data workflows.

✔ How it helps engineers test and validate transformations before deployment.

✔ How to get started with dlt+ Cache.

What is dlt+ Cache?

A portable compute layer for developing, testing, and validating transformations - before they hit production.

The problem we solve: Data engineers don’t have a proper development environment.

Software engineers don’t test code in production. Why do data engineers test transformations in the warehouse?

Right now, debugging a transformation means:

❌ Running queries at full scale, burning cloud credits.

❌ Waiting on slow warehouse execution just to check a basic fix.

❌ Getting pinged by an analyst while a 20-minute dbt run locks up the pipeline.

The problem isn’t the data. It’s the workflow.

“Do you know how much money it would cost us if every pull request had to load data into Snowflake and run tests? That’s insane.”

– Josh Wills

If you’ve ever waited 20 minutes for a dbt run, or rerun a failed job just to check a minor fix, this is for you.

“But I Have Staging At Home”

A staging warehouse is not a real testing environment. It’s just production with a softer cushion.

In software, developers don’t test by deploying to a second production server and hoping for the best. They have fast, isolated environments where they can break things safely, fix them quickly, and move on.

If your “staging” still costs significant money, runs at full scale, and takes forever to execute, it’s not really staging, it’s just a budget version of production.

A real testing environment should let you:

✅ Run transformations instantly, without waiting on warehouse execution.

✅ Validate schema changes before they cause failures.

✅ Test without paying for every rerun.

That’s what dlt+ Cache is for.

The Fix: dlt+ Cache

dlt+ Cache provides a staging layer for data transformations—a way to test, validate, and debug without running everything in the warehouse.

✔ Run transformations locally → No waiting for warehouse queries.

✔ Validate schema before loading → Catch mismatches early.

✔ Test without burning cloud costs → In-memory execution means no wasted compute.

What is dlt+ Cache?

dlt+ Cache is a complete testing and validation layer for data transformations, combining a local compute runtime with schema enforcement, debugging tools, and integration with existing data workflows.

How does it work?

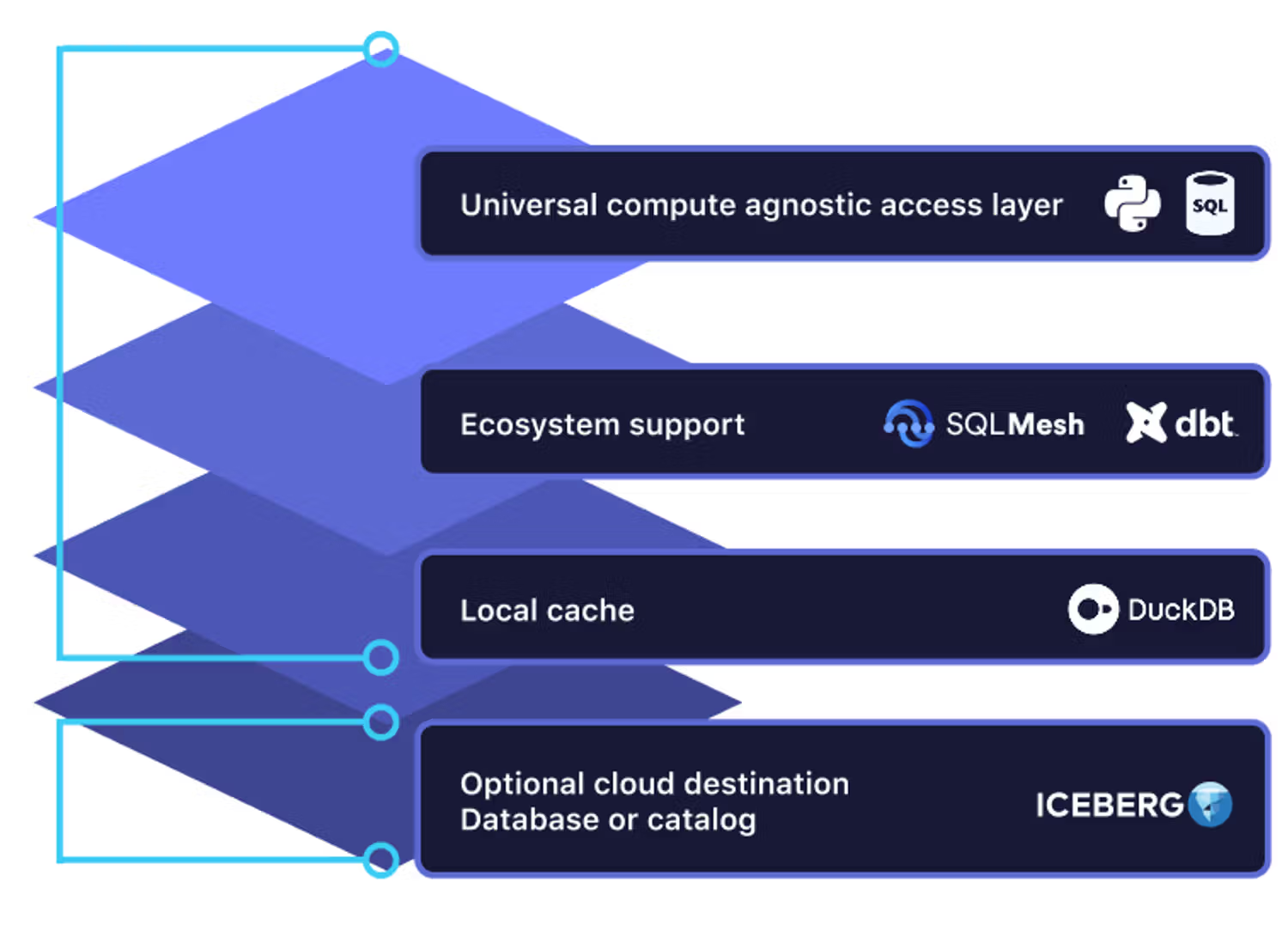

The dlt+ Cache is essentially a composable database, with an engine-agnostic interface. Around this, we build an ecosystem to enable using it for both development and production workflows.

Example dlt_project.yaml with the cache:

caches:

github_events_cache:

inputs:

- dataset: github_events_dataset

tables:

items: items

outputs:

- dataset: github_reports_dataset

tables:

items: items

items_aggregated: items_aggregatedAnatomy

- Universal access api: It accepts your code, be it python, standard sql, or various sql flavours like Duckdb, Postgres, Redshift, BigQuery, Snowflake, Clickhouse etc, and translates it to the dialect of the compute engine it uses under the hood. Read more in the datasets blogpost.

- A compute engine. If you do not provide one, a DuckDb will be inited for you ad-hoc.

- A storage destination - Data has to live somewhere. This can be any of the common storage destinations like SQL DBs and filesystem (storage buckets) with iceberg/delta/parquet formats.

- Ecosystem components to enable common production patterns

- a dbt & sqlmesh runner that allows you to run transform on the cache

- a fast-sync of transformed data to a serving destination, such as MPP SQL dbs or files + Catalogs

- a dlt-dbt generator that leverages metadata to scaffold dbt staging projects for dlt (sqlmesh variant is available in OSS)

Supported workflows

dlt+ can be used in many ways, but we suggest the following 2 workflows, replacing the same thing you currently do on the cloud. This enables faster development and higher data quality and lower cost.

- Staging in production. By using dlt → dlt+ cache → fast-sync to destination (for example Snowflake or Iceberg+ Polaris), you can do tests, data contracts or transformations before putting data into your production environment. This would essentially enable you to choose your compute engine for your test and transform workloads instead of being locked into the destination’s compute.

- Development testing. When we develop, we test everything from assumptions about the data, to our own code. By using the local cache, we remove or reduce waiting times and costs, and also solve the need for dev environments. Thanks to the cache’s universal access interface, once we developed our code locally we can also run the same code in prod.

Try dlt+ Cache

dlt+ Cache is the missing development layer for data engineering. Stop debugging in production, burning cloud costs, and waiting for slow queries, start building pipelines the right way.

Read more about dlt+ Cache in our documentation (wip).

🔹 Want early access? We’re rolling out dlt+ Cache to select teams. Sign up here!