SQL Benchmarking: comparing data pipeline tools

Aman Gupta,

Aman Gupta,

Data Engineer

Transferring data from SQL databases to data warehouses like BigQuery, Redshift, and Snowflake is a common part of data platforms. And with various tools available, how do you choose the right one for your needs? We conducted a detailed benchmark test to answer this question, comparing popular tools like Fivetran, Stitch, Airbyte, and the data load tool (dlt).

Our benchmark approach

We analyzed these tools across several key parameters:

- Cost

- Speed

- Ease of use

- Customizability

The dataset: TPC-H benchmark

We used the industry-standard TPC-H dataset for our benchmark, consisting of:

- Total size: ~6.42 GB in PostgreSQL

- 7 tables

- 24,604,860 rows

Environment used

For Airbyte, Fivetran, and Stitch data, we used their native cloud environments. For dlt, we used Google's Cloud Run to deploy the pipelines as jobs, utilizing the following configuration:

- vCPUs: 8

- Memory: 16 GB RAM

Hosting and Destination

- Database Hosting: PostgreSQL 15 on GCP Cloud SQL

- Destination: BigQuery

Key findings

Speed Analysis

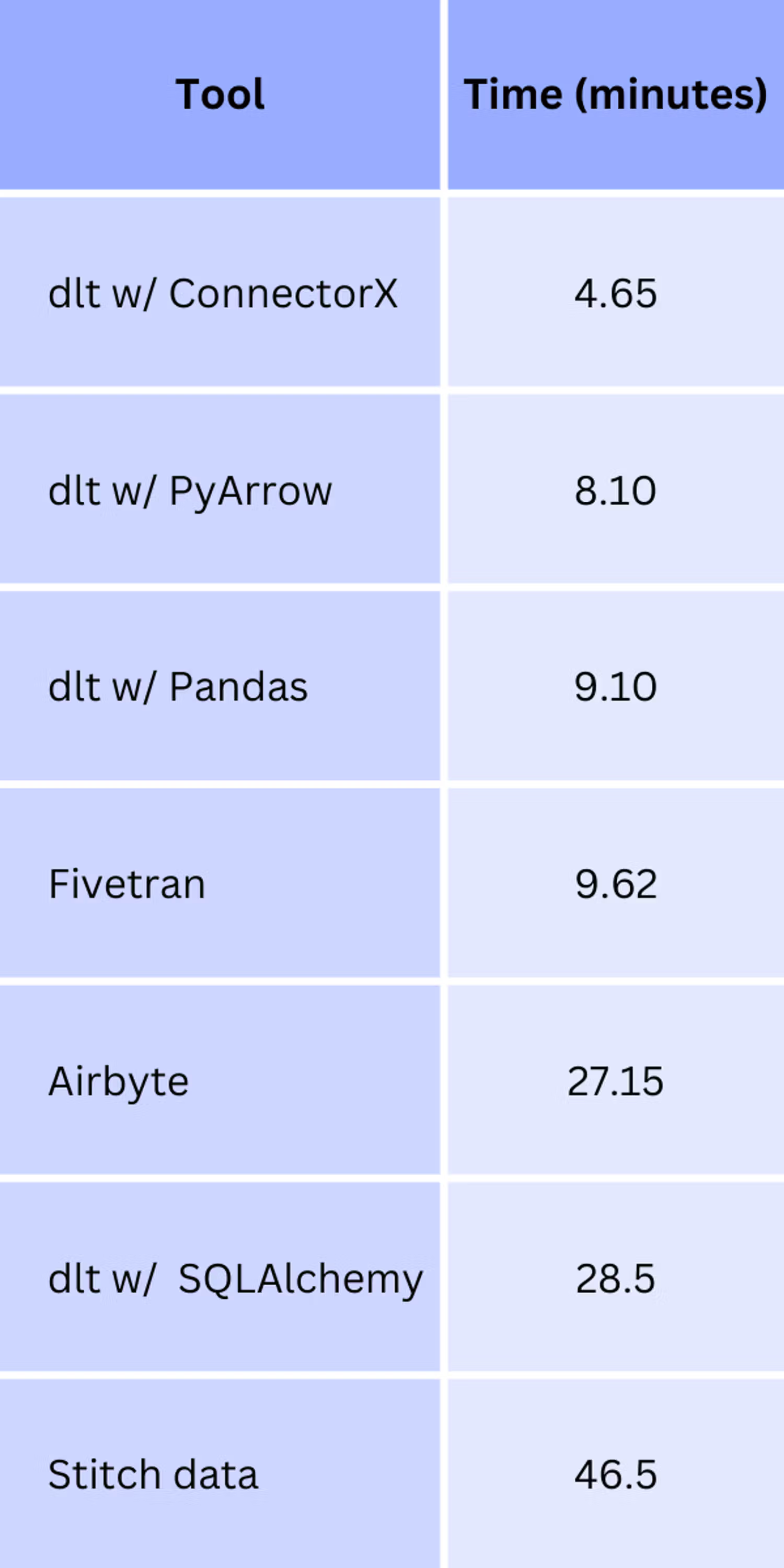

Here's how the tools performed in terms of pipeline execution speed:

Key Takeaways:

- dlt using the ConnectorX backend was fastest

- 2x slower than ConnectorX: dlt with Pyarrow or Pandas, Fivetran

- 6x slower: Airbyte, dlt sqlalchemy backend with default performance configuration.

- 10x slower: Stitch data

Cost analysis

With a sync frequency of once daily, here's how the tools compare in terms of monthly cost:

Key Takeaways:

By using dlt for your once-daily syncs, you can achieve substantial cost savings, spending only a fraction of what you'd pay with Airbyte, Fivetran, or Stitch Data while effectively managing your data pipelines.

Performance Tuning for dlt

The two different types of approaches (Infer types vs capture types) scale differently when tuned. Let’s look at ConnectorX and SQLAlchemy backends.

1. ConnectorX:

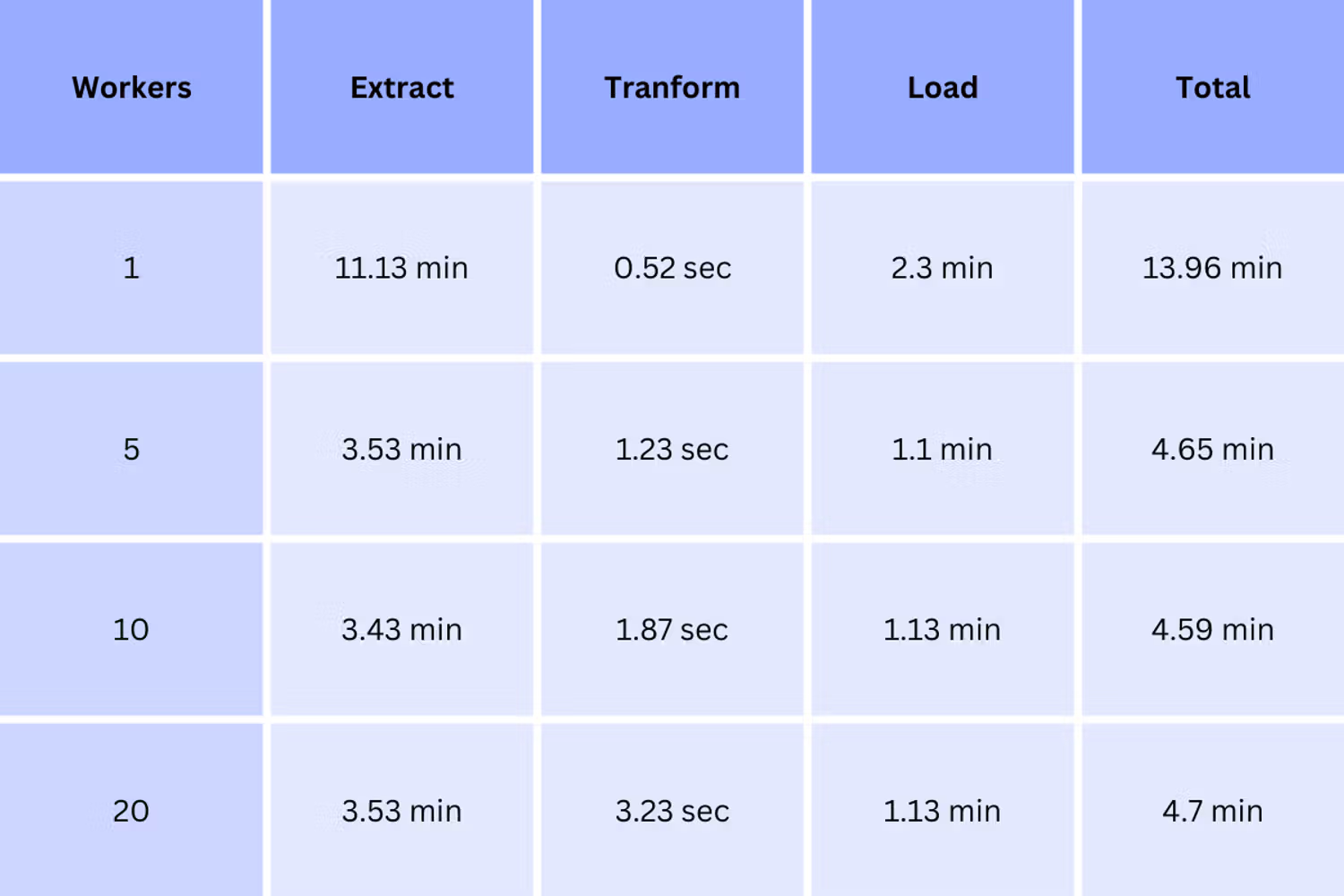

1.1 Parallelization impact

dlt has the option of using parallelized extraction while running the pipeline. Here's how parallelization affects:

- Without parallelism:

- Extract: 10.9 min

- Normalization: 0.56 sec

- Load: 1.13 min

- Total time: 12.58 min

- With parallelism (Using five workers):

- Extract: 3.53 min

- Normalize: 1.23 sec

- Load: 1.13 min

- Total time: 4.65 min

Key Takeaways:

- Time Reduction: Total pipeline time decreased significantly from 12.58 minutes to 4.65 minutes with parallelization.

- Extraction: Extraction time dropped from 10.9 minutes to 3.53 minutes due to parallel processing.

- Load Time: Load time remained consistent at 1.13 minutes across both configurations, indicating no change with parallelization.

1.2 Worker configuration impact

Next, we experimented with how the number of workers in extract, normalize, and load affected the pipeline run times. Since using ConnectorX means we do not have to transform the data, the transform time represents only the overhead of handing the chunks. A small amount of parallelism helps, but not a lot since there are limits on the database and network throughoput/ transfer time.

Key Takeaways:

- Scaling the workers from one to five provided a significant speed boost

- However, increasing the worker count beyond five offered minimal additional gains due to reaching the limits of source/destination answer time and network throughput.

2. SQLAlchemy:

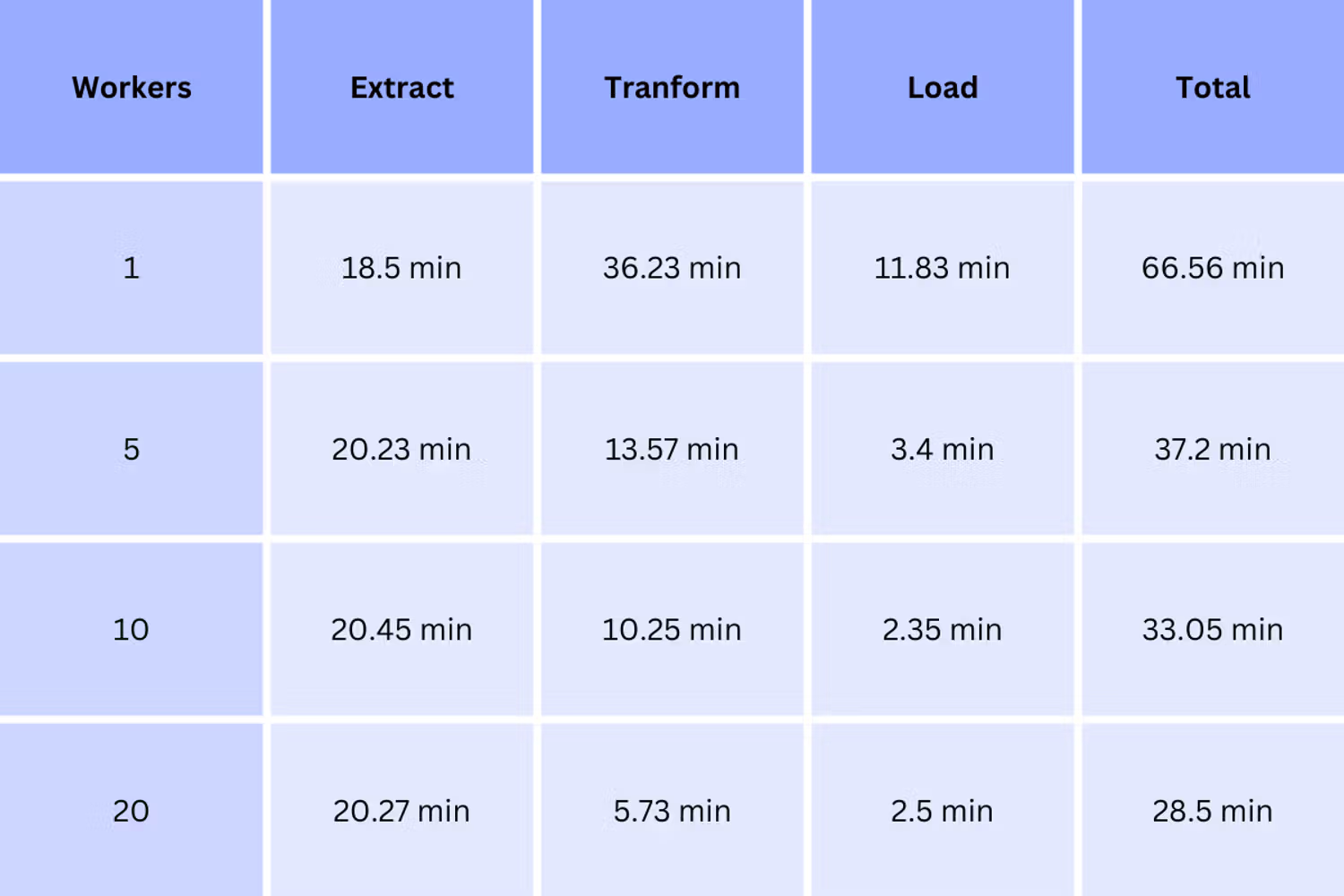

2.1 Parallelization impact

- Without parallelism:

- Extract: 18.5 min

- Normalization: 36.23 min

- Load: 11.83 min

- Total time: 69.5 min

- With parallelism (Using twenty workers):

- Extract: 20.27 min

- Normalize: 5.73 min

- Load: 2.5 min

- Total time: 28.5 min

Key Takeaways:

- Normalisation time reduced 84% by using parallelism

- Load time reduced 86% with parallelism

- Extract unaffected; Likely db or network bottlenecks.

2.2 Worker configuration impact

Since the SQLAlchemy backend deserialises and re-serialises the data, transformation time is large and can be reduced significantly with parallelism.

Overall, leveraging parallelism we are able to reduce the time to 40%, where we can no longer accelerate it due to network and database limitations.

Key Takeaway:

A little bit of parallelism goes a long way, but in the normalisation step we can make the biggest difference by using more compute.

Conclusion

This thorough benchmark demonstrated a few things:

- Saas vendors offer unoptimised solutions at 100-1000x price over cost

- Network and API bottlenecks limit pipeline performance significantly

- Python performance in EL is not a bottleneck. Optimisations like faster languages or more hardware have insignificant returns if code is optimised.

Need help optimising your pipelines?

Already a dlt user?

Boost your productivity with our new pip install “dlt[workspace]” and unlock LLM-native dlt pipeline development for over 8,800+ REST API data sources.