cognee: Scalable Memory Layer for AI Applications

Vasilije Markovic,

Vasilije Markovic,

Founder Topoteretes

The following article is written by dlt community user Vasilije, who uses dlt in his open source product, a semantic layer for AI. Read more about how cognee uses dlt and Motherduck on the Motherduck blog.

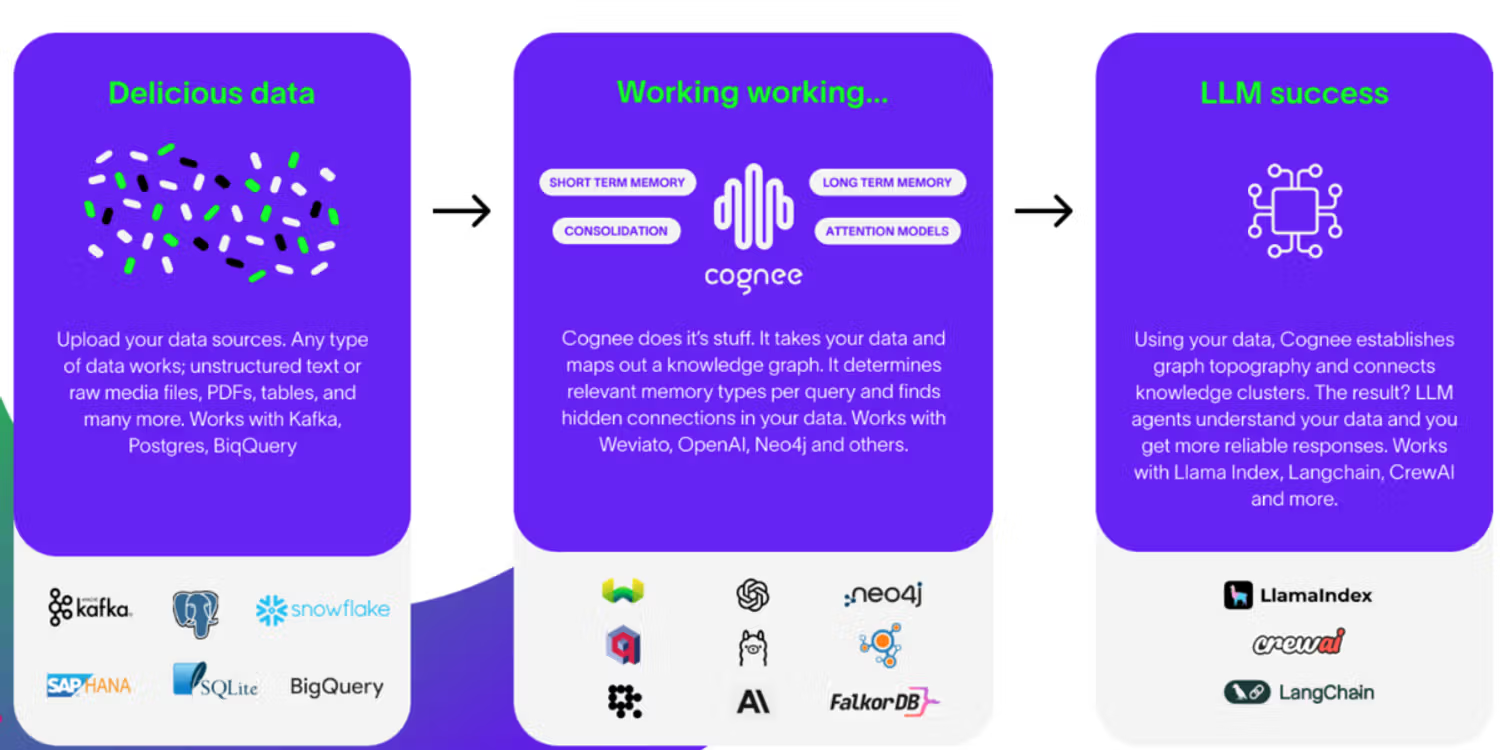

AI applications and Agents often require complex data handling and retrieval systems. Enter cognee, an open-source, scalable data layer designed specifically for AI applications. cognee implements modular ECL (Extract, Cognify, Load) pipelines, allowing developers to interconnect and retrieve past conversations, documents, and audio transcriptions while reducing hallucinations, developer effort, and cost.

Why cognee?

I started building the tool after going back to university to study psychology and seeing how understanding the context of a new environment changes the way a person thinks and influences them to understand and act on things differently. Around the same time, I noticed AI Agents appearing, and I felt we needed a better way to manage their context windows than what exists currently.

What Is cognee?

cognee is an open-source, scalable semantic layer designed for AI applications. It uses modular ECL (Extract, Cognify, Load) pipelines to help you interconnect and retrieve past conversations, documents, and audio transcriptions. This reduces hallucinations, cuts down developer effort, and saves costs.

Why Should You Care?

Data engineering for AI can be complex and time-consuming. Vector databases are not easy to use and after reaching some 70% accuracy, you usually are stuck with optimization techniques that don’t lead anywhere. cognee makes it easier by providing a reliable and flexible framework to create semantic layers. We simplify and extend the data layer so you can focus on creating great AI experiences.

Key Features of cognee

Modular ECL Pipelines

cognee's core strength lies in its modular ECL pipelines. These pipelines allow you to extract data from various sources, process and cognify it using AI models, and load it into your desired storage systems. For us, cognification is a part of building semantic layer of relationships and entities that can help LLMs reason better. Compared to traditional RAG, you have much more flexibility and control in managing your pipelines.

Graphs

cognee works on implementing graphs as a semantic layer in order to allow LLMs to reason better and understand their working context in a way it can improve results. We mainly focus on graph generation, retrieval and evaluations in order to build a more robust way to query vector/graph databases.

Flexible Storage Options

cognee supports multiple storage solutions for both vector and graph data. Whether you prefer LanceDB, Qdrant, PGVector, or Weaviate for vector storage, or NetworkX, Neo4j or FalkorDB for graph storage, cognee has you covered. This flexibility allows you to choose the storage solutions that best fit your project's needs.

LLM Integration

Integrate your preferred Large Language Models (LLMs) with cognee. You can use providers like Anyscale or Ollama to power the cognition phase of your pipelines, enabling advanced data processing and graph generation.

Getting Started with cognee

Installation

Installing cognee is straightforward. You can use pip or poetry to install it, with optional support for PostgreSQL:

pip install cogneeFor PostgreSQL support:

pip install 'cognee[postgres]'Basic Usage

Start by setting up your environment:

import os

import cognee

os.environ["LLM_API_KEY"] = "YOUR_OPENAI_API_KEY"

Alternatively, set your API key using cognee's configuration:

python

Copy code

cognee.config.set_llm_api_key("YOUR_OPENAI_API_KEY")You can also configure other settings in a .env file.

Creating Custom Pipelines

cognee allows you to create custom tasks and pipelines tailored to your business logic. Tasks can be independent units of work, such as data classification or enrichment, and can be combined into pipelines for complex data processing workflows.

For example, you can create a task to classify document chunks using an LLM:

async def chunk_naive_llm_classifier(data_chunks, classification_model):

# Task implementation here

Then, include it in your pipeline:

pipeline = run_tasks([chunk_naive_llm_classifier], documents)Use Cases and Examples

cognee is ideal for developers who need a reliable data layer for AI applications. Whether you're building a recommendation system, conversational AI, or any application that requires efficient data retrieval and processing, cognee simplifies the data engineering process.

Here's a simple example of using cognee to add text and search for insights:

import cognee

import asyncio

from cognee.api.v1.search import SearchType

async def main():

await cognee.prune.prune_data()

await cognee.prune.prune_system(metadata=True)

text = """

Natural language processing (NLP) is an interdisciplinary

subfield of computer science and information retrieval.

"""

await cognee.add(text)

await cognee.cognify()

search_results = await cognee.search(

SearchType.INSIGHTS,

"Tell me about NLP",

)

for result_text in search_results:

print(result_text)

asyncio.run(main())Future Developments

We're constantly working to improve cognee. Upcoming features include:

- Enhanced web UI

- More vector and graph store integrations

- Improved graph generation, retrieval and evaluations

- Improved scalability

- Integrated evaluations for optimal performance

Join Cognee community

Have questions or want to contribute? Join Cognee's Discord community and check out our documentation. We're transparent and always open to feedback.

Discord invite link: here.

GitHuB link: here.