Portability principle: The path to vendor-agnostic Data Platforms

Adrian Brudaru,

Adrian Brudaru,

Co-Founder & CDO

As data engineers, we like being empowered. Open source technologies like Iceberg are a promise in that direction - scalable vendor agnostic data platforms.

And while we all agree vendor locks are bad because they enable unreasonable pricing, there’s way more depth to the conversation besides cost.

What is portable compute?

We normally do not talk about portable compute in programming because programming runtimes are portable by default.

So let’s spell it out - what are we missing in databases? Let’s define the meaning of portability in our context:

- Interchangeable: A portable compute component can be replaced or swapped with another compute resource without significant changes to the rest of the data stack. For example, I could use Spark, DuckDB or Snowflake without having to re-write any code.

- Environment agnostic: It runs across multiple environments: Local, prod, on-premises, cloud, hybrid. For example, a compute engine could run on my laptop, get deployed to AWS while the free credits last, and could easily move it to GCP afterwards.

A history lesson on why the database ecosystem is not portable

In the early days of computing, both programming languages and databases were taking shape. Over time, they diverged in fundamental ways, especially in how they handled standardization, portability, and vendor lock-in.

Programming for programmers, databases for the organisation

Programming languages were designed for developers, who needed tools to write code that would work across different systems. The demand for portability and cross-platform functionality came directly from them because they needed their applications to run on various hardware and operating systems. This developer-centric focus drove languages to standardize and emphasize portability.

Databases, on the other hand, were designed for organizations and businesses with a focus on stability, performance, and long-term support. As a result, businesses were more willing to accept vendor lock-in if it meant getting the performance, support, and features they needed.

When in doubt, follow the money

Database vendors realized early on that they could control the market by creating proprietary features to make it hard to switch.

Programming languages didn’t face the same profit-driven incentive to lock people into specific ecosystems. Instead, the community-driven nature of language development, combined with the rise of open source, pushed for openness and cross-platform capabilities.

While programming languages pursued portability, databases followed a different trajectory—toward fragmentation and vendor lock-in.

SQL: A step toward Standardization… and another towards vendor lock.

The introduction of SQL (Structured Query Language) in the 1970s was supposed to be a unifying force. SQL was a standardized query language that theoretically could work across different databases.

However, while vendors agreed to support SQL, by adding proprietary features, they re-created the vendor lock-in that SQL standardisation was supposed to solve. Vendor goals were simple: keep customers tied to their ecosystem for as long as possible.

Cloud databases: Now we lose local-online portability.

With the rise of cloud computing, things got even more fragmented. Cloud providers like AWS Google Cloud and Azure introduced proprietary, cloud-native databases. These databases were optimized for their specific platforms and came with deep integration into their cloud ecosystems. While these databases provided scalability and speed, they also reinforced cloud-based vendor lock-in.

The current state of open compute

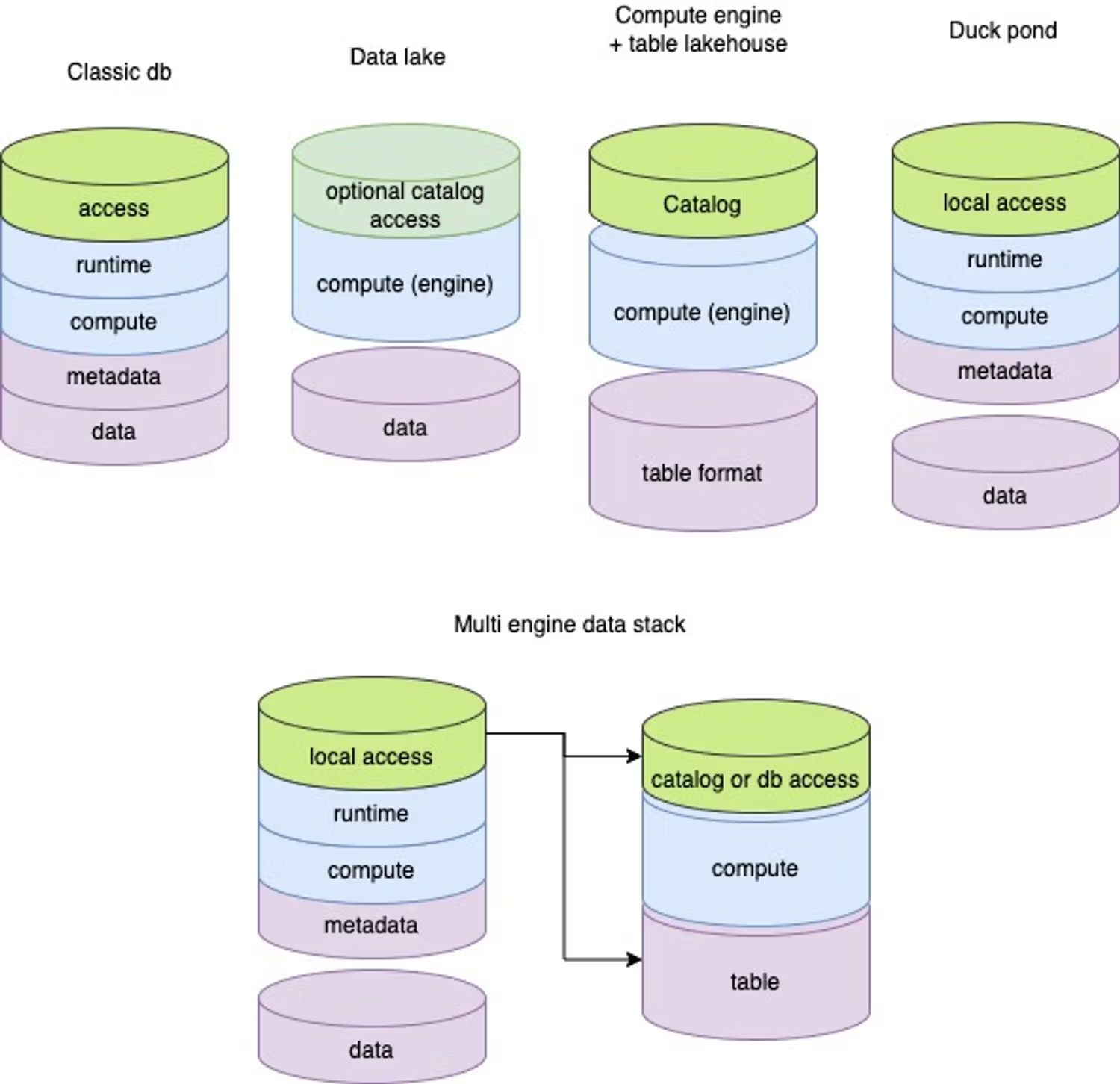

Currently, if you want portable compute, you have a few options in that direction, but none of them takes you all the way. Your options might look like this:

- Use an OSS dbms like Postgres or Clickhouse and scale it yourself.

- Data lake. Now we can do (python) programming instead of SQL, which is portable. Optionally manage access via catalog.

- Lakehouse. Cloud portable, some interchangeable compute.

- Duck Pond - a local duckdb+dbt pipeline that processes data. Scale it by putting it on different infras.

- Multi engine data stack: Use something for processing, such as Duck pond, and something else for serving - database or catalogue.

In other words, you can sometimes shift environment but your code will depend on runtime.

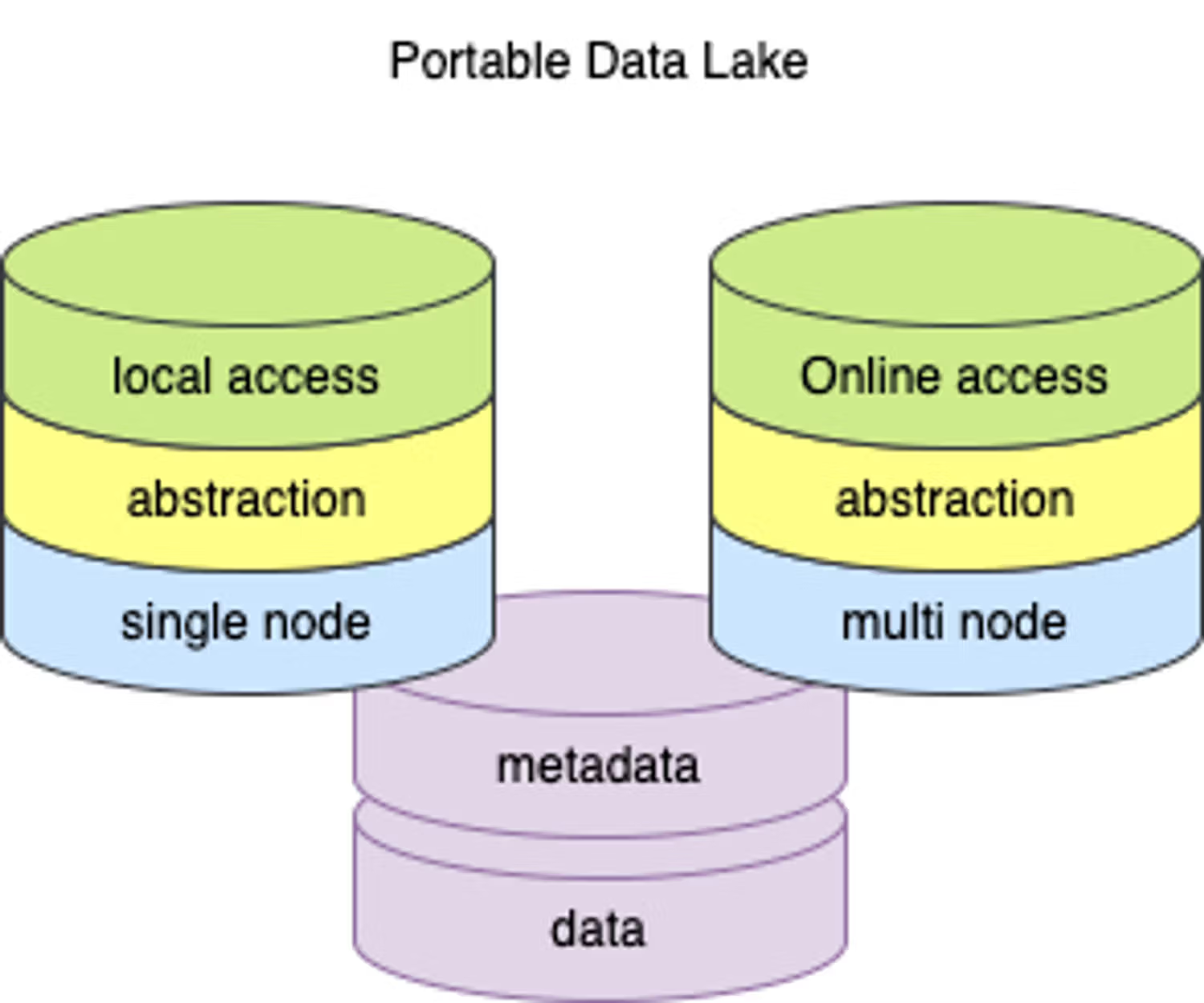

Achieving compute-agnosticism with an abstraction layer

The final piece of the open compute portability puzzle is technology agnosticism. Adding an abstraction layer on top of the compute can serve as a standard.

This would enable, for example to run the same code locally on DuckDB as we do on the cloud on Snowflake, for instance. This would mean doing away with the extensions offered by the different databases (which is how the vendor lock is created), essentially prioritising portability over special functionality.

Local doesn’t mean just your computer

It’s worth reiterating that local in this context doesn’t only mean your computer. It means portable. It means airflow workers, docker images, or just about any runners anywhere. This means you could run “duckdb local” on serverless github actions and sync the outcomes to another online location.

Similar to the thinking behind the multi engine data stack, we can treat the final data destination as a simple online high speed serving layer, whether that’s a catalogue or a database.

Examples in practice:

- Harness uses dlt + SQLMesh to achieve vendor agnostic SQL, developing locally on DuckDB and running in prod on BigQuery.

- PostHog writes headless delta lake with dlt

Extensions as a governed service

But what if your favorite database has that sweet extension that you just gotta use? How to keep standardisation and use customisation?

The answer could be to decouple the usage of an extension from the core sql access. If the data exists somewhere externally of a vendor system, we could wrap the specific functionality into a separate service, which could be programatically governed though the same access permission system governing the rest of the data.

Catalogs now function like a vendor lock the same way databases did it 50 years ago.

Ultimately, that data needs to be surfaced somewhere. Vendors currently use catalogs to enforce lock-in by deeply integrating metadata management, access control, and performance optimizations into their ecosystem.

When you adopt a vendor's catalog, like AWS Glue or GCP Dataplex, it becomes central to how you manage schemas, data access, and security. This tight coupling means the catalog is optimized to work exclusively with the vendor’s storage and compute services, making it difficult to seamlessly move data and workflows to another platform without significant effort.

The lock-in deepens because these catalogs often offer advanced features—like query optimizations or automated data partitioning—that are vendor-specific. Moving away from the vendor requires replicating these functionalities on another platform, often at the cost of rebuilding parts of your data stack. This creates a dependency that makes switching vendors a technically complex and resource-intensive process.

The future is portable.

Reducing risk with metadata portability.

Semantic data contracts are coming.

Semantic data contracts define rules for how data is structured, accessed, and governed, starting from the moment data is ingested. Similar to data mesh governance APIs which validate such rules, semantic data contracts allow governance to be baked in upfront and executed at runtime, ensuring that data is well-governed no matter where it flows.

A data catalog contains the kind of metadata that is expressed in a semantic data contract. This means that the catalog itself in this case becomes a simple reflection of the contract. This lowers the risk of further vendor locking by reducing the online catalog to an access point, instead of a source of metadata truth.

Breaking vendor locks ignites competition

Vendor locks are put in place to create a barrier to competition. This means that removing these locks pushes vendors towards more competition, potentially leading to an acceleration of development of solutions in this space.

This may also lead to increased inter-operability, as we are already starting to see. Vendors will need to fit their offering into how consumers use their solutions. At the same time, consumers might adopt these abstraction layers so they can take advantage of the competitive ecosystem, opening new opportunities.

The portable data lake is a bridge to the future

The current state of the ecosystem towards breaking vendor locks is best described as “incomplete”. By creating a portable data lake as a kind of framework where components are vendor agnostic, we are able to take advantage of the next developments quickly. Read more about the portable data lake.