Portable data lake: A development environment for data lakes

Adrian Brudaru,

Adrian Brudaru,

Co-Founder & CDO

What if we had a portable data lake?

- complete with catalogue of sources,

- cache,

- SQL and python runtime

- runs locally or online, in a common way, with no differences.

- that can be shared to other colleagues for collaboration

- and easy deployment to production?

1. Current state of local development

Local dev environments used to be a problem for all developers

In software engineering, setting up local development environments was a huge pain 10 years ago. It was common for engineers to spend hours, if not days, trying to replicate production setups on their local machines. But over time, tools like Docker and advances in CI/CD pipelines have mostly solved this problem, giving software engineers a smooth local dev experience.

For data professionals working with data lakes, though, we’re facing the same challenge software engineers dealt with a decade ago. Setting up local environments to work with large-scale data is still a struggle, with issues around data access, scalability, and governance. We often spend too much time configuring environments rather than building and testing solutions.

What complicates the problem is that the solution is not the same as in software engineering - data problems use different tech stacks, and they need data. Similar to how data mesh is a recipe for applying microservices to data, what we need for consistent local development is also an adaptation that makes data and its governance a first class citizen.

Emergent solutions for data devs: data ponds

Data pond. What is it? It’s a simplified, flexible version of a data lake that enables data engineers to work with smaller, manageable datasets without the complexities of full production environments. In practice, a data pond allows quick local experimentation and development, providing a space to test ideas, build prototypes, and iterate rapidly. Engineers can use it to bypass the heavy governance and scale-related challenges of a fully governed data lake.

However, the trade-off is that data ponds come with notable limitations. While they allow for faster iteration, they lack the structure and governance that production environments require. This means that crucial elements like data quality, security, and compliance are often overlooked. When moving from a data pond to production, engineers might face unexpected issues—what worked locally may fail to scale, or critical governance policies might be missing.

Additionally, data ponds often don’t replicate the complexities of real-world workloads. While they’re helpful for prototyping, they don’t offer the same robustness or ability to simulate large-scale data processing, meaning engineers may miss performance bottlenecks or scalability challenges until they reach production. In short, a data pond allows you to move fast, but it doesn’t always help you move to production.

Examples of bad data ponds:

- A google drive folder containing 4x 1.5GB CSV and 120 more 150GB files which are versions of the same 3 tables. What? How? I don’t know, will probably take months to untangle. Oh, user data too? Who has access to this? ffff……

- My personal s3 bucket with copies of everything. I might need it someday, the code doesn’t run without it…

- The data in Downloads/users_v12.csv you forgot to delete. Right to be forgotten? indeed

- My pycharm project or git repo.

2. A necessary direction

The gap remains

So, while data ponds help address the need for speed in local development, they fall short as reliable environments for testing production data systems.

The answer might lie in building a more opinionated, governed solution: one that brings the agility of a data pond together with the controls of a data lake. This leads us to the concept of a governed data pond: a local development setup that maintains agility but incorporates the necessary governance and scalability required for transitioning smoothly to production. Or in other words, a portable data lake.

Proposing a Solution: The portable data lake - a pip install data platform

Why pip install? A distinguishing feature of local-production parity is the ability to install the technologies you want anywhere - otherwise, they would not be portable. While they don't technically need to be pip install, the expression underscores the concept and trend.

Current state of data pond:

- :) it contains a dev environment for dev work

- :) it often contains a runtime that enables you to run your code.

- :) it contains data that enables you to develop.

- :( It misses governance and as a side-effect, it misses an easy path back into prod.

- :( it misses portability and parity between local and online technologies.

- :( it’s a custom, “single player” environment that is unsuitable for team collaboration.

But what data pond has going for it, is that it's more or less a stack of pip installable technologies. So if it works on local, it could work on cloud too. The other way would be more difficult.

Our list of “Shoulds”

- We should have uniform governance without overhead, so we can integrate our work back into prod. Things like versioning, source catalogs and profiles with preconfigured access rights.

- We should leverage open standards to enable local to online technology parity.

- It should be cost-optimised because development can be intensive. Low egress/ingress fees, cheap storage and flexible compute are the relevant topics.

- Unified development environment - something the whole team can use, consistent enough in its deployment but also offering the freedom of how to solve the problems. dbt and SQL should be supported equally well as Python and spark. A runtime for these things should be included in the pond.

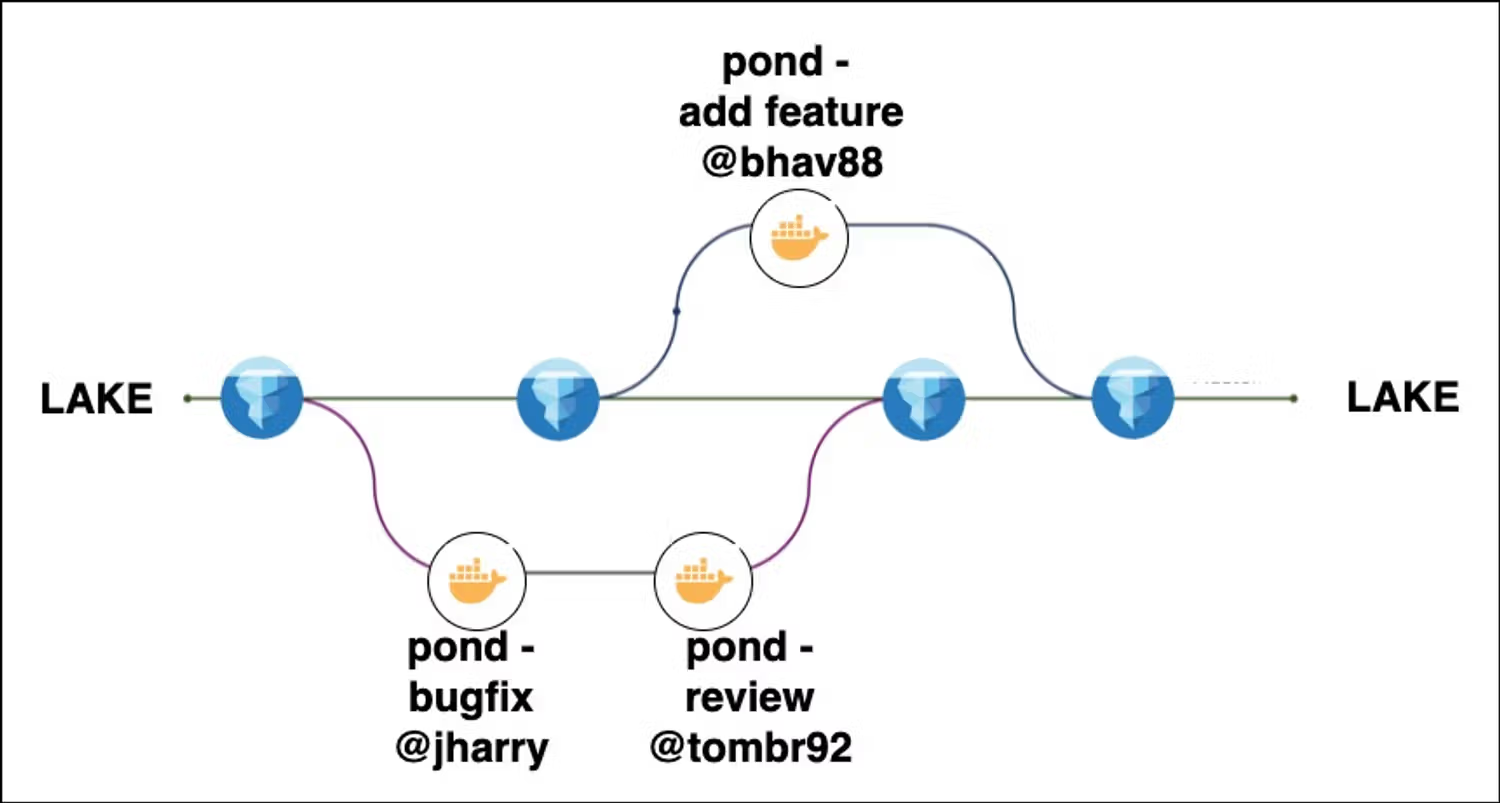

- We should have portability of this environment, another team mate should be able to review or build on it.

- Vendor agnostic interface: By using components like dlt and ibis, we can achieve a single flavor of code that runs on any vendor’s tech. dlt schemas and ibis universal sql/python wrapper make this possible. Write SQL or python once, run it anywhere, and break vendor locks. Additional benefit: Separate business logic from runtime flavor. Your business logic should run anywhere.

- Yaml enabled workspace is a nice to have, because a declarative layer simplifies the configuration of this environment.

It’s starting to look like Github/Docker for local data development. Except, we need a data runtime inside it too. Our portable data lake might contain DuckDB, S3, Arrow, Iceberg, Delta and other such technologies stitched together with governance.

Our proposed solution’s key features

- Integrated Data Cache - A smart caching system that minimizes latency and costs, enabling rapid data exploration, transformation, and visualization, while syncing back to the lake or warehouse.

- Lake Engine - A local data lake engine that enables technological parity to an online data lake. This would enable running things like dbt core, sql queries and python code against the data without reaching for online components.

- Governed pipelines - Similar to how data mesh uses governance apis for semantics, dlt uses semantic data contracts to solve the same issue without overhead. This enables governed access and creation of data products that are usable across the wider organisation.

- Unified data access - A universal python/sql layer that maps to any runtimes under the hood keeps a separation between business logic and vendor runtime flavors enabling common code across teams, runtimes and future developments.

- Fast-track to production - Imagine a quick-sync between your local lake env and your colleagues - or merging it into production. By packaging this environment into something as easy as a CLI install, it’s not just a cache sync for data, but also an easy way to get your code from a local prototype to a governed data product. Code deployment, data movement, and governance all come together to navigate over these hurdles in a fast, pre-agreed way.

- It’s a portable, configuration-first environments that enables all of the above.

What are the outcomes of implementing the portable data lake?

Implementing a portable data lake brings several key outcomes that benefit both data engineers and organisations. Here's what you can expect:

Relentless efficiency

Deliver data products without getting bottlenecked.

- Faster development: The portable data lake accelerates software development and collaboration by simplifying environment setup and deployment, providing a uniform interface for the team. The local cache enables rapid experimentation.

- Cross-Team collaboration: Removing vendor locks improves collaboration across larger organizations, allowing teams using different technologies to access and work with the same data seamlessly.

- Optimized compute: The portable data lake opens up compute flexibility, enabling teams to choose the best option for their specific needs, whether local or cloud-based.

- Less complexity: By decoupling development from production, teams can use local or cached data while maintaining consistency with the data lake. Engineers focus on building, not managing infrastructure.

- Reduced Costs: A mix between online or local execution minimizes reliance on cloud services, cutting down cloud resource usage and costs.

- Agile technology choices: Built on open standards like Delta Lake and Parquet, the portable data lake ensures no vendor lock-in, giving teams the flexibility to adopt new technologies or stick with familiar ones as needed.

Governance, Data Democratization, and Collaboration

The portable data lake ensures robust governance while enabling seamless collaboration across teams.

- Data Mesh Ready: Semantic data contracts validate datasets, just as data mesh governance APIs do. These contracts enforce access policies across different environments, such as restricting PII data from AI teams or other sensitive use cases.

- Unified Data Access: A consistent interface allows everyone—from analysts to engineers—to work with the same data while maintaining governance controls.

- Empowered Teams with Safety Controls: Role-based policies ensure that teams can develop, test, and share data independently. For instance, PII data might be restricted from AI teams during development, while analysts may have limited write permissions until the pond is deployed to production.

- Automated Data Accuracy: Semantic data contracts enforce data quality checks and validations, ensuring data accuracy and minimizing errors throughout the pipeline.

- Effortless Auditing: Built-in data lineage tracking simplifies auditing and ensures compliance with regulations like GDPR or HIPAA, with minimal manual effort.

3. Build It with dltHub

At dltHub, we've been dedicated from day one to accelerating data workflows from development to production. We believe that data engineering should be as agile and efficient as modern software development. That's why we've developed tools that embrace open standards and streamline the entire data pipeline.

Introducing the portable data lake with dltHub

Our portable data lake leverages open standards like Delta Lake, Parquet, and Iceberg to provide you with significant efficiency gains. But it's more than just saving time—it's about unlocking new development paradigms that elevate data engineering to the level of sophistication found in software development. Here's how dltHub makes it happen:

- Integration with Open Standards: By building on open formats, we ensure compatibility and avoid vendor lock-in. Your data remains portable and accessible, giving you the freedom to choose the best tools for your needs.

- Accelerated Development Cycles: With our OSS tool package, you can quickly ingest, transform, and load data using semantic data contracts. This ensures data quality and governance are embedded from the start, reducing errors and speeding up the path to production.

- Unified Data Access and Transformation: Our integration with technologies like DuckDB, dbt, and Ibis provides a unified interface for SQL and Python. Write your business logic once and run it anywhere, separating it from vendor-specific nuances.

- Collaborative Environment: The portable data pond is designed for team collaboration. Share your environment with colleagues effortlessly, enabling peer reviews, joint development, and smoother handoffs between teams.

- Governance Without Compromise: We embed governance directly into your data pipelines through semantic data contracts and role-based access controls. This means you can move fast without sacrificing compliance or data quality.

- Fast-Track to Production: Transitioning from local development to production is seamless with dltHub. Our tools handle environment setups, dependency management, and deployment, so you can focus on delivering value.

Join the Next Generation of Data Engineers

We're pushing the boundaries of what's possible in data engineering, bringing it closer to the agility and efficiency of software development. If you're passionate about innovating and want to be part of this transformation, we'd love to collaborate with you.

Or perhaps you are only interested in solving a sub-set of the described problems? Here's how we helped PostHog build an open standards data lake for thousands of users: Case study.

Partner with us to shape the future of data development. Join our waiting list or become an early design partner.