The feature we were afraid to talk about

Adrian Brudaru,

Co-Founder & CDO

The scaffolds that worked most of the time, but sometimes were just trash

Every new technology starts with a good idea. For us, the arrival of powerful LLMs sparked one: what if we could automate the most tedious part of data engineering? What if we could point an AI at any API documentation and have it instantly generate a "scaffold": the context an LLM needs to kickstart or maintain a data pipeline?

Explainer: A scaffold is an LLM context made of distilled source docs and rules. Because the distilled source documentation is written in standardised terminology that matches the rules, even small models with limited context can do the final assembly.
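To make the explainer concrete, here is a minimal Python sketch of what a scaffold could look like as a data structure. The `Scaffold` class, its fields, and the `render()` layout are hypothetical illustrations, not dlt's actual scaffold format. The point it shows is the split: distilled facts and assembly rules are kept separately, then concatenated into one context block a model can read.

```python
from dataclasses import dataclass, field


@dataclass
class Scaffold:
    """Hypothetical scaffold: distilled source docs plus rules, rendered as LLM context."""

    source_name: str
    # Facts from the source docs, written in the same terminology the rules use
    distilled_docs: list[str] = field(default_factory=list)
    # Rules that tell the model how to assemble a pipeline from those facts
    rules: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Concatenate rules and distilled docs into a single context string."""
        lines = [f"# Scaffold: {self.source_name}", "", "## Rules"]
        lines += [f"- {rule}" for rule in self.rules]
        lines += ["", "## Source docs"]
        lines += [f"- {doc}" for doc in self.distilled_docs]
        return "\n".join(lines)


if __name__ == "__main__":
    scaffold = Scaffold(
        source_name="example_api",  # hypothetical source
        distilled_docs=["base_url: https://api.example.com/v1", "endpoint: GET /users"],
        rules=["Refer to the API root as base_url", "List each endpoint as METHOD /path"],
    )
    print(scaffold.render())
```

Because the docs and the rules share one vocabulary, the model only has to match terms rather than interpret raw documentation, which is what lets a smaller model do the final step.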

That was the promise of our v1 scaffolds. We built a pure, AI-native system. It read the docs, interpreted them, and produced a ready-to-use configuration. And honestly, it was a pretty good idea. It worked often enough to show a glimpse of the future, saving developers from hours of manual research.

But as practitioners, we know that for a tool to be truly useful, "a good idea" isn't enough. For an engineer, a tool that works "most of the time" is just a different kind of broken. Left to its own devices, the LLM would sometimes invent plausible but incorrect details, forcing a frustrating debugging loop that undermined the initial time savings. When that happened, the model would either never succeed or navigate to the original online docs to fix the issues, which meant the source side of our scaffolds did more harm than good.

We weren't fully satisfied: we wanted the source side of the scaffold to be helpful, or at least not get in the way. So we went back, not to scrap the idea, but to perfect it.

The breakthrough came when we relied less on LLMs, which are expensive and unpredictable. Instead, we switched to a deterministic parser with verification steps to get a high-quality extract of the facts first, and only then filled in the gaps with LLM-based semantic parsing. The goal of v2 was to build a system that leverages the strengths of both approaches.
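The ordering described in that paragraph (deterministic extraction first, LLM only for the gaps) can be sketched as a small Python function. This is an illustration under stated assumptions, not the actual v2 code: the field names in `REQUIRED_FIELDS` are hypothetical, and `llm_extract` is a placeholder for whatever LLM call does the semantic parsing. Each value records where it came from, so LLM-filled values can be flagged for extra scrutiny.

```python
from typing import Callable

# Hypothetical set of facts a scaffold needs for one source
REQUIRED_FIELDS = ("base_url", "auth", "pagination")


def merge_facts(
    parsed: dict[str, str], llm_extract: Callable[[str], str]
) -> dict[str, dict[str, str]]:
    """Prefer verified parser output; call the LLM only for fields the parser missed."""
    facts: dict[str, dict[str, str]] = {}
    for name in REQUIRED_FIELDS:
        if name in parsed:
            # Deterministic, verified fact: no LLM call needed
            facts[name] = {"value": parsed[name], "origin": "parser"}
        else:
            # Gap: fall back to LLM semantic parsing and record that origin
            facts[name] = {"value": llm_extract(name), "origin": "llm"}
    return facts


if __name__ == "__main__":
    calls: list[str] = []

    def fake_llm(field_name: str) -> str:
        # Stand-in for a real LLM call; records which fields were requested
        calls.append(field_name)
        return f"<llm guess for {field_name}>"

    result = merge_facts({"base_url": "https://api.example.com/v1"}, fake_llm)
    print(result)
    print("LLM was asked for:", calls)
```

The design choice is that the LLM never overrides a verified fact; it is only consulted when the parser came back empty, which keeps cost down and confines hallucination risk to the fields that genuinely need interpretation.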

The iteration: use LLMs only where necessary, rely on deterministic programming everywhere else

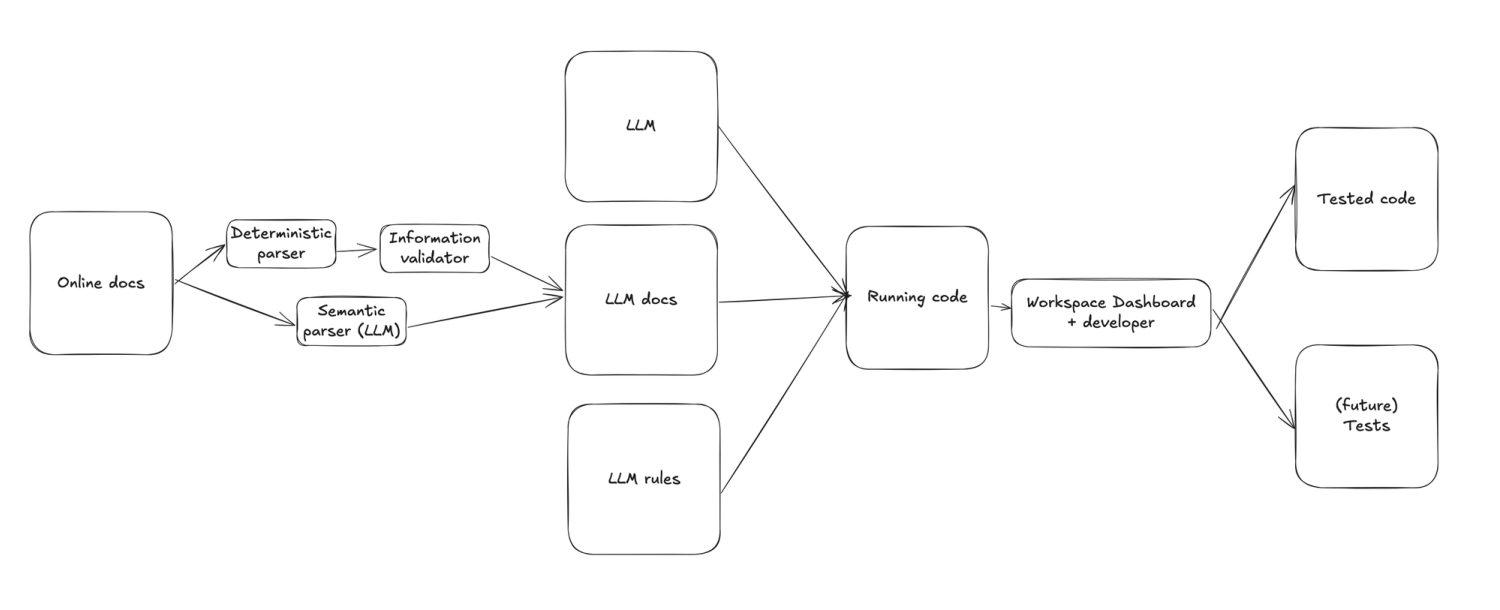

Here’s a look at how our new v2 workflow works, from scaffold generation to pipeline creation.

As the diagram shows, we implemented a hybrid approach. We needed to separate the job of finding facts from the job of understanding free-text meaning. The main improvement was grounding the LLM in facts and sources.

- Test-driven deterministic engineering first: Our new system now leads with a deterministic parser and information validator. This is pure, reliable code that scans the source’s documentation to identify and confirm hard facts, like API endpoints.

- AI for complex semantics: At the same time, an LLM-based semantic parser reads the documentation for the context and nuance that code alone cannot capture, such as free-text notes on authentication or pagination.

- Pointers to reality: Finally, we noticed that LLMs would navigate to the original docs whenever our distilled docs were insufficient, so we added explicit pointers back to the original documentation to help them do that when needed.
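A deterministic extractor with a validation step, plus a pointer back to the original page, could look roughly like the following Python sketch. The regex, the `min_mentions` rule (an endpoint counts as confirmed only if the docs mention it at least twice, for example in a reference table and in an example), and the brace check are assumptions chosen for illustration; the post does not describe how the real parser and validator work.

```python
import re
from collections import Counter

# Matches mentions like "GET /users/{id}"; the path ends at any character
# outside word chars, "-", "/", "{", "}" and "." (e.g. whitespace or "?")
ENDPOINT_RE = re.compile(r"\b(GET|POST|PUT|PATCH|DELETE)\s+(/[\w\-/{}.]*)")


def extract_endpoints(
    doc_text: str, doc_url: str, min_mentions: int = 2
) -> list[dict[str, str]]:
    """Extract endpoints from docs text, keep only validated ones, attach a doc pointer."""
    counts = Counter(
        (m.group(1), m.group(2).rstrip("."))  # drop a trailing sentence period
        for m in ENDPOINT_RE.finditer(doc_text)
    )
    confirmed = []
    for (method, path), mentions in sorted(counts.items()):
        if mentions < min_mentions:
            continue  # seen too rarely to count as confirmed by this heuristic
        if path.count("{") != path.count("}"):
            continue  # malformed path parameter, likely an extraction error
        # "source" is the pointer back to the original documentation
        confirmed.append({"method": method, "path": path, "source": doc_url})
    return confirmed


if __name__ == "__main__":
    docs = (
        "List users with GET /users.\n"
        "Example: GET /users?limit=10\n"
        "Fetch one user: GET /users/{id}\n"
        "See GET /users/{id} for fields.\n"
        "Deprecated: DELETE /legacy\n"
    )
    for endpoint in extract_endpoints(docs, "https://docs.example.com/api"):
        print(endpoint)
```

The `source` field plays the role of the "pointer to reality": when a fact is missing or looks wrong, the model has a URL to return to instead of guessing.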

The final scaffold you receive is a synthesis of these two paths. It’s a blueprint built on a foundation of verified facts, enriched with AI-driven insights.

The new scaffolds are dramatically more reliable. The "it just works" moments happen far more often, and because we now embed pointers back to the original documentation, the "this will never work" moments happen far less often.

We’re excited to share this new version because it represents a hard-won lesson in building with AI. Our scaffolds have evolved from an idea into a tool, and we’re happy it’s now useful enough to share with confidence.

We invite you to try them and feel the difference.

- Try the LLM-native workflow

- or explore 3000+ LLM scaffolds

Already a dlt user?

Boost your productivity: run `pip install "dlt[workspace]"` to unlock LLM-native dlt pipeline development for over 4,500 REST API data sources.