Migrate your SQL data pipeline from Fivetran to dlt

Aman Gupta,

Aman Gupta,

Data Engineer

Introduction

SQL exists in many flavors, including MySQL, Postgres, SQLite, Oracle, Microsoft SQL Server and many more. Often organizations need to send their SQL data to destinations like BigQuery, Redshift, Snowflake, and Databricks to enable advanced usage or analytical capabilities. Tools like Fivetran are good choice for migrating and syncing data from SQL to these destinations. However, there are scenarios where you might need more control and customization over your data migration. This is where open-source tools like come into play.

In this guide, we will explore how to migrate your SQL data pipeline from Fivetran to dlt. While we focus on MySQL in this example, a similar approach can be applied to other SQL databases.

The need for migration: Recognizing the call

We conducted a deep dive comparative analysis between Fivetran and dlt. Fivetran excels in ease of use, built-in logging, and monitoring. On the other hand, dlt offers several key advantages like cost, speed, customization, transformation capabilities, granularity, and control. The numerous advantages of dlt make it the better choice for data teams that run their own pipelines.

Major factors that make dlt a winner are cost and speed. In Fivetran, syncing 4 tables with 50k rows synced every 6 hours would cost roughly $1000 monthly. Now, if your tables have millions of rows, the cost can be prohibitively high. dlt places no such cost slabs based on the size of data; it is free no matter how big your data is, and running it on various infrastructures to load this data would cost somewhere in the region of $10/month give or take depending on provider. In this case study Talktile uses dlt with AWS lambda for creating a low-code, high volume data processing platform.

Read the full case study: How Taktile built their compliant data stack with dlt and Snowflake.

Speed is another critical factor. In dlt, scalability through iterators, chunking, and parallelization, as well as yielding pages instead of rows, makes it perform faster than Fivetran. Some dlt users have reported a performance boost of 5-10x when migrating from Fivetran to dlt. We did some benchmarking for speed comparing various dlt backends and Fivetran:

Dataset used: UNSW-NB15

Rows synced: 2.1 million

So, from the results above we can see that dlt improved loading speed by 3x.

In this other case study, Yummy.eu costs reduced by 182x and loads 10x faster by using dlt.

For any organization that wants to reduce its data ingestion costs and improve ingestion speed, open-source dlt seems to be a better choice.

Preparing for the migration

In this tutorial we’ll be migrating SQL-BigQuery pipeline running on Fivetran to dlt , as follows:

Step 1: Pause Fivetran pipeline

Let’s assume that you are using your already-built and running Fivetran pipeline. Our first step would be to pause the already running SQL pipeline as follows:

Steps to follow:

- Navigate to the "Connectors" section on the left panel.

- Choose the MySQL connector used for transferring data.

- Click the pause button to stop data syncing from MySQL to BigQuery.

- Note the date and time that you pause the pipeline on, as it is to be used in configuring the

dltpipeline.

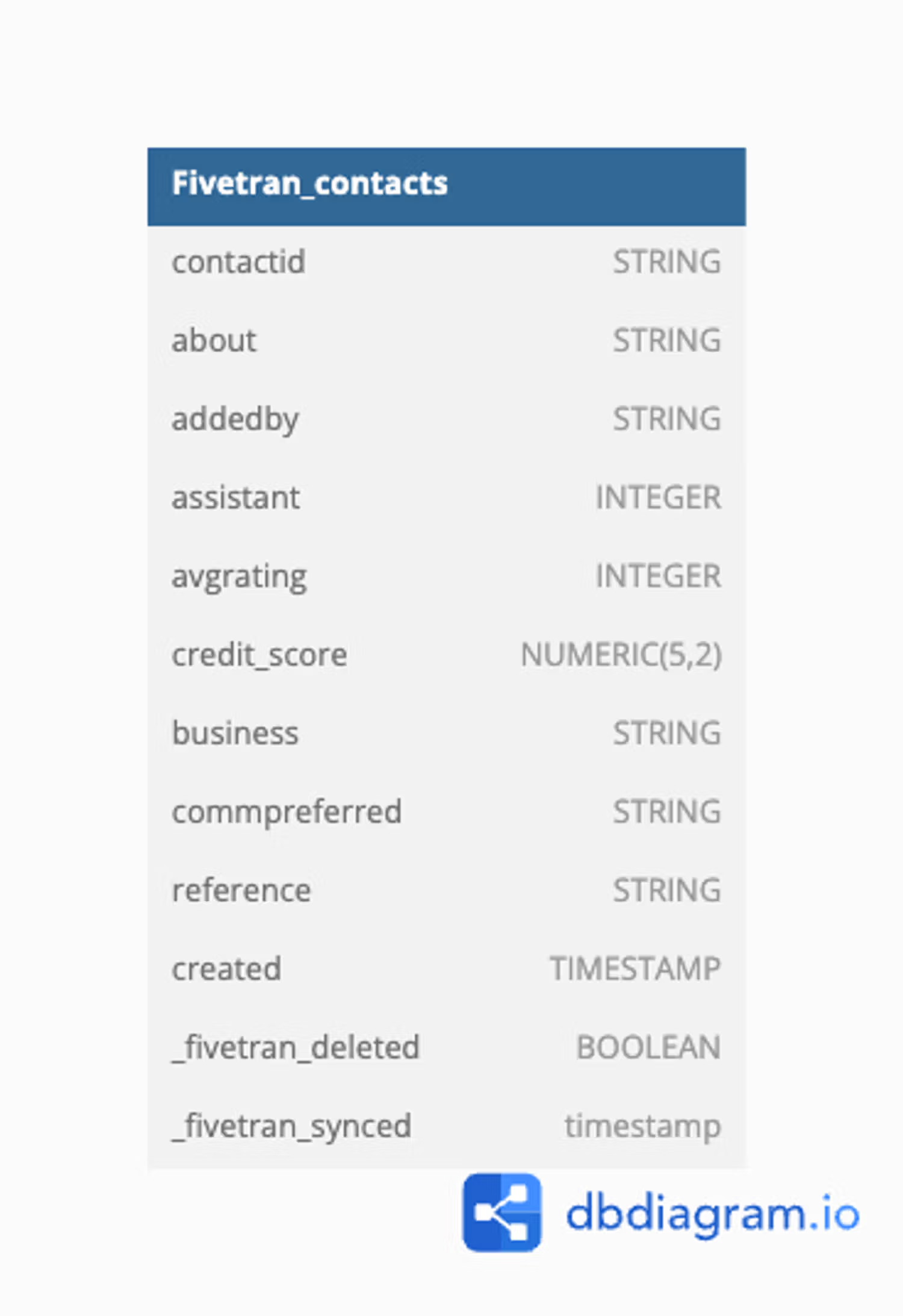

Let’s look at the “contacts” table created by Fivetran in BigQuery named “Fivetran_contacts”. Here’s the schema:

DB diagram: Fivetran_contacts.

IMPORTANT: In this guide, we will focus on migrating the 'contacts' table. The approach outlined can be similarly applied to migrating any other table from any SQL source or any other source.

Step 2: Create a new dlt Pipeline with incremental loading

With the Fivetran connector paused, any new data flowing into SQL post-migration cutoff needs to be captured. Here, dlt becomes our new vessel. And we create a pipeline to handle incremental loads, ensuring no new data is lost in transition.

Here are the steps:

2.1. Initialize the dlt Pipeline

To begin, initialize a dlt pipeline for SQL database. This involves setting up the pipeline with the desired source (SQL database) and destination (BigQuery, in this case).

dlt init sql_database bigqueryThis command creates a new directory with the necessary files and configurations.

2.2. Pipeline Setup Guide

To learn to set up the SQL database-BigQuery dlt pipeline, please read our verified source setup guide here.

2.3. Setup dlt pipeline

Next, we'll set up a pipeline to extract data from MySQL and load it into BigQuery. We'll configure this pipeline to load the data incrementally.

How to do incremental loading with dlt?

- Here is a walkthrough for SQL tables in our docs: Link to the new docs

- For more information about incremental loading, please refer to the official documentation here.

An example:

dlt enables you to do incremental loading. In this context, we need to give our pipeline a reference in time after which it should fetch the data from MySQL, so we’ll use the created column to fetch records created after the initial load date(which in our case is the Fivetran migration pause date).

dlt uses sources and resources to represent sources and their endpoints/tables. In the SQL pipeline, our source is the schema and the resource is a sql_table resource. We will specifically discuss how to load the "contact" table incrementally using the corresponding resource starting from an initial value (where we left off in 5tran). This will allow us to load data added after a specific date and time.

Here’s an example of a pipeline loading the “Contact” table incrementally.

def load_standalone_table_resource() -> None:

"""Load a few known tables with the standalone sql_table resource"""

pipeline = dlt.pipeline(

pipeline_name="mysql_database",

destination='bigquery',

dataset_name="dlt_contacts",

full_refresh=True,

)

# Load a table incrementally starting at a given date

contact = sql_table(

table="Contact",

incremental=dlt.sources.incremental(

"Created", initial_value=datetime(2024, 4, 1, 0, 0, 0))

),

)

# Run the pipeline

info = pipeline.run(contact, write_disposition="merge")

# print the info

print(info)In this function, created is used as the incremental key to load only new records only the new records from Contact table.

To learn more about the adding incremental configuration to SQL resources, please refer to our documentation here.

Step 3: Running the Pipeline

Now, you can run the pipeline:

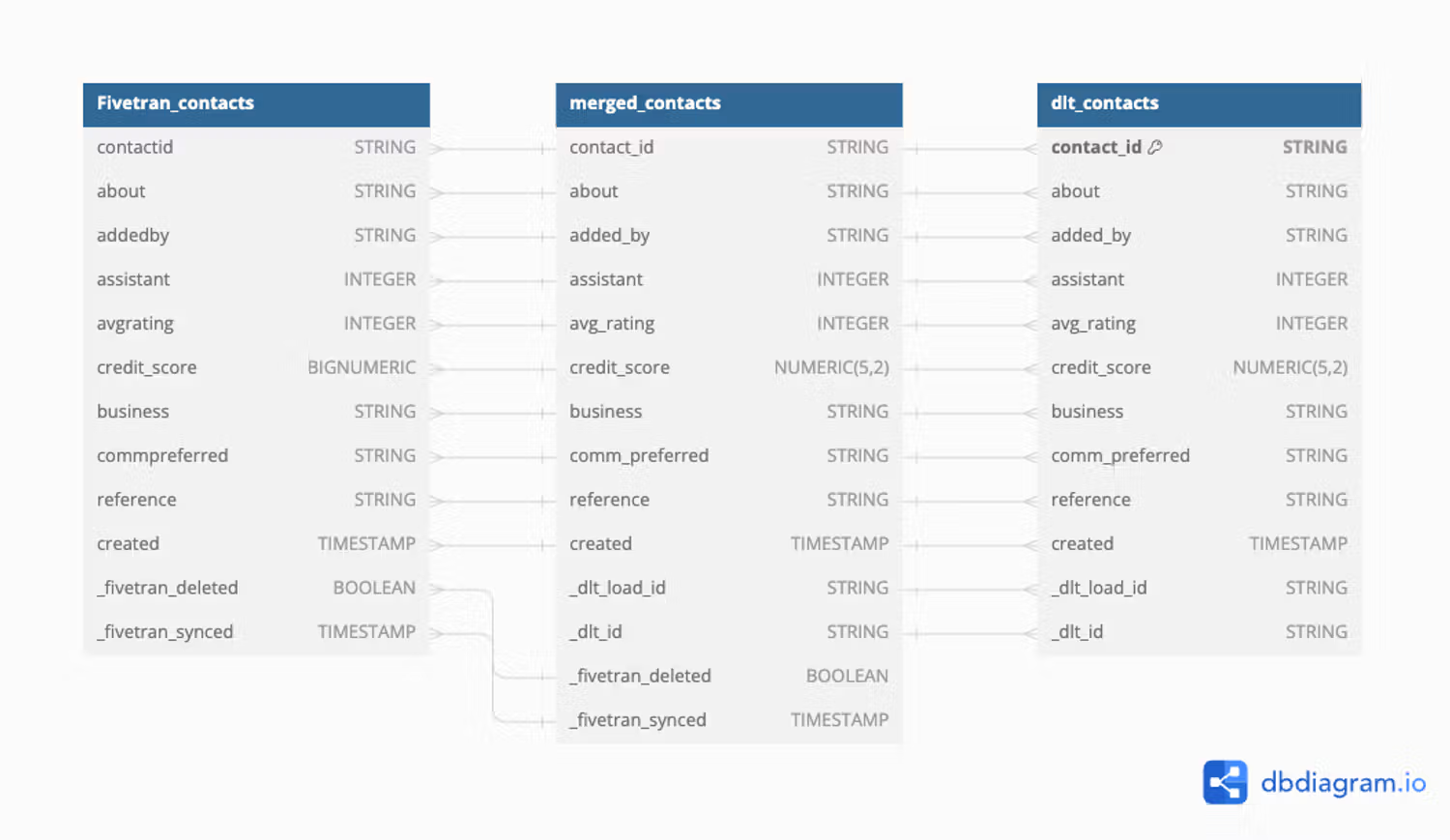

python sql_database_pipeline.pyHere’s the “Contact” table schema created by dlt named dlt_contacts:

DB diagram: dlt_contacts.

Staging layer: Why do we need it?

To create a final view that includes both historical data from Fivetran and new data from dlt, we will treat the Fivetran and dlt tables as staging tables. Staging tables serve as intermediate storage areas where data can be cleaned,

transformed, and validated before being loaded into the final destination. This approach facilitates the merging of datasets from both Fivetran and dlt, ensuring a cohesive and accurate final view.

Creating the unified views

Moving forward, we need to create a unified view where data from Fivetran and dlt converge into a single perspective. This merging process will be orchestrated using SQL views, ensuring an integration of both data sources.

CREATE OR REPLACE VIEW project-id.dataset.merged_contacts AS

WITH fivetran_contacts as (

SELECT

contactid as contact_id,

about,

addedby as added_by,

assistant,

avgrating as avg_rating,

credit_score,

business,

commpreferred as comm_preferred,

reference,

created,

CAST(NULL AS STRING) AS _dlt_load_id, -- dlt metadata

CAST(NULL AS STRING) AS _dlt_id, -- dlt metadata

_fivetran_deleted, -- Fivetran metadata

_fivetran_synced -- Fivetran metadata

FROM

project-id.fivetran_dataset.contact

WHERE created < '2024-07-01T12:30:02' -- datetime when Fivetran was paused

),

dlthub_contacts as (

SELECT

contact_id,

about,

added_by,

assistant,

avg_rating,

credit_score,

business,

comm_preferred,

reference,

created,

_dlt_load_id, -- dlt metadata

_dlt_id, -- dlt metadata

CAST(NULL AS BOOLEAN) AS _fivetran_deleted, -- Fivetran metadata

CAST(NULL AS TIMESTAMP) AS _fivetran_synced -- Fivetran metadata

FROM

project-id.dlt_dataset.contacts

WHERE

created >= '2024-07-01T12:30:02' -- datetime when Fivetran was paused

)

SELECT * FROM fivetran_contacts

UNION ALL

SELECT * FROM dlthub_contacts;This SQL snippet illustrates the merging of deals data from both Fivetran and dlt sources, ensuring a unified view.

dlt, careful attention must be given to naming conventions and data types. You can use the CAST function to change data types as needed, as demonstrated in the example above. Here is the schema for the merged view:

DB diagram: merged_view.

The methodology outlined in this guide ensures a smooth transition of your data pipeline from Fivetran to dlt .

About Metadata

In Fivetran, each row includes two important fields: _fivetran_deleted and _fivetran_synced. The _fivetran_deleted field is a boolean that indicates whether a record has been deleted in the source system. The _fivetran_synced field is a timestamp that marks the last time the record was updated.

To achieve similar functionality in dlt, we employ the SCD2 (Slowly Changing Dimension Type 2) incremental loading strategy. This approach introduces two additional columns to your dataset: _dlt_valid_from and _dlt_valid_to. These columns help us understand the temporal validity of the data, essentially tracking when a record was active in the source system. However, it's important to note that this method is applicable only to new data being ingested by dlt.

dlt, in contrast, assigns two key fields to each row: _dlt_id and _dlt_load_id. The _dlt_id uniquely identifies each row, while the _dlt_load_id is unique to each load operation. This metadata is essential for tracking and auditing data loads, particularly in the context of incremental loading.

Conclusion

In the article above, we explored the process of migrating the "contacts" table from a MySQL database to BigQuery. This methodology can be adapted for other databases like PostgreSQL, SQLite, Oracle, and Microsoft SQL Server, for migrating to various destinations supported by dlt. If you have a new destination in mind, you can either request it or contribute to the development yourself. The principles discussed remain consistent, regardless of the type of data. By following these straightforward steps, you can transition your data ingestion from Fivetran to dlt.

Call to action

Need to move from another vendor to the open source standard dlt and need help with the code generation? Want to spare your efforts and accelerate the migration?

Our Solutions Engineering team offers a paid drop-in replacement service that includes dbt package generation to assist in moving from your old solutions.