Stop writing SQL models and let your data pipeline do it automatically

Aman Gupta,

Aman Gupta,

Data Engineer

If you’ve ever spent hours debugging SQL models due to manual metadata handling, you know how painful it is. Imagine eliminating a chunk of the manual metadata work. That’s what dlt and SQLMesh do, automate metadata, reduce errors, and give you back three to five hours per pipeline.

Instead of wasting hours translating metadata manually, imagine your pipeline doing it for you, instantly.

The secret sauce - Metadata driven automation

Normally, after building a dlt pipeline, you’d move over and start repeating that logic in SQLMesh. Instead, we can run the dlt pipeline and use the metadata that dlt infers from the run, such as the data’s schema, to create a scaffold of SQL in SQLmesh.

This means that your data pipeline could write its own SQL models. With a single command:

sqlmesh init -t dlt --dlt-pipeline <pipeline_name> dialectYou can automatically generate SQL models that serve as a starting point for your transformations. These models mirror your ingested data structure while remaining fully customizable, saving a few hours of time and reducing the risk of errors compared to manually creating models from scratch.

SQLMesh models

With the sqlmesh init -t dlt command, SQLMesh creates models with proper incremental processing and schema-change awareness. It recognizes breaking changes versus non-breaking changes, typically something engineers have to manage manually.

The Bluesky API Example

Inspired by David SJ’s post, this approach transforms raw data from Bluesky API into structured SQL models.

Starting point - Raw data from the Bluesky API:

For this demonstration, we used dlt’s REST API source to pull follower and follow data from the Bluesky API.

import duckdb

import dlt

from dlt.sources.helpers.rest_client import RESTClient

from dlt.sources.helpers.rest_client.paginators import JSONResponseCursorPaginator

# Set blusky handle

actor = "chess.com"

# Bluesky API Client

bluesky_client = RESTClient(

base_url="<https://public.api.bsky.app/xrpc/>",

paginator=JSONResponseCursorPaginator(cursor_path="cursor", cursor_param="cursor"),

)

# Fetch Followers

@dlt.resource

def get_followers(actor: str):

for page in bluesky_client.paginate(

"app.bsky.graph.getFollowers",

params={"actor": actor, "limit": 100},

):

yield page

# Fetch Follows

@dlt.resource

def get_follows(actor: str):

for page in bluesky_client.paginate(

"app.bsky.graph.getFollows",

params={"actor": actor, "limit": 100},

):

yield page

# Initialize dlt pipeline with DuckDB

pipeline = dlt.pipeline(

pipeline_name="bluesky_pipeline",

destination="duckdb",

dataset_name="blusky_dataset",

)

# Run and store data in DuckDB

pipeline.run(get_followers(actor), table_name="followers", write_disposition="append")

pipeline.run(get_follows(actor), table_name="follows", write_disposition="append")Generating SQL models:

After executing the above script, run the following command: SQLMesh automatically produced versioned SQL models for follows and followers. This turned raw API data into SQL models, no manual SQL required.

sqlmesh init -t dlt --dlt-pipeline bluesky_pipeline duckdbBehind the scenes

Metadata-driven architecture

The pipeline automatically captures metadata during ingestion, which SQLMesh uses to understand your data structure. This handover of metadata makes the generation of SQL models possible by inferring the schema automatically from the pipeline run.

Why does this matter?

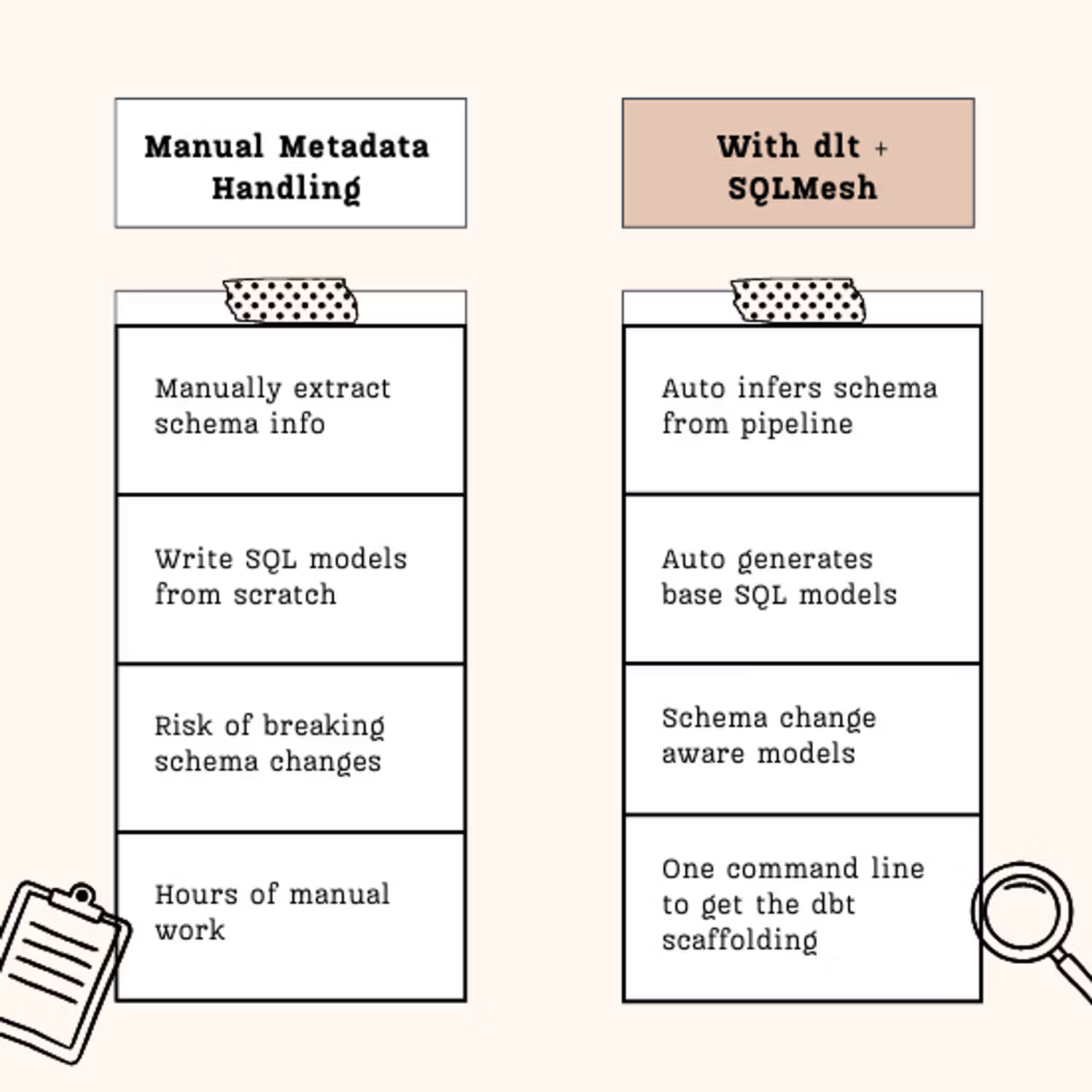

Instead of manually handling metadata, dlt + SQLMesh streamlines the process:

This means you can eliminate tedious, error-prone tasks and focus on refining your SQL models instead of maintaining them manually.

Technical deep dive

Anatomy of generated models

When SQLMesh auto-generates SQL models from your dlt pipeline’s metadata, it produces SQL for your data. Let's break down a typical generated model and highlight its key features:

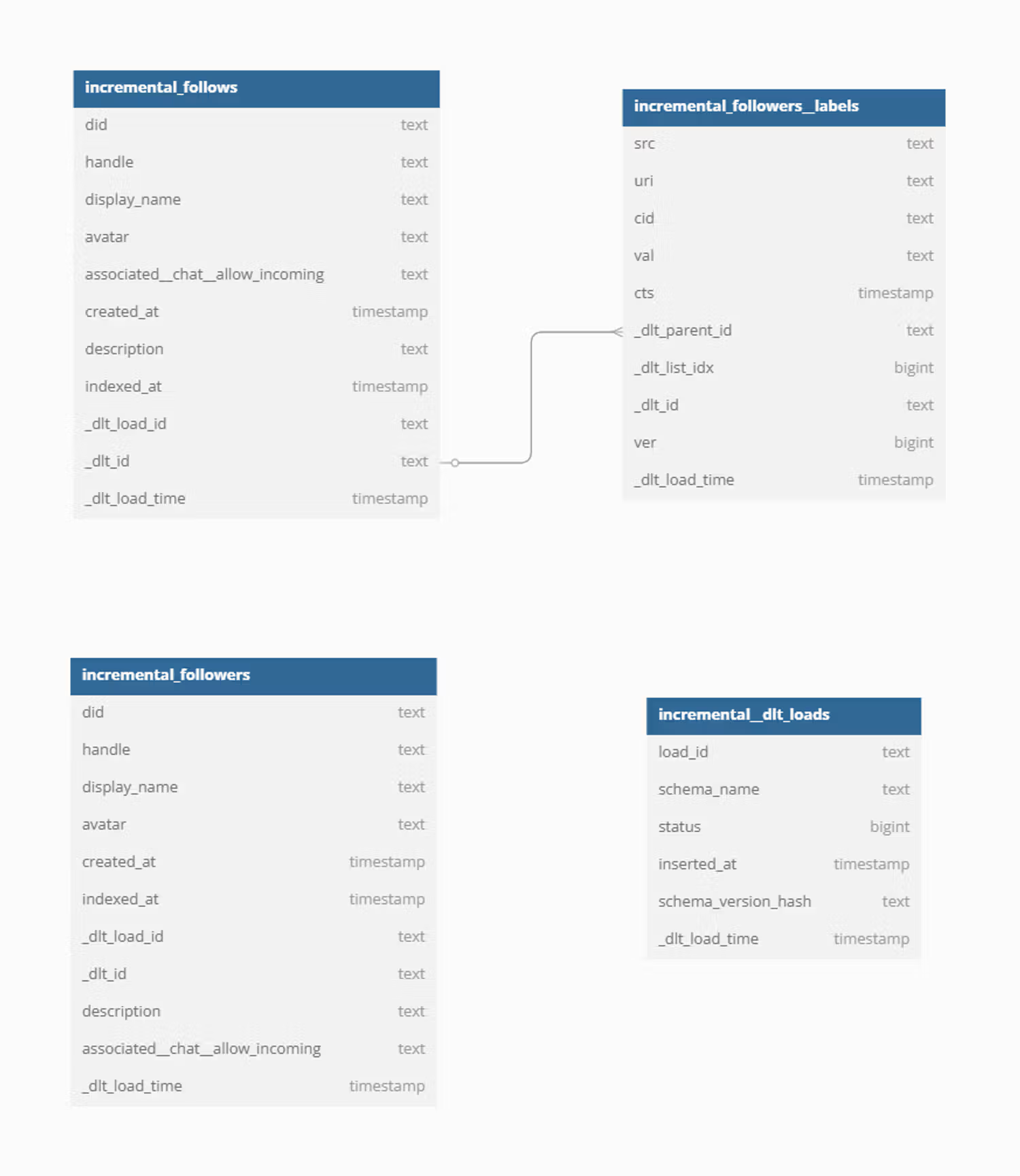

The image displays models generated by the sqlmesh init -t dlt command. Data types inferred by dlt are propagated to the SQL model, providing additional type checking and opportunities for managing updates or merges through a staging layer.

Incremental processing making life easier

dlt tags table load times with a _dlt_load_id, a Unix timestamp. This is reused in the generated SQL models to keep track of the data increments that have already been transformed. It means you get more time focus on adjusting your SQL models instead of setting up incremental loading.

How _dlt_load_id is propogated?

Usually, only parent tables have _dlt_load_id. The incremental_followers__labels model inherits it from its parent table followers through a join condition matching _dlt_parent_id with _dlt_id (read more about it here). This inheritance is taken care by SQLMesh automatically and enables incremental processing.

You can customize the incremental load window in your config.yaml.

SQLMesh also creates system tables: _auto_restatements, _environments, _intervals, etc. to track orchestration and versioning (learn more here).

Making it work for you

Tired of manually managing metadata? Install dlt & SQLMesh now and free up your time:

pip install dlt

pip install sqlmeshStart with a simple pipeline. Once it’s set up, run this one command and watch your pipeline generate SQL models automatically:

sqlmesh init -t dlt --dlt-pipeline my_pipeline postgresThis generates SQL models, just like the method described above.

Best Practices

- Keep your dlt pipeline focused on data extraction and enrichments.

- Use SQLMesh’s virtual environments for safe production and testing. Read more about environments here.

- Version-control your pipeline code.

- Use the

_dlt_load_idcolumn or other suitable columns likecreated_atorupdated_atfor incremental loading, avoiding full reloads.

Common Gotchas

- Always run your dlt pipeline first to generate the metadata.

- Nested structures may need manual optimization.

- Though most schema changes are handled automatically, backward incompatible modifications, such as column renaming, still require manual intervention.

Community and open Source

Both dlt and SQLMesh are open source and have active GitHub communities:

Check out the SQLMesh examples for more sample projects, and join the growing communities to exchange tips and insights.

Try it Yourself

Want to explore further?

Check out the GitHub repositories for both dlt and SQLMesh. You can also look into the SQLMesh examples repository for several standalone projects that help you get started.

Stop wasting time on manual metadata! Try it now, and tell us in our Slack community how much time it saved you.

We’d love to hear your experience!