We've been using LanceDB to make AI development smoother

Adrian Brudaru,

Co-Founder & CDO

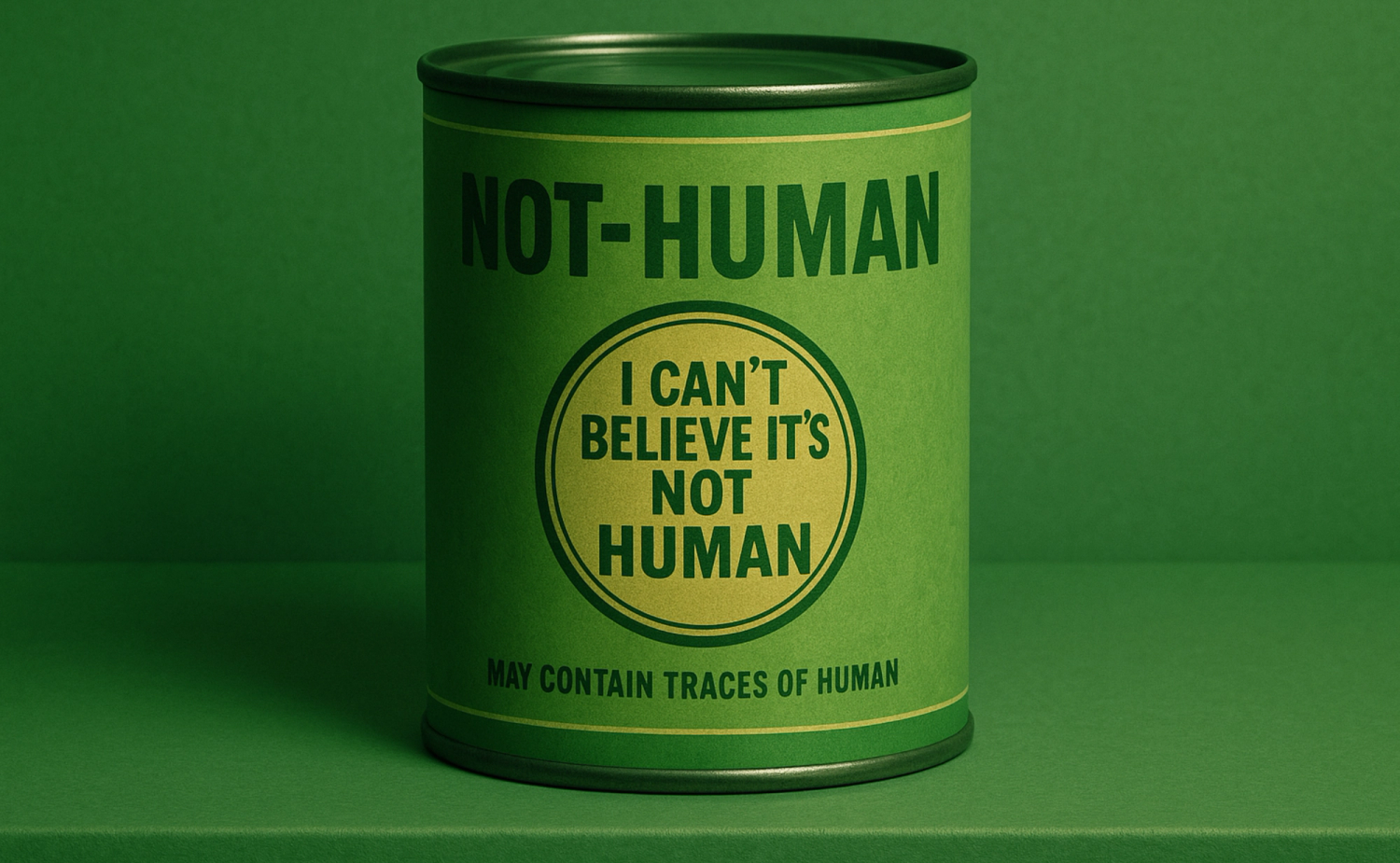

At dltHub, we build AI tools internally, including something we call our "Not-Human" sandbox where we test AI-generated content and code. We've been using LanceDB for months because we needed to store and search across different types of data: structured tables, text documents, embeddings, user feedback.

Most AI stacks are a mess. You end up juggling a vector database, a regular database, some feature store, and a bunch of scripts to move data between them. We wanted something simpler.

Why we started using LanceDB

We started using LanceDB before it launched its "Multimodal Lakehouse". We just needed a database that could handle vectors alongside regular data without making us learn a bunch of new concepts. That made LanceDB a natural choice for fast iteration in our Not-Human project.

By putting together tools like dlt, LanceDB, Cognee and others into a sandbox anyone can use, we are able to quickly iterate and learn what works and what doesn’t in generative AI.

What we liked about it, in the words of our AI Engineer

1. Local and cloud work the same way

"Switching from local to cloud storage was just a parameter when initialising the DB."

You can prototype on your laptop and deploy to production with basically no changes beyond the connection setting.
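
As a rough sketch of what that can look like with the LanceDB Python client: `lancedb.connect` takes a URI, which may be a local directory or an object-store path. The `LANCEDB_URI` variable name, the default path, and the bucket name below are hypothetical, chosen only for illustration.

```python
import os

DEFAULT_LOCAL_URI = "./data/lancedb"  # hypothetical local directory for prototyping


def resolve_db_uri(env=None):
    """Pick the database location from configuration.

    LANCEDB_URI is a made-up variable name for this sketch; unset means local.
    """
    env = os.environ if env is None else env
    return env.get("LANCEDB_URI", DEFAULT_LOCAL_URI)


def open_db(env=None):
    """Connect to LanceDB at whatever location the configuration points to."""
    import lancedb  # imported here so resolve_db_uri works without the package

    return lancedb.connect(resolve_db_uri(env))


# Laptop: no variable set, so data lives in a local directory.
print(resolve_db_uri({}))
# Production: same code, different value (the bucket name is a placeholder).
print(resolve_db_uri({"LANCEDB_URI": "s3://example-bucket/lancedb"}))
```

Everything downstream of `open_db` stays the same; only the URI differs between environments.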

2. It's just a database

"It was still super nice to be able to design my DB entities the way I knew how (structured db) and didn't have to learn anything new."

Most vector databases make you think in terms of "collections" and "indexes." LanceDB just feels like SQL tables that happen to support vectors.

3. You can customise everything

"There was a lot of customisation I could put in, for search and choice of embeddings. Like integrating different things (like different embeddings for text and code) was super easy."

We use different embedding models for documentation and for code in the same table. Most systems make this painful; LanceDB makes it straightforward.
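
One way mixing embedding models in a single table could look, as a minimal sketch under stated assumptions: each row records its `kind`, and the embedder registered for that kind is used both when writing and when querying. The hash-based toy embedders, the `kind` column, and the `snippets` table name are all made up for illustration, and the sketch assumes both models produce vectors of the same dimension so they can share one column.

```python
import hashlib


def _toy_embedding(text, salt, dim=8):
    """Deterministic stand-in for a real embedding model (not semantically meaningful)."""
    digest = hashlib.sha256((salt + text).encode("utf-8")).digest()
    return [b / 255.0 for b in digest[:dim]]


def embed_docs(text):
    """Placeholder for a documentation embedding model."""
    return _toy_embedding(text, salt="docs-model:")


def embed_code(text):
    """Placeholder for a code embedding model."""
    return _toy_embedding(text, salt="code-model:")


# Hypothetical registry: which embedder handles which kind of content.
EMBEDDERS = {"docs": embed_docs, "code": embed_code}


def to_row(kind, text):
    """Build one table row, embedding the text with the model registered for its kind."""
    return {"kind": kind, "text": text, "vector": EMBEDDERS[kind](text)}


def write_rows(db, rows, table_name="snippets"):
    """Store all rows in one table; `db` is assumed to come from lancedb.connect(...)."""
    return db.create_table(table_name, data=rows)


def search(table, kind, query, k=5):
    """Embed the query with the same model as the rows it is compared against."""
    query_vector = EMBEDDERS[kind](query)
    return table.search(query_vector).where(f"kind = '{kind}'").limit(k).to_list()


rows = [
    to_row("docs", "How to configure a pipeline."),
    to_row("code", "def load(): return 42"),
]
```

Filtering on `kind` at query time keeps each query vector from being compared against vectors produced by the other model.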

What's New with the Lakehouse

LanceDB just launched its "Multimodal Lakehouse", which adds Geneva, a processing engine that runs inside the database. Before, we had to write separate Python scripts to transform data before storing it. Now we can define those transformations as part of the database itself: Python functions that run distributed, with checkpointing so a failed job can resume instead of starting over, and smart scheduling that uses idle GPU time for background work.

The real benefit is consolidation and the gains that come from it. Instead of managing separate ETL pipelines, feature stores, and orchestration, you write transformations once and they live with your data. When you need to add a new embedding model or change how you process text, you modify the transformation and it backfills automatically. Debugging is easier because everything is queryable. Experiments are faster because you're not rebuilding pipelines. You deploy one system instead of coordinating multiple services.
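
To make the checkpointing and backfill idea concrete, here is a toy, single-process sketch in plain Python. It is not Geneva's API and says nothing about how Geneva is implemented; the `backfill` function, the `checkpoint` dict, and the simulated crash are all hypothetical and only illustrate how a derived column can be filled in batches and resumed after a failure.

```python
def backfill(rows, column, transform, checkpoint, batch_size=2):
    """Compute `column` for every row in batches, recording progress in `checkpoint`.

    `checkpoint` maps a column name to the index of the next unprocessed row,
    so a rerun after a failure resumes there instead of starting over.
    """
    start = checkpoint.get(column, 0)
    for batch_start in range(start, len(rows), batch_size):
        batch = rows[batch_start:batch_start + batch_size]
        # Compute the whole batch first; if this raises, nothing from it is written.
        values = [transform(row) for row in batch]
        for row, value in zip(batch, values):
            row[column] = value
        checkpoint[column] = batch_start + len(batch)
    return rows


def word_count(row):
    """The transformation that defines the derived column."""
    return len(row["text"].split())


rows = [{"text": t} for t in ["a b", "c", "d e f", "g h", "i"]]
checkpoint = {}
calls = {"n": 0}


def flaky_word_count(row):
    """Same transformation, but crashes on its fourth call to simulate a worker failure."""
    calls["n"] += 1
    if calls["n"] == 4:
        raise RuntimeError("simulated worker crash")
    return word_count(row)


try:
    backfill(rows, "word_count", flaky_word_count, checkpoint)
except RuntimeError:
    pass  # the first batch is saved; the second batch was never written

# Rerun with the healthy transformation: it picks up at the checkpoint.
backfill(rows, "word_count", word_count, checkpoint)
print([row["word_count"] for row in rows])
```

Changing the transformation and resetting the checkpoint for that column would recompute it for every row, which is the toy equivalent of a backfill after swapping an embedding model.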

Our Take

Good infrastructure gets out of your way and lets you spend time building features, not managing databases. LanceDB does that for us.

If you're building AI systems and tired of juggling multiple databases, LanceDB's Multimodal Lakehouse is worth checking out.