Building Engine-Agnostic Data Stacks

Adrian Brudaru,

Adrian Brudaru,

Co-Founder & CDO

The Multi-Engine problem

Most data teams end up with a mixed stack: Spark for large-scale processing, DuckDB for fast local analytics, maybe Snowflake for business intelligence. Each tool is great at what it does, but they don't play well together.

You know the drill - copy data between systems, rewrite the same logic in different syntaxes, get locked into vendor ecosystems. We had engines that couldn't coordinate, so we built workarounds that created more problems.

Iceberg started solving this by letting engines safely share the same tables. But that's only half the solution - your data might be portable, but your code still isn't.

Now we're seeing the other half emerge: tools that let you write analytical code once and run it anywhere. Combined with Iceberg, this creates something genuinely useful - both your data and your business logic become decoupled from specific compute engines.

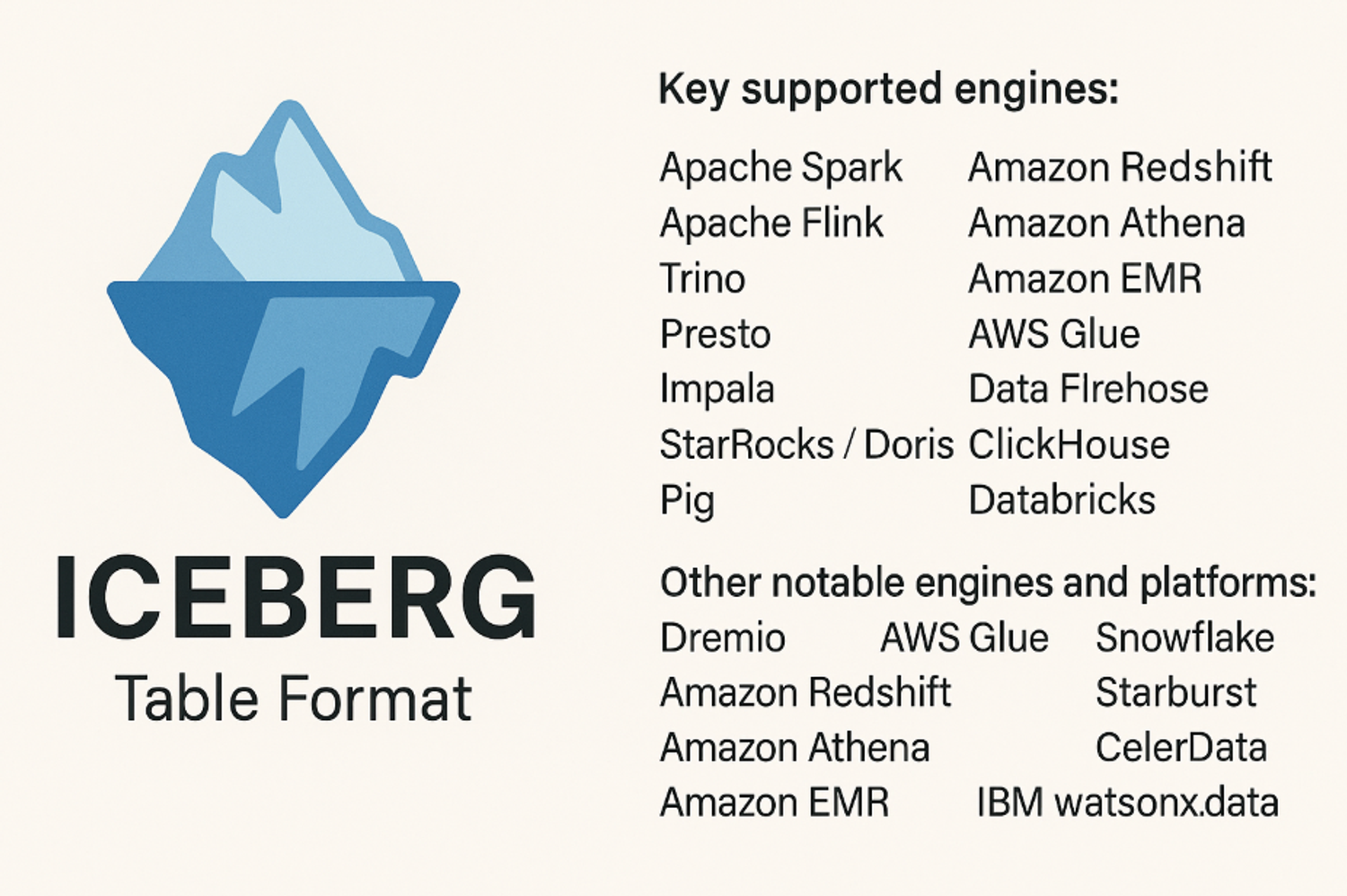

Iceberg: Decoupling storage

Iceberg solved the first half by creating reliable shared storage. Before Iceberg, you couldn't safely have multiple engines reading and writing the same data simultaneously. Ryan Blue calls this a "foundational change" because "data warehouse storage had never been reliably shared."

Iceberg brought database fundamentals to data lakes: ACID transactions, schema evolution, time travel, and multi-engine coordination. Suddenly, Spark, Trino, DuckDB, and others could safely share the same tables.

But Iceberg alone only solves half the problem. Your data can live anywhere, but your code is still tied to specific engines.

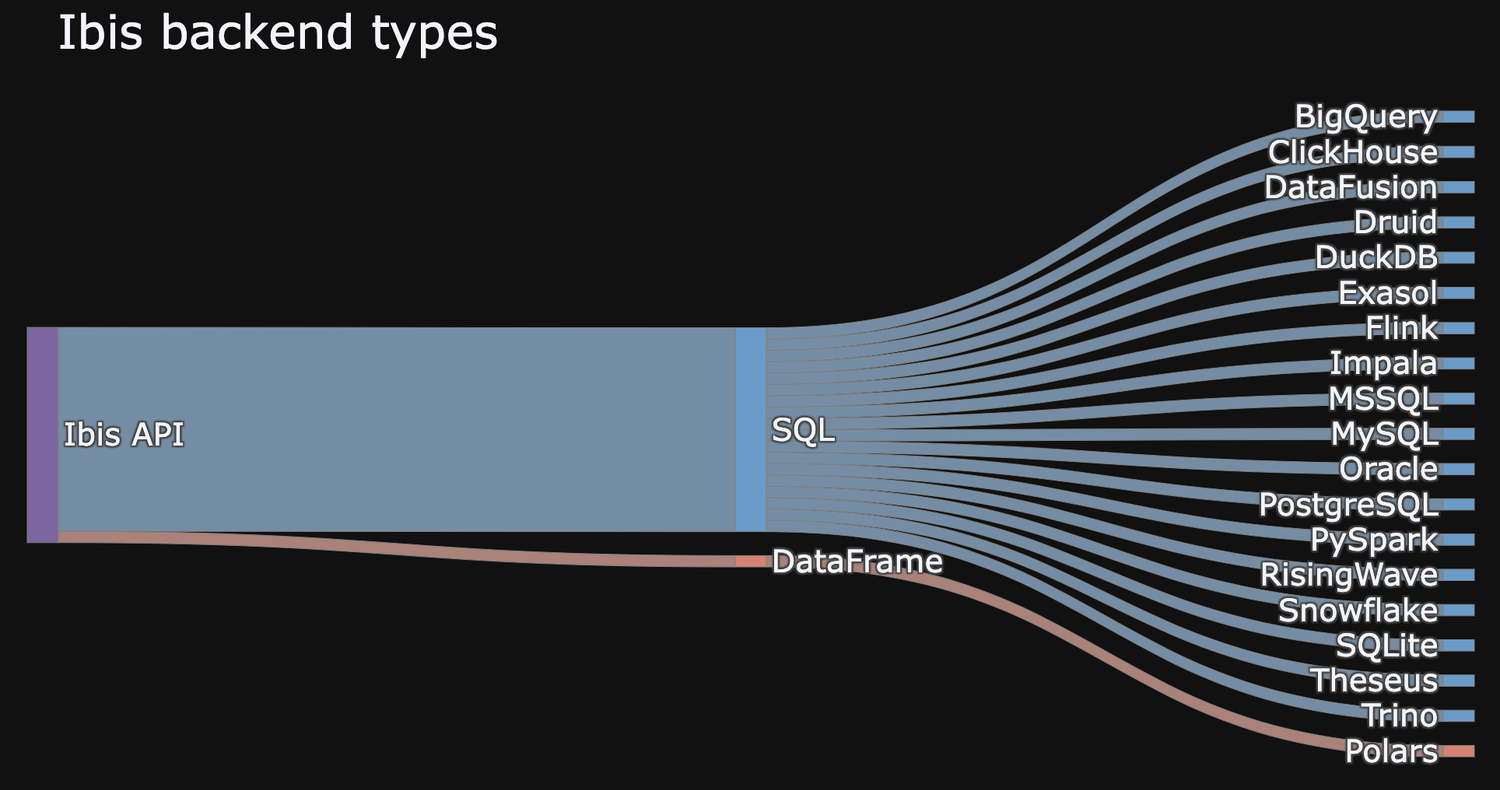

Ibis: Portable Code

This is where tools like Ibis become useful. Ibis provides engine-agnostic analytical code: write your transformations once, and they execute on DuckDB, BigQuery, Snowflake, Spark, or any other supported backend without modification.

Combined with Iceberg, you get something remarkable: both your data and your business logic become decoupled from the compute.

Why this is a good thing

When you're not locked into a single engine, you can make decisions based on what actually works best for each job. Need fast local development? Use DuckDB. Large batch processing? Spark. Sharing results with business users? Whatever they already have access to. The bigger benefit is that you stop spending time on integration plumbing and start spending it on the actual work. Less time rewriting queries, less time copying data, less time debugging vendor-specific quirks. Major vendors are adding Iceberg support because they have to - customers want the flexibility.

Tools like Ibis are expanding backend coverage. The ecosystem is moving toward portability because that's what people actually need. This is about reducing the tax you pay for using multiple tools well which is a problem worth solving because multiple tools do make sense for multiple use cases.

Explore with us how to make this future reality

Try our OSS features or let us know if you want to try dlt+ early access.