Dumpster diving for data: The MongoDB experience

Adrian Brudaru,

Adrian Brudaru,

Co-Founder & CDO

💡 TIL: BSON stands for binary JSON, not for BS Object Notation

What will you learn from this article?

The scope of this article is to look at and discuss the process of extracting data from MongoDB and making it available in a SQL store. We will focus on the difficulties around ingesting the data into a SQL database, while we will look at MongoDB only from a source perspective.

The focus is on the data in its different states, not the underlying technologies.

Why the harsh title?

The title may sound harsh, but it accurately reflects the challenges often encountered when dealing with MongoDB.

Also referred to as /dev/null, Mongo offers a flexible schema and document-based storage can lead to data inconsistencies and complexities, resembling a metaphorical "dumpster" where data might be scattered and difficult to organise.

Analytical consumers of MongoDB will be forced to invest extra effort in data modelling, schema design, and querying optimisation to ensure data quality and retrieval efficiency.

Is this a common problem?

It's inevitable. An analytical system has multiple requirements that force us to move data from places such as MongoDB to a SQL store.

Let’s look at those requirements:

- Business User access: Most data is used by business users to improve operations. Business users access data via dashboards, or pivot-table like interfaces that enable them to do custom aggregations. The tooling that exists to do this is created for SQL, as a place where relational queries can be executed.

- Ecosystem of integrations and tools: In analytics, having a diverse ecosystem of integrations and tools is crucial. SQL databases seamlessly fit into this ecosystem, offering compatibility with a wide array of data warehousing, data integration, and data governance tools. This comprehensive ecosystem enhances the analytics infrastructure, ensuring that data can be efficiently managed, transformed, and accessed by various stakeholders.

- Standardization for consistency: Maintaining data consistency is paramount in analytics. SQL's widespread adoption and standardized query language enable analysts and data professionals to work with data consistently across different systems and platforms. This standardization ensures that data is interpreted and manipulated uniformly, reducing the risk of errors and discrepancies in analytical processes.

- Data transformation & modelling capabilities: Effective data transformation and modelling are prerequisites for meaningful analytics. SQL provides a robust toolkit for these tasks, enabling data professionals to perform complex operations such as joins, filtering, aggregation, and intricate calculations. These capabilities are essential for preparing raw data into structured formats that can be readily used for in-depth analysis, reporting, and decision-making in the analytics domain.

So, after looking at what is needed for analytics, it becomes clear that going off the beaten path will lead to some pretty gnarly limitations and outcomes.

Mongo in particular: BSON vs JSON

How is Mongo different from semi-structure data like JSON, and is MongoDB particularly hard to ingest from?

BSON is for performance, JSON is for transmission.

The differences stem from the fact that MongoDB uses BSON under the hood, as opposed to JSON. BSON is a binary object notation optimised for performance, while JSON is a standard interchange format.

Similarly, Mongo also supports custom and more complex data types, such as geospatial, dates, regex, etc, that JSON does not. Additionally, BSON supports character encodings. All these benefits enable MongoDB to be a faster and better database, but the cost is an additional hurdles that must be crossed before we can use this data elsewhere.

So how do you solve these issues? Well, hopefully your development team didn't go overboard, and you can just simply convert the BSON to JSON. If you are unlucky, you will need to create your own mappers that follow whatever your team did.

From JSON to DB

Once you have converted your Mongo BSON into JSON, you are able to use its wide support to have it ingested.

JSON enjoys widespread support across various data processing tools and systems, making it a versatile choice for data ingestion. With your data in JSON, you can seamlessly integrate it into your database, leveraging its compatibility to efficiently manage and analyze your information.

Cleaning and typing

Data typing is essential in ensuring data integrity. It involves assigning appropriate data types to JSON fields, like converting numerical values into integers or floats, representing dates as datetime types, and labeling text data as string data types. This step guarantees that the database accurately stores and processes information.

Do we unpack?

The choice between unpacking nested JSON into tables or keeping it as JSON depends on your specific needs. Unpacking enhances query performance, indexing, and data manipulation within relational databases. However, native JSON support in some databases can suffice for simpler scenarios, preserving the original hierarchical structure. Your decision should align with data analysis, retrieval requirements, and your chosen database's capabilities.

Simply put, if you plan to use the data, you should probably unpack it to benefit from what relational dbs have to offer. But if you simply need to store and retrieve the JSON, do not convert it.

Querying unpacked data is cheaper and more robust than maintaining wet json_extract() code

Unpacking nested JSON into separate tables within a relational database is essential for robustness and query efficiency. Relational databases are optimized for tabular data and typed columns, making it challenging and error prone to handle complex nested structures directly.

By breaking down nested JSON into separate tables and establishing relationships through foreign keys, the data becomes more structured, ensuring robust data management and enhancing query efficiency. This simplification streamlines data retrieval and manipulation, aligning it with standard SQL operations for efficient and effective use.

Start using dlt to load Mongo to SQL today

To help with the challenges of loading Mongo data, we created a dlt source that reads your Mongo collections and throws flat SQL tables on the other side.

The benefit of using dlt is that you get flat tables in your SQL database that adapt to match the Mongo schema.

Here's a code explanation of how it works under the hood:

- It grabs data from Mongo and turns it into JSON.

- From JSON, dlt leverages schema inference and evolution to make sense of the data. Here is an example of how this nested data could look:

{

"id": 1,

"name": "Alice",

"job": {

"company": "ScaleVector",

"title": "Data Scientist"

},

"children": [

{

"id": 1,

"name": "Eve"

},

{

"id": 2,

"name": "Wendy"

}

]

}- We can load the data to a supported destination declaratively:

import dlt

pipeline = dlt.pipeline(

pipeline_name='from_json',

destination='duckdb',

dataset_name='mydata',

full_refresh=True,

)

# dlt works with lists of dicts, so wrap data to the list

load_info = pipeline.run([data], table_name="json_data")

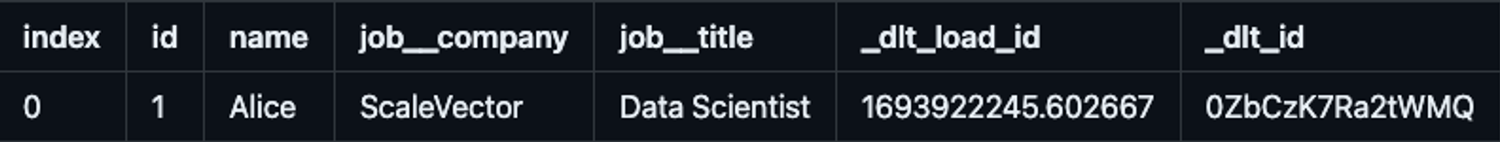

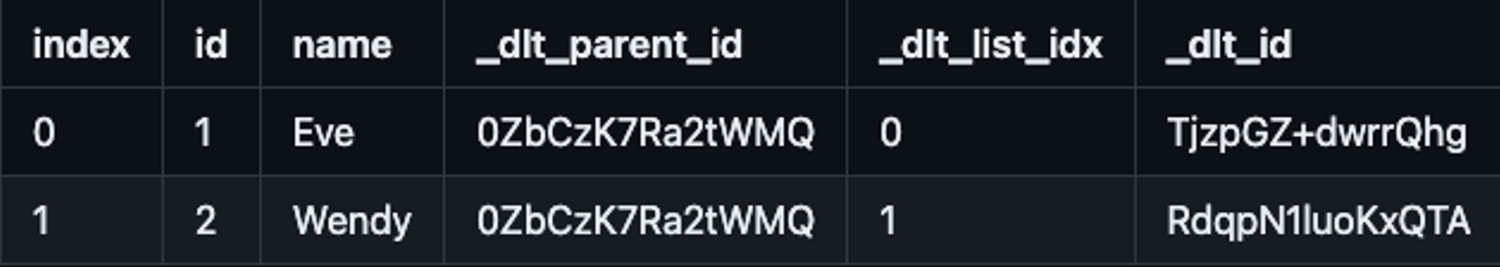

print(load_info)- Now we can use the data, these are two tables:

- Note that the original JSON got unpacked into tables that are now joinable via generated keys

child._dlt_parent_id = parent._dlt_id.

Read more about it here: Mongo verified source.

What are you waiting for?

- Dive into our Getting Started.

Join the ⭐Slack Community⭐ for discussion and help!