Are you moving the right data? Write. Audit. Publish. (WAP)

Aman Gupta,


Data Engineer

The Write. Audit. Publish. (WAP) framework borrows a discipline from software engineering: write in isolation; audit for correctness, quality, and compliance; then publish with confidence. But can data engineering really follow suit?

Absolutely.

WAP in data workflows

1️⃣ Write → Controlled data ingestion

Load data into an isolated staging area, inaccessible to downstream systems.

2️⃣ Audit → Enforce automated quality and compliance checks

Enforce schema and data quality checks before data goes live. Define data contracts and validation rules to prevent downstream breakage.

3️⃣ Publish → Deliver validated, trustworthy data

Only audited data reaches production, ensuring downstream systems see verified results.

This isn’t theory. It’s how data teams prevent breakages and catch errors upstream before they hit production.
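The Audit step's "data contracts and validation rules" can be sketched in plain Python. This is a minimal illustration and not dlt's own contract API. The `SCHEMA` and `RULES` dictionaries and the `audit` function are hypothetical names. The 9–12 °C rule is taken from the soil example later in this post. A row must match the contracted columns and types before its value rules are evaluated.

```python
from typing import Any, Callable

Row = dict[str, Any]

# Hypothetical contract for the soil example: column names/types plus value rules.
SCHEMA: dict[str, type] = {"city": str, "temperature": float}
RULES: dict[str, Callable[[Row], bool]] = {
    "city must not be empty": lambda r: bool(r["city"].strip()),
    "temperature must be within 9-12 °C": lambda r: 9 <= r["temperature"] <= 12,
}


def audit(rows: list[Row]) -> list[str]:
    """Return contract violations; an empty list means the batch may be published."""
    violations: list[str] = []
    for i, row in enumerate(rows):
        # Schema check: the row must have exactly the contracted columns...
        if set(row) != set(SCHEMA):
            violations.append(f"row {i}: columns {sorted(row)} do not match the contract")
            continue
        # ...and every column must have the contracted type.
        bad_types = [col for col, typ in SCHEMA.items() if not isinstance(row[col], typ)]
        if bad_types:
            violations.append(f"row {i}: wrong type for {', '.join(bad_types)}")
            continue
        # Value rules only run on rows that passed the schema check.
        violations += [f"row {i}: {name}" for name, rule in RULES.items() if not rule(row)]
    return violations


batch = [
    {"city": "Chicago", "temperature": 11.2},
    {"city": "Phoenix", "temperature": 48.6},
    {"city": "Houston", "temperature": "9.8"},
]
for violation in audit(batch):
    print(violation)
```

In this setup, publishing is gated on `audit(batch)` returning an empty list. The type check is deliberately strict, so an integer such as `10` would fail the `float` contract.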

Experts like Julien Hurault advocate the WAP (Write-Audit-Publish) pattern, and he’s right to. Many data workflows look like they follow it: you run `dbt run`, then `dbt test`. But by then, the data is already in production, so if something breaks, you’re cleaning up after the fact. Julien’s approach is simple: materialize the data in a temporary environment, run your tests there, and publish only when everything checks out. That way, you catch problems upstream instead of when it’s too late.
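The same idea can be sketched without dbt. Below is a minimal illustration using Python's built-in `sqlite3`. It is not Julien's implementation, and the `soil_staging` / `soil_reports` tables and the `write_audit_publish` function are hypothetical. The write goes to a staging table, the audit is a SQL query against that table, and the publish step is a rename wrapped in one transaction.

```python
import sqlite3

# Assumption: the connection is opened with isolation_level=None, so the
# explicit BEGIN/COMMIT below fully control the publish transaction.


def write_audit_publish(conn: sqlite3.Connection, rows: list[tuple[str, float]]) -> bool:
    """Stage rows, audit them, and publish to production only if every check passes."""
    # WRITE: materialize into a staging table that downstream consumers never read.
    conn.execute("DROP TABLE IF EXISTS soil_staging")
    conn.execute("CREATE TABLE soil_staging (city TEXT NOT NULL, temperature REAL NOT NULL)")
    conn.executemany("INSERT INTO soil_staging VALUES (?, ?)", rows)

    # AUDIT: count rows that break the 9-12 °C expectation.
    (failures,) = conn.execute(
        "SELECT COUNT(*) FROM soil_staging WHERE temperature NOT BETWEEN 9 AND 12"
    ).fetchone()
    if failures:
        return False  # production is untouched; staging is kept for inspection

    # PUBLISH: swap staging into production in one transaction, so readers
    # see either the old table or the new one, never a partial load.
    conn.execute("BEGIN")
    try:
        conn.execute("DROP TABLE IF EXISTS soil_reports")
        conn.execute("ALTER TABLE soil_staging RENAME TO soil_reports")
        conn.execute("COMMIT")
    except sqlite3.Error:
        conn.execute("ROLLBACK")
        raise
    return True


conn = sqlite3.connect(":memory:", isolation_level=None)
print(write_audit_publish(conn, [("Chicago", 11.2), ("Houston", 9.8)]))    # True
print(write_audit_publish(conn, [("Chicago", 11.2), ("Phoenix", 48.6)]))  # False
print(conn.execute("SELECT COUNT(*) FROM soil_reports").fetchone()[0])    # 2
```

The second batch fails the audit, so `soil_reports` still holds the two rows from the first, clean batch.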

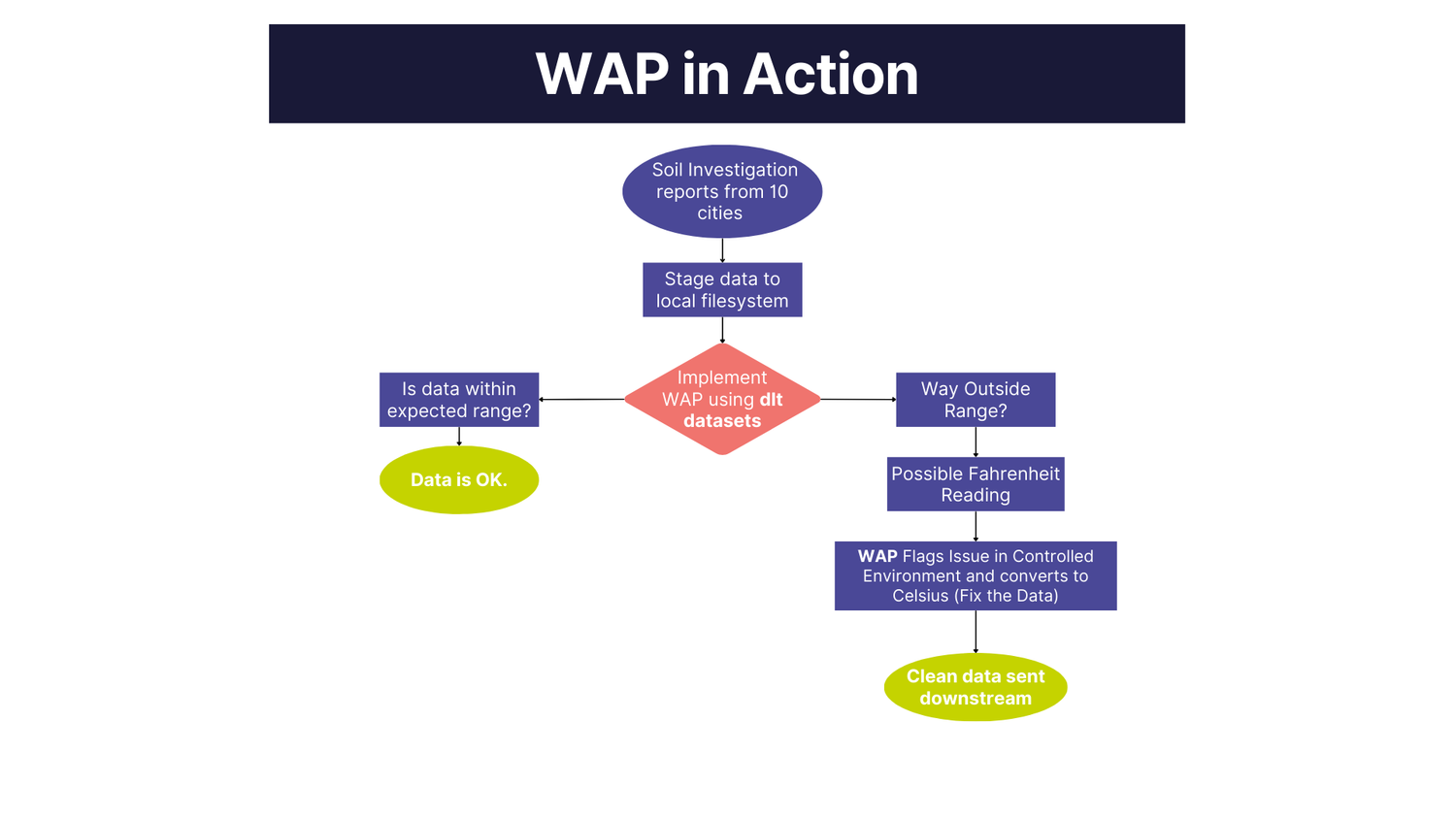

To break this down, I’ve put together a quick workflow using a hypothetical scenario.

Let’s say we have soil investigation reports from ten cities where soil temperatures typically range between 9 and 12 °C. If a reading falls far outside that range, there’s a good chance it was recorded in Fahrenheit instead: 9–12 °C corresponds to roughly 48–54 °F. That’s a classic data quality issue, and one that WAP handles well. By testing the data in a controlled environment first, we can catch these errors early and convert them before they cause problems downstream.
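The conversion the audit relies on is the standard Fahrenheit-to-Celsius formula. Applied to the two suspect readings in the sample data, both results land inside the expected 9–12 °C band, which is what makes the Fahrenheit explanation plausible:

```latex
\begin{aligned}
C &= (F - 32) \times \tfrac{5}{9} \\
50.0\,^{\circ}\mathrm{F} &\;\to\; (50.0 - 32) \times \tfrac{5}{9} = 10.0\,^{\circ}\mathrm{C} \\
48.6\,^{\circ}\mathrm{F} &\;\to\; (48.6 - 32) \times \tfrac{5}{9} \approx 9.22\,^{\circ}\mathrm{C}
\end{aligned}
```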

Here’s how that looks in code:

0. Install the necessary dependencies.

```python
!pip install "dlt[filesystem]" pyarrow_hotfix --quiet
```

1. Write: load the soil report data into the staging area. Here, we use dlt’s filesystem destination.

```python
import os

import dlt
import pandas as pd  # dlt's .df() returns a pandas DataFrame

# Assumption: the filesystem destination reads its bucket_url from config;
# here it points at a local folder. Replace it with your own path or bucket URL.
os.environ["DESTINATION__FILESYSTEM__BUCKET_URL"] = "file:///tmp/soil_wap"

# 1️⃣ WRITE: Load raw data to the filesystem (the staging area)
write_pipeline = dlt.pipeline(
    pipeline_name="soil_data_raw",
    destination="filesystem",
    dataset_name="raw_data",
)

# Sample data source with potential quality issues:
# some temperatures are in Fahrenheit (outside the 9-12 °C range)
data = [
    {"city": "New York", "temperature": 10.5},
    {"city": "Chicago", "temperature": 11.2},
    {"city": "Los Angeles", "temperature": 50.0},  # Fahrenheit
    {"city": "Houston", "temperature": 9.8},
    {"city": "Phoenix", "temperature": 48.6},  # Fahrenheit
]

# Load raw data to the staging area
load_info = write_pipeline.run(data, table_name="soil_investigation_report")
print("Raw data written to filesystem")
```

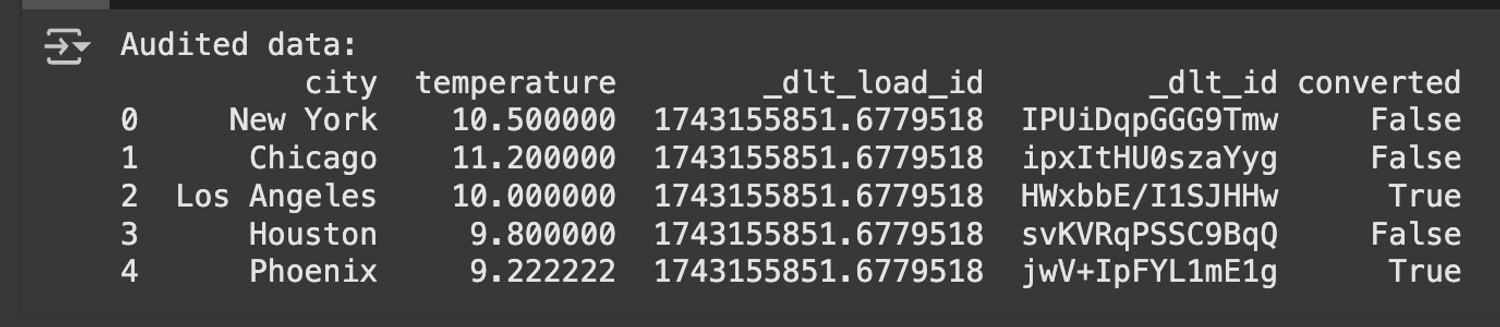

2. Audit: use dlt datasets to read the staged data, then convert any outliers from Fahrenheit to Celsius.

```python
# 2️⃣ AUDIT: Access the staged dataset and perform quality checks

# Get the readable dataset from the pipeline
dataset = write_pipeline.dataset()

# Access the table as a ReadableRelation
soil_relation = dataset.soil_investigation_report

# Load data into a pandas DataFrame for auditing
df = soil_relation.df()
# print("Original data:")
# print(df)

# Flag temperatures outside the normal range (9-12 °C) as likely Fahrenheit
out_of_range = (df["temperature"] < 9) | (df["temperature"] > 12)

# Convert the flagged readings to Celsius and record which rows were changed
df.loc[out_of_range, "temperature"] = (df.loc[out_of_range, "temperature"] - 32) * 5 / 9
df["converted"] = out_of_range

print("Audited data:")
print(df)
```

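In the audited data, the two suspect readings (Los Angeles at 50.0 and Phoenix at 48.6) are converted to 10.0 °C and about 9.22 °C and marked `converted = True`. The other three rows are unchanged.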
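One caveat about the audit code above: it treats every reading outside 9–12 °C as Fahrenheit, so a genuine 8.5 °C reading would be "converted" to about -13 °C. A stricter variant, sketched below in plain Python, converts a reading only when the Celsius result lands back in range. Otherwise it rejects the reading so the batch is not published. The helper names (`f_to_c`, `audit_reading`) and the 8.5 reading are hypothetical.

```python
LOW_C, HIGH_C = 9.0, 12.0  # expected soil temperature band from the example


def f_to_c(fahrenheit: float) -> float:
    """Convert a Fahrenheit reading to Celsius."""
    return (fahrenheit - 32) * 5 / 9


def audit_reading(temp: float) -> tuple[float, str]:
    """Classify one reading as 'ok', 'converted', or 'rejected'."""
    if LOW_C <= temp <= HIGH_C:
        return temp, "ok"
    celsius = f_to_c(temp)
    if LOW_C <= celsius <= HIGH_C:
        # Treat the value as Fahrenheit only if converting actually explains it.
        return celsius, "converted"
    # Neither unit explains the value: keep it as-is and flag it.
    return temp, "rejected"


# Sample readings; the 8.5 value is made up to show the rejection path.
readings = {"New York": 10.5, "Los Angeles": 50.0, "Phoenix": 48.6, "Hypothetical": 8.5}
audited = {city: audit_reading(t) for city, t in readings.items()}
for city, (temp, status) in audited.items():
    print(f"{city:12} {temp:6.2f} {status}")

# WAP gate: any rejected reading blocks the publish step.
if any(status == "rejected" for _, status in audited.values()):
    print("Audit failed: not publishing")
```

Rejecting rather than guessing keeps the audit honest. A value that neither unit explains probably needs a human to look at it.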

3. Publish: load the audited data into the production dataset.

```python
# 3️⃣ PUBLISH: Load the audited data into the production dataset
# This example publishes to the filesystem. To publish to BigQuery instead,
# install dlt[bigquery], set destination="bigquery", and configure
# BigQuery credentials for dlt.
publish_pipeline = dlt.pipeline(
    pipeline_name="soil_data_production",
    destination="filesystem",
    dataset_name="soil_data",
)

publish_info = publish_pipeline.run(df, table_name="validated_soil_reports")
print("Data published to filesystem successfully!")
```

The previous example showed a simple way to apply the WAP pattern with dlt datasets. But that’s just one approach; there are plenty of other ways to make WAP work for you, such as:

- Implement schema contracts to establish rules that define your data structure and track how your schema evolves.

- Run data quality checks, such as value ranges, null values, and consistency rules.

- Run unit tests on your code, checking each function on its own (for example, the Fahrenheit-to-Celsius conversion).

- Run integration tests to make sure all parts of your data pipeline work well together.
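For the unit-test idea, here is a minimal sketch using Python's built-in `unittest`. It pulls the conversion into its own pure function (the name `fahrenheit_to_celsius` is hypothetical), so you can check it against known reference points independently of any pipeline run. The `argv`/`exit=False` arguments let it run inside a notebook like the one above.

```python
import unittest


def fahrenheit_to_celsius(fahrenheit: float) -> float:
    """Pure conversion function, kept separate so it can be tested on its own."""
    return (fahrenheit - 32) * 5 / 9


class FahrenheitToCelsiusTest(unittest.TestCase):
    def test_freezing_point(self):
        self.assertEqual(fahrenheit_to_celsius(32), 0)

    def test_boiling_point(self):
        self.assertAlmostEqual(fahrenheit_to_celsius(212), 100)

    def test_sample_reading_lands_in_expected_band(self):
        # The Los Angeles reading from the soil example should convert to 10 °C.
        self.assertAlmostEqual(fahrenheit_to_celsius(50.0), 10.0)

    def test_minus_forty_is_the_same_in_both_scales(self):
        self.assertAlmostEqual(fahrenheit_to_celsius(-40), -40)


if __name__ == "__main__":
    unittest.main(argv=["ignored"], exit=False)
```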

Conclusion

WAP isn’t just a nice-to-have. It keeps bad data from sneaking into production.

Curious how this looks end to end?

WAP is the habit. The data quality lifecycle is the habitat.

Read more about the data quality lifecycle here.

Questions? Connect with us on Slack.