Why Iceberg + Python is the Future of Open Data Lakes

Adrian Brudaru,

Adrian Brudaru,

Co-Founder & CDO

Why Data Engineers Love Apache Iceberg with Python

If you’ve spent any time in the data engineering community, you’ve probably noticed something: people don’t just use Apache Iceberg: they rally around it. They talk about it like it’s a revolution, not just a technology.

And it kind of is.

Iceberg takes everything that made data lakes painful and fixes them. You get ACID transactions, schema evolution that actually works, and a table format that doesn’t lock you into a single vendor.

And it’s really open. Open standard, open ecosystem, open choice of engines.

That’s why companies like Netflix, Apple, and Adobe bet on Iceberg early. It’s why Trino, Snowflake, and even BigQuery now support it.

So what makes Iceberg different? And more importantly, how do you start using it without ripping up your entire stack? Let’s get into it.

Why Apache Iceberg is Inevitable

For years, data was trapped in business tools, rigid warehouses, slow ETL, and SQL-first platforms built for reporting, not innovation. Meanwhile, data science broke free.

First, machine learning went Python-first and composable: pick your libraries, swap in new models, run anywhere. Then AI took it further, demanding scalable, flexible compute that could pull from any source, retrain on fresh data, and adapt in real time.

Now, the rest of the data industry has to catch up. Legacy table formats weren’t built for this world, but Iceberg is. Here are some reasons why it's needed:

- Works across engines: Trino, Snowflake, DuckDB, Spark. No silos, no lock-in.

- AI and analytics stay consistent with ACID transactions and time-travel.

- Schema evolution is painless: no rewrites, no broken pipelines.

- Compute and storage are fully decoupled: query the same data from anywhere.

ML and AI forced data to evolve. The old ways don’t scale. Composable, open, and interoperable wins, just like it always has.

Pythonic Composability Transformed AI, And Is Now Reshaping Data Engineering

Machine learning and AI moved fast because they were open. Researchers built on shared knowledge, swapped out models, and refined frameworks like TensorFlow, PyTorch, and Hugging Face. Each improvement stacked on the last, accelerating progress. That’s how we went from simple classifiers to LLMs in just a few years.

Data engineering, by contrast, was stuck. Rigid architectures, vendor lock-in, and Spark whether you liked it or not. Warehouses were built for dashboards, data lakes were bolted onto Hive, and Python teams had to navigate Java-heavy ecosystems that never really fit. Progress moved at the speed of enterprise roadmaps, not research.

Now, the same forces that made AI composable, iterative, and fast-moving are reshaping data infrastructure:

- DeepSeek’s Smallpond combines DuckDB and 3FS for AI-ready data processing.

- Iceberg acts as the open table layer across Trino, Snowflake, and Spark, eliminating silos.

- dlt (data load tool) simplifies pythonic ETL into Iceberg with a declarative, lightweight approach.

The Machine learning and AI space thrived because no one vendor was able to hold it back. Now, that innovation is here to swallow data engineering. The data stacks will have to either be modernised, or remain unusable in the LLM age.

AI’s Cognitive Workflows Demand Efficient, Structured Data: Iceberg Makes It Possible

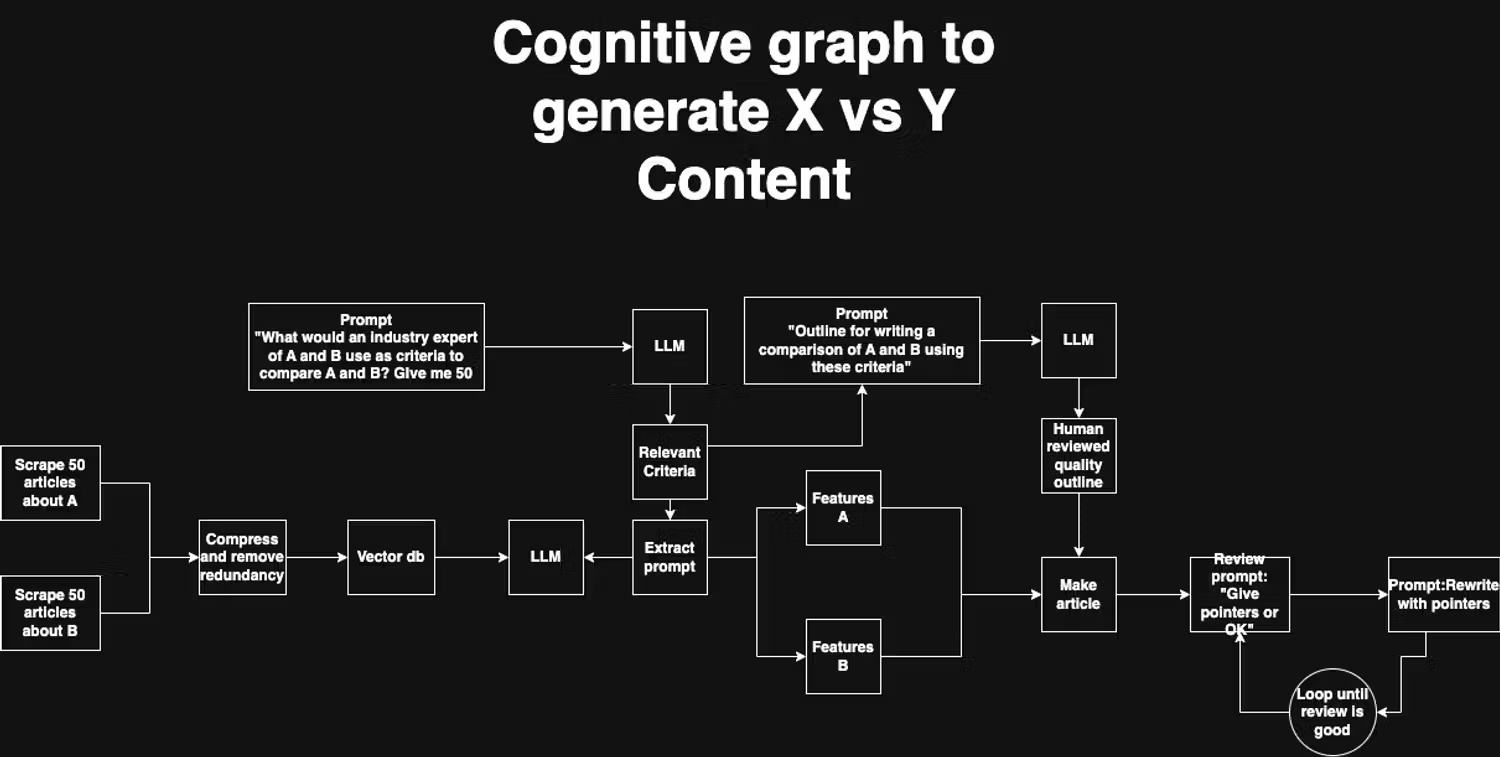

AI systems, especially LLMs, don’t just consume data: they loop, cross-reference, and consolidate information constantly. This mirrors how the human brain structures memories, using past knowledge to reason, predict, and correct itself.

These recursive processes require fast, reliable access to structured data, not just vectorized embeddings - as ultimately demonstrated by DeepSeek.

That’s where Iceberg changes the game. While unstructured data powers AI creativity, structured data is critical for truth-checking and logical consistency. It’s cheaper, faster, and doesn’t require vectorisation for retrieval, making it the natural choice for high-volume cross-checking and validation (imagine test driven development).

Iceberg enables this by:

- Providing a structured, versioned memory with snapshot isolation and time travel, ensuring AI systems retrieve consistent, historical data for reproducibility and reinforcement learning.

- Decoupling compute from storage, allowing AI workloads to run on lightweight, cost-efficient engines like DuckDB and Trino instead of vendor-locked, GPU-heavy clusters.

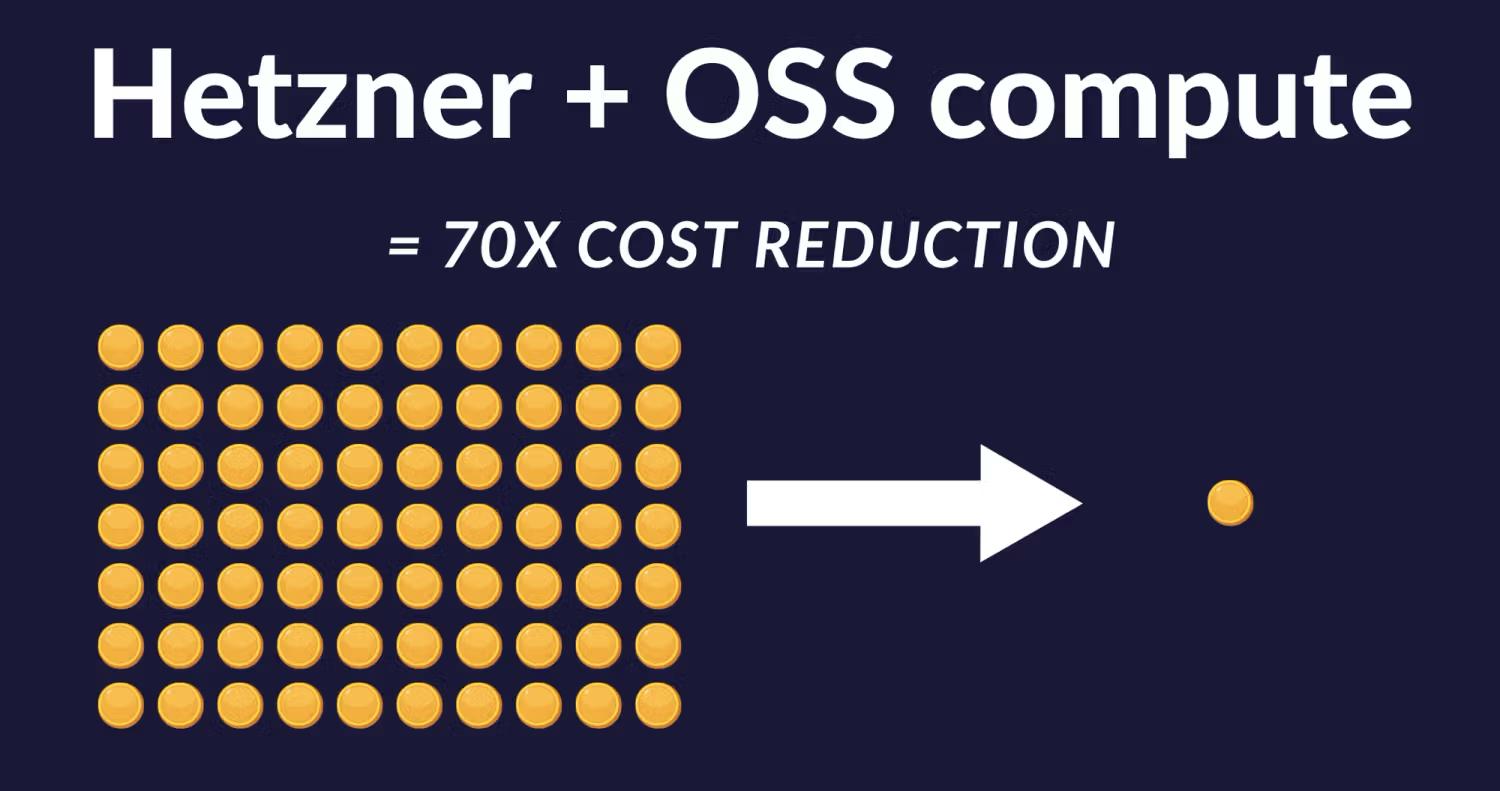

The Hidden Compute Markup Problem: You’re paying 70x more than you could

As AI usage scales 100x, inefficiencies add up fast, not just in data access but in compute costs.

Cloud vendors already charge a 9-14x markup over bare-metal providers like Hetzner. Layer a managed database service on top, like Snowflake or Databricks, and you’re paying another 4-5x on top of that meaning your AI workloads are running at 35-70x the actual compute cost. Now this is fine for "cloud services" that enable an expensive human to manage their work, but AI doesn't care and just wants compute.

Iceberg gives you a way out. By keeping compute and storage separate, you can choose cost-efficient engines instead of overpaying for managed services. AI can finally move beyond mere data consumption to structured cognitive workflows—where insights are scalable, repeatable, and cost-effective at any scale.

Adopt Iceberg Without the Pain: Composable, incremental

Legacy data lakes were never designed to evolve. That’s why migrations used to mean a painful, high-risk overhaul.

But Iceberg with Python doesn’t force a full rip-and-replace migration - it lets you extend what you already have, piece by piece. And with dlt, adopting Iceberg is fully modular, incremental, and pluggable, so you can start using it without disrupting your existing workflows.

You don’t have to change everything at once. Pick your entry point:

- If you’re already using dlt file destination, simply change the format to iceberg.

- If you’re already using dlt but not files, switch to staging + iceberg + catalog destinations

- Already in python? Plug dlt at the end of your existing Python pipelines, let your current processes run as usual, and simply add a final step that converts the output to Iceberg.

- Not in python? Migrate incrementally, pipeline by pipeline: rewrite your workflows in dlt one at a time, gradually replacing brittle pipelines with a simpler, Iceberg-native approach. This is easier than you might think.

We’re already seeing this in action with Athena + S3. dlt lets you write Iceberg tables directly to S3 and register them in the catalog automatically. From the user’s perspective, nothing changes. They keep querying as usual, but under the hood, they’re now running on Iceberg. No painful migration, no downtime, just a seamless shift to a more powerful data layer.

And this is just the beginning.

- dlt+ is expanding support for all major catalogs, making Iceberg even easier to adopt across diverse data ecosystems.

- OSS users can already enjoy Iceberg in a pure Python, headless setup, without needing a heavyweight metastore. And if you're on AWS Athena, we support registering tables there via the glue catalog.

This is why Iceberg isn’t just ‘the future’. It’s already happening because you don’t have to ‘migrate’ in the traditional sense. You just start using Iceberg, one table, one workload at a time, until one day, you realize your data lake has frozen over :).

The Bottom Line: Iceberg Is already here, start using it today!

The shift to Iceberg isn’t some distant future - it’s happening now. The best part? You don’t need a complex migration plan to join them.

With Iceberg and dlt, you can start small:

OSS:

- Write Iceberg tables to S3 and keep querying with Athena: no disruption, just better performance.

- Ingest data with python into headless (catalog-free) data lakes with dlt