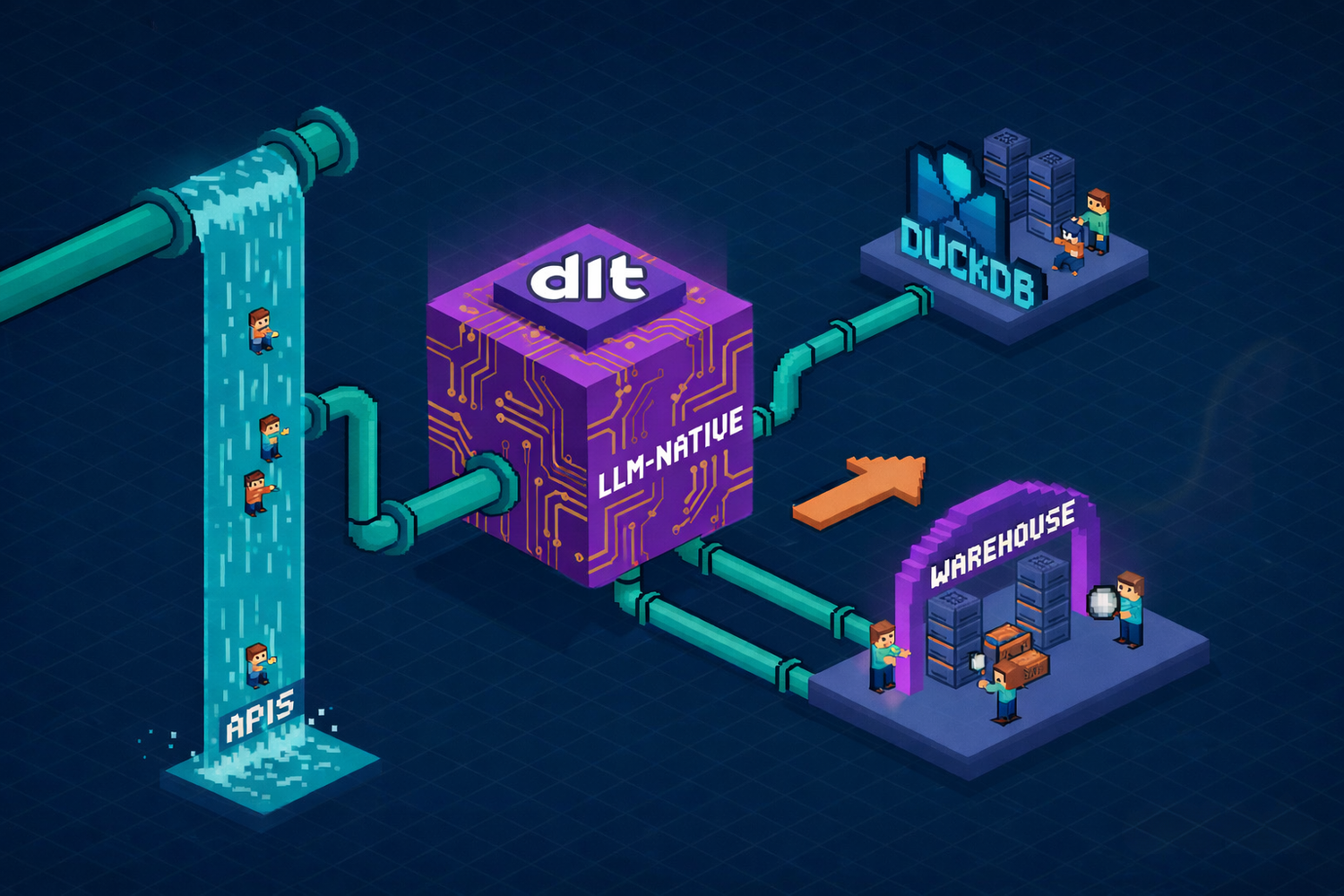

LLM-native EL workshop at DataTalks.Club

Remus Molnar,

communication

About This Article

This post promotes the DataTalks.Club workshop on AI-assisted data ingestion with dlt, led by Aashish Nair (Data Engineer at dltHub and creator of the dlt deployment course). The workshop is supported by the broader data community including Adrian Brudaru (dltHub’s CDO), whose essays on data quality and builder philosophy inform the approach discussed here.

Event Details:

📅 Feb 17, 16:30 CET (Online via YouTube)

🎓 Hands-on and code-driven

💻 Suitable for junior to experienced data engineers

🆓 Free to attend

🔗 Register at luma.com/hzis1yzp

The Shift in Data Engineering

For over a decade, pandas was the gold standard. We wrote manual scripts to clean, parse, and ingest our data. Data engineering meant writing parsers by hand, debugging edge cases, and carefully stitching together ingestion logic.

Recently, Wes McKinney, the creator of pandas, publicly reflected on a change in his own workflow. He said that seeing the capabilities of frontier AI models took him from longtime AI skeptic to full adopter.

He’s no longer focused on manually writing every parser. Instead, he’s building tools like Msgvault, which uses DuckDB and AI protocols to ingest and query lifetime data archives, and Roborev, an autonomous AI agent designed to review the massive amounts of code that other AI agents are writing for him.

That evolution matters. When the creator of pandas shifts from writing individual ingestion scripts to designing systems that orchestrate intelligent workflows, it signals something bigger than a tooling upgrade. It signals a workflow change.

The future of data engineering isn’t about hand-writing every ingestion function. It’s about designing reliable systems where automation handles the repetitive work, and engineers focus on correctness, validation, and architecture.

That’s exactly what this workshop is about. We’re not teaching you how to manually write parsers. We’re teaching you how to design modern ingestion systems where AI sits at the front of the pipeline, handles the mechanical work, and you stay in control of structure, validation, and production safety.

What You Will Learn

We'll Build Together

- Scaffold a pipeline for a real API (GitHub is easiest, but bring your own)

- Generate the connector config with your IDE agent (Cursor, Continue, or similar)

- Debug quickly using errors + tooling (paste errors, get fixes, iterate fast)

- Validate loads in the dashboard (schemas, metadata, traces)

- Build a tiny report (one metric, one dimension: commits per month, top contributors, etc.)

It’s a practical workflow for building pipelines much faster while keeping errors small, visible, and easy to fix.

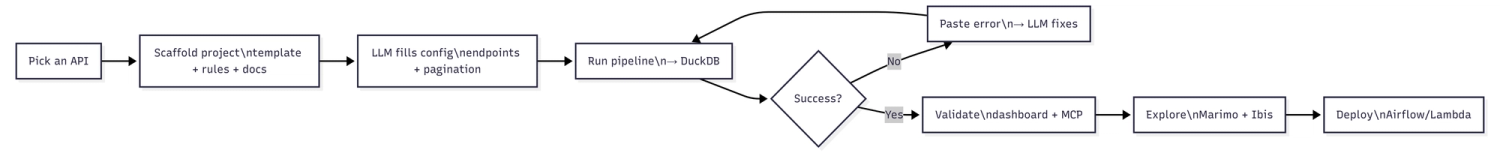

The pattern is simple: constraints plus inspection loops, with the LLM doing the mechanical work and you keeping control. In practice:

- Start from a scaffold (templates + documentation context)

- Generate config-driven pipeline code (not freestyle Python)

- Run → hit an error → paste the error back → fix quickly

- Validate using a dashboard + metadata

- Explore in a notebook with portable queries

In an LLM-driven world, data is plutonium: incredibly powerful, but toxic when mishandled. You need containment at every step.

Writing the ingestion code itself is rarely the real problem. The hard parts are the same as they’ve always been:

- Pagination edge cases

- Rate limits and retries

- Schema drift and nested JSON

- “It ran” vs. “it loaded the right thing”

- Data quality and governance

So the workflow isn’t “type prompt → ship to prod.” It’s a tight, observable loop where every run is inspectable, and every assumption is testable.
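Rate limits and retries are a good example of mechanical work that benefits from explicit, testable constraints. The sketch below is a generic illustration, not dlt's internal retry logic: `fetch_with_backoff`, `RateLimited`, and the delay values are hypothetical names and numbers chosen for this example.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error raised by a fetch function when the API says 'slow down'."""


def fetch_with_backoff(
    fetch: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fetch(), retrying on RateLimited with exponentially growing delays."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error instead of hiding it
            sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ...
    raise ValueError("max_attempts must be at least 1")
```

Passing `sleep` in as a parameter keeps the behavior inspectable: a test can record the delays instead of actually waiting.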

A Concrete Case Study: GitHub Commits in Under 10 Minutes

Let's keep it practical. Here's the business question:

"How many commits per month in our repo, and who's contributing the most?"

Source: GitHub API

Destination: DuckDB (local first, fast iteration)

Tools: dlt Workspace + Cursor + Marimo + Ibis

Step 1: Scaffold the Pipeline (Context Over Blank Slates)

Instead of opening a blank pipeline.py and writing everything from scratch, you initialize from a scaffold:

uvx dlt init github duckdb

What you get:

- A pipeline script with placeholders (not blank, not complete)

- IDE rules that teach your LLM about dlt (pagination, error patterns, config structure)

- Source-specific docs in LLM-readable YAML format

- An MCP server so your agent can query pipeline metadata instead of hallucinating

This changes what the model does:

- From: "write random code based on vibes"

- To: "fill in a structured template with guardrails"

The scaffolding includes documentation like github_docs.yaml, the API reference in a format the LLM can consume directly. You don't even need to read the GitHub docs yourself.

Step 2: Prompt Like an Engineer (Be Specific)

Here's a good prompt pattern from the demo:

Use the GitHub source to:

- fetch commits and contributors

- owner: dlt-hub

- repo: dlt

- destination: DuckDB (local)

Let the agent fill in the config-driven source and pipeline blocks. The IDE rules guide it to handle pagination correctly, configure authentication, and structure the output.
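To make "config-driven" concrete, here is a rough sketch of the kind of declarative block the agent might fill in for this prompt. The key names follow the layout documented for dlt's REST API source (`client`, `resources`, `endpoint`, a `header_link` paginator), but treat them as an assumption to check against your installed dlt version. The helper `build_github_config` is hypothetical and is not what the scaffold generates verbatim.

```python
from typing import Any, Dict, Optional


def build_github_config(owner: str, repo: str, token: Optional[str] = None) -> Dict[str, Any]:
    """Return a declarative source config for two GitHub endpoints."""
    client: Dict[str, Any] = {
        "base_url": f"https://api.github.com/repos/{owner}/{repo}/",
        # GitHub advertises the next page in the HTTP Link header.
        "paginator": {"type": "header_link"},
    }
    if token:
        # Optional: authenticated requests get a higher rate limit.
        client["auth"] = {"type": "bearer", "token": token}

    return {
        "client": client,
        "resources": [
            {"name": "commits", "endpoint": {"path": "commits", "params": {"per_page": 100}}},
            {"name": "contributors", "endpoint": {"path": "contributors", "params": {"per_page": 100}}},
        ],
    }


config = build_github_config("dlt-hub", "dlt")
print(config["client"]["base_url"])  # → https://api.github.com/repos/dlt-hub/dlt/
```

With dlt installed, a dict of this shape is what you would typically hand to `rest_api_source` from `dlt.sources.rest_api` before calling `pipeline.run(...)`. That step is left out here so the sketch runs without dependencies. Because the whole source is plain data, a reviewer can diff it line by line.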

Step 3: Run It, Expect Errors, Fix Fast (The Tight Loop)

Even with great context, models are non-deterministic. In the live demo, the first run errors on pagination configuration.

This is not a failure; it's the workflow.

How to fix it:

- Copy the error message

- Paste it into the IDE chat

- Agent reads the error + the pagination rules

- Agent patches the config

- Rerun (usually succeeds)

Total time: ~60 seconds.

This is where skeptics should lean in: you're not trusting the model, you're using it to shorten debug cycles. You stay in control and review every change. The LLM is a very fast junior engineer, not an oracle.

If your prompt doesn’t produce a working config on the first try, that’s expected. In the workspace video demo, the pagination error appeared on run #1 and was resolved within a single “error → paste → fix” loop. Perfection on the first try doesn’t matter nearly as much as how quickly you can close the loop.
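The demo doesn't spell out which pagination setting was wrong, but it helps to know what the paginator is doing. GitHub's REST API points to the next page through the HTTP `Link` header. The sketch below is a simplified parser for that header, for illustration only; `next_page_url` and the sample URLs are made up for this example.

```python
import re
from typing import Optional

# Matches one '<url>; rel="name"' entry inside a Link header.
_LINK_PART = re.compile(r'<([^>]+)>\s*;\s*rel="([^"]+)"')


def next_page_url(link_header: Optional[str]) -> Optional[str]:
    """Return the rel="next" URL from an HTTP Link header, or None on the last page."""
    if not link_header:
        return None
    for url, rel in _LINK_PART.findall(link_header):
        if rel == "next":
            return url
    return None


header = (
    '<https://api.github.com/repositories/1/commits?page=2>; rel="next", '
    '<https://api.github.com/repositories/1/commits?page=9>; rel="last"'
)
print(next_page_url(header))  # → https://api.github.com/repositories/1/commits?page=2
```

A paginator configured for the wrong mechanism (say, a JSON field instead of this header) tends to either stop after page one or fail outright, which is exactly the kind of error the paste-and-fix loop resolves quickly.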

Step 4: Validate in the Dashboard (Turn "It Ran" Into "I See What It Did")

After the pipeline runs, open the validation dashboard:

dlt pipeline github_pipeline show

You can now inspect:

- Schemas for each loaded table (commits, contributors)

- Child tables created from nested JSON

- Run traces showing extraction → normalization → load

- Load metadata (timestamps, row counts, success/failures)

- Quick SQL previews of actual data

Or use the MCP server for natural language queries:

User: "What tables are available?"

MCP: "Commits (4,500 rows), Contributors (131 rows), Commits__parents (nested child table)"

User: "When was data last loaded?"

MCP: "2025-02-13 14:23:18 UTC, completed successfully"

This is observability by default, no configuration needed.
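To turn "it ran" into a check you can automate, you can compare the reported row counts against minimal expectations. This is a plain-Python sketch under stated assumptions: `check_load` is a hypothetical helper, and the row counts are simply the ones from the example dialogue above, not values read from a real pipeline.

```python
from typing import Dict, List


def check_load(row_counts: Dict[str, int], min_rows: Dict[str, int]) -> List[str]:
    """Return human-readable problems; an empty list means the load looks sane."""
    problems = []
    for table, minimum in min_rows.items():
        if table not in row_counts:
            problems.append(f"missing table: {table}")
        elif row_counts[table] < minimum:
            problems.append(f"{table}: {row_counts[table]} rows, expected at least {minimum}")
    return problems


# Row counts as reported in the example dialogue (illustrative values).
row_counts = {"commits": 4500, "contributors": 131}
print(check_load(row_counts, {"commits": 1, "contributors": 1}))  # → []
print(check_load(row_counts, {"commits": 1, "issues": 1}))        # → ['missing table: issues']
```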

Step 5: Explore with a Notebook + Portable Query Layer

Now the fun part: analysis.

Open a marimo notebook (reactive Python notebooks that are just .py files, no JSON blobs):

    import dlt
    import marimo as mo
    import ibis
    import altair as alt

    # Connect to the pipeline
    pipeline = dlt.attach(
        pipeline_name="github_pipeline",
        destination="duckdb",
    )

    # Native Ibis connection to the loaded dataset
    # (assumes a dlt version that provides dataset().ibis())
    con = pipeline.dataset().ibis()

    # Query in SQL or Python, your choice
    commits_by_month = con.sql("""
        SELECT
            DATE_TRUNC('month', committed_date) AS month,
            COUNT(*) AS commit_count
        FROM commits
        GROUP BY 1
        ORDER BY 1
    """)

    # Or use Python syntax
    commits_by_month = (
        con.table("commits")
        # Name the truncated date "month" so the ordering and the chart can refer to it
        .group_by(month=ibis._.committed_date.truncate("M"))
        .agg(commit_count=lambda t: t.count())
        .order_by("month")
    )

    # Visualize interactively (materialize the Ibis expression as a DataFrame first)
    alt.Chart(commits_by_month.to_pandas()).mark_line().encode(
        x='month:T',
        y='commit_count:Q'
    )

The magic of Ibis: write the query once, run it anywhere. DuckDB locally, Snowflake in production, BigQuery for a different team. Same code.
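The business question has two halves: commits per month and top contributors. As a quick cross-check on a small sample, both metrics can be computed in plain Python. This is a sketch for sanity-checking, not a replacement for the query layer; the `author` field and the sample rows are made up, while `committed_date` follows the column name used in the query above.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def commits_per_month(commits: Iterable[Dict[str, str]]) -> Dict[str, int]:
    """Count commits per 'YYYY-MM' bucket from ISO 8601 timestamps."""
    counts = Counter(c["committed_date"][:7] for c in commits)
    return dict(sorted(counts.items()))


def top_contributors(commits: Iterable[Dict[str, str]], n: int = 3) -> List[Tuple[str, int]]:
    """Return the n authors with the most commits, busiest first."""
    return Counter(c["author"] for c in commits).most_common(n)


# Made-up sample rows, for illustration only.
sample = [
    {"committed_date": "2025-01-03T10:00:00Z", "author": "alice"},
    {"committed_date": "2025-01-19T08:30:00Z", "author": "bob"},
    {"committed_date": "2025-02-02T16:45:00Z", "author": "alice"},
]
print(commits_per_month(sample))      # → {'2025-01': 2, '2025-02': 1}
print(top_contributors(sample, n=1))  # → [('alice', 2)]
```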

The Workflow in One Diagram

You Don't Trust LLMs. Good. Here's the Safety Checklist.

Skepticism is healthy. The answer isn’t blind automation; it’s governance through code.

Every change goes through pull requests, every schema modification is diffed, every deployment is logged, and audit trails are automatic. The Data Quality Lifecycle is built into dlt, so you’re not sacrificing safety; you’re gaining control.
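What "every schema modification is diffed" can look like in code: a small comparison of two column-to-type mappings. This is a hand-rolled sketch and not dlt's own schema tooling; `diff_schema` and the example columns are hypothetical.

```python
from typing import Dict, List


def diff_schema(old: Dict[str, str], new: Dict[str, str]) -> List[str]:
    """Describe column-level changes between two {column: type} mappings."""
    changes = []
    for col in sorted(new.keys() - old.keys()):
        changes.append(f"+ {col} ({new[col]})")  # column added
    for col in sorted(old.keys() - new.keys()):
        changes.append(f"- {col} ({old[col]})")  # column removed
    for col in sorted(old.keys() & new.keys()):
        if old[col] != new[col]:
            changes.append(f"~ {col}: {old[col]} -> {new[col]}")  # type changed
    return changes


old = {"sha": "text", "committed_date": "text"}
new = {"sha": "text", "committed_date": "timestamp", "author": "text"}
print(diff_schema(old, new))
# → ['+ author (text)', '~ committed_date: text -> timestamp']
```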

Contrary to the traditional view, data quality isn’t a single test or contract. As outlined in the Practical Data Quality Recipes with dlt, it’s a structured system spanning three checkpoints (in-flight, staging, destination) and five pillars (structural, semantic, uniqueness, privacy, operational).
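As a rough illustration of two of those pillars at the in-flight checkpoint, the sketch below runs a structural check (required fields present) and a uniqueness check (no duplicate keys) over records before they are loaded. It is not taken from the recipes themselves; `in_flight_problems`, `REQUIRED_FIELDS`, and the choice of `sha` as the key are assumptions made for this example.

```python
from typing import Any, Dict, Iterable, List

REQUIRED_FIELDS = ("sha", "committed_date")  # hypothetical required columns


def in_flight_problems(records: Iterable[Dict[str, Any]]) -> List[str]:
    """Run a structural check (required fields) and a uniqueness check (sha)."""
    problems = []
    seen = set()
    for i, rec in enumerate(records):
        # Structural: every required field must be present and non-empty.
        for field in REQUIRED_FIELDS:
            if rec.get(field) in (None, ""):
                problems.append(f"record {i}: missing {field}")
        # Uniqueness: the same sha must not appear twice.
        sha = rec.get("sha")
        if sha:
            if sha in seen:
                problems.append(f"record {i}: duplicate sha {sha}")
            seen.add(sha)
    return problems


records = [
    {"sha": "a1", "committed_date": "2025-01-03"},
    {"sha": "a1", "committed_date": "2025-01-04"},
    {"sha": "b2", "committed_date": None},
]
print(in_flight_problems(records))
# → ['record 1: duplicate sha a1', 'record 2: missing committed_date']
```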

That’s why this workflow includes validation at every step:

- The LLM generates code

- IDE rules constrain it

- You review the diff

- Tests run automatically

- The MCP server validates metadata

- The dashboard makes outcomes visible

You’re not delegating responsibility; you’re tightening the feedback loop.

This is speed without chaos.

The Builder stack inverts the old dependency model:

- Code as the interface (versioned, reviewable, portable)

- Constraints as the safety mechanism (scaffolds, rules, validation)

- Observability as default (metadata, traces, dashboards)

- Portability as insurance (DuckDB locally, any warehouse later)

When done right, LLMs don’t replace engineering discipline; they amplify it.

Optional Hackathon Challenge (Bring Your Own Pain)

Do you have an API you've been avoiding? Bring it:

- Annoying pagination logic

- Nested JSON that's a nightmare

- Rate limits that make you rage

- Schema that drifts in production

Comment below or in the workshop chat with:

- Your "annoying API"

- One metric you want to measure after loading (e.g., "daily active users by country")

We'll try to get it ingesting by the end of the session.

Register + Prep (2 Minutes)

Register (Luma): luma.com/hzis1yzp

Prep Checklist:

- Install dlt Workspace tooling:

pip install dlt

- Pick an API (GitHub is easiest for beginners)

- Decide on 1 business question you'll answer after loading

- (Optional) Set up Cursor or another AI-enabled IDE

What to Expect:

- Fully practical and code-driven (not a slide deck)

- You'll leave with a working ingestion repo

- You'll learn a repeatable workflow you can apply Monday morning

Take the Next Step

1. Join the Workshop (Feb 17)

Register now and build your first AI-assisted pipeline in a safe, guided environment.

2. Try dlt Workspace Today

pip install dlt

dlt init <source> <destination>

Pick any source from the 10,100+ LLM-native scaffoldings available in the workspace. You can start small (GitHub, Sheets) or bring your own API.

3. Read the Deeper Essays

- The Builder - On reclaiming professional identity

- The Plutonium Protocol - On data quality in the LLM era

- Convergence - On how human judgment, structured context, and AI agents are merging into a single engineering workflow

- Autofilling the Boring Semantic Layer - On LLM-native workflows

4. Join the Community

The dlt Slack is where Builders share wins, debug together, and push boundaries.

5. Comment Your "Annoying API"

Planning to join the workshop? Comment below with:

- The API you've been avoiding (and why it's annoying)

- One metric you'd want to measure after loading it

We'll share pipeline prompts that work well and maybe tackle it live during the hackathon portion.

The "Modern Data Stack" fortress is crumbling. The shift to Builder-native tools is underway. And the engineers thriving in this era won't be the ones with the fanciest vendor stack; they'll be the ones who can ship fast, validate correctly, and iterate fearlessly.

See you at the workshop on Feb 17. 🚀

This article synthesizes insights from the dlt Workspace video tutorials and Adrian Brudaru's essays on data quality and builder culture. For the full technical details, watch the workspace workflow demo, 10-minute pipeline demo, and MCP server tutorial.