Highlights

- Democratizing data access: With dlt, an open-source Python library for data loading, all Harness teams can reduce bottlenecks, no longer need to wait for permission or data access, and easily generate new reports and ideas from data, helping spark innovation.

- Speed and security: A single senior data engineer at Harness could completely switch to using dlt for all core SaaS service pipelines. And, with dlt able to adapt to changing schemas, there’s future-proofing for when things change.

- Centralized and improved: Consolidating data into a single repository, ensuring reliability, accuracy and consistency through rigorous data cleaning and validation.

Data Stack

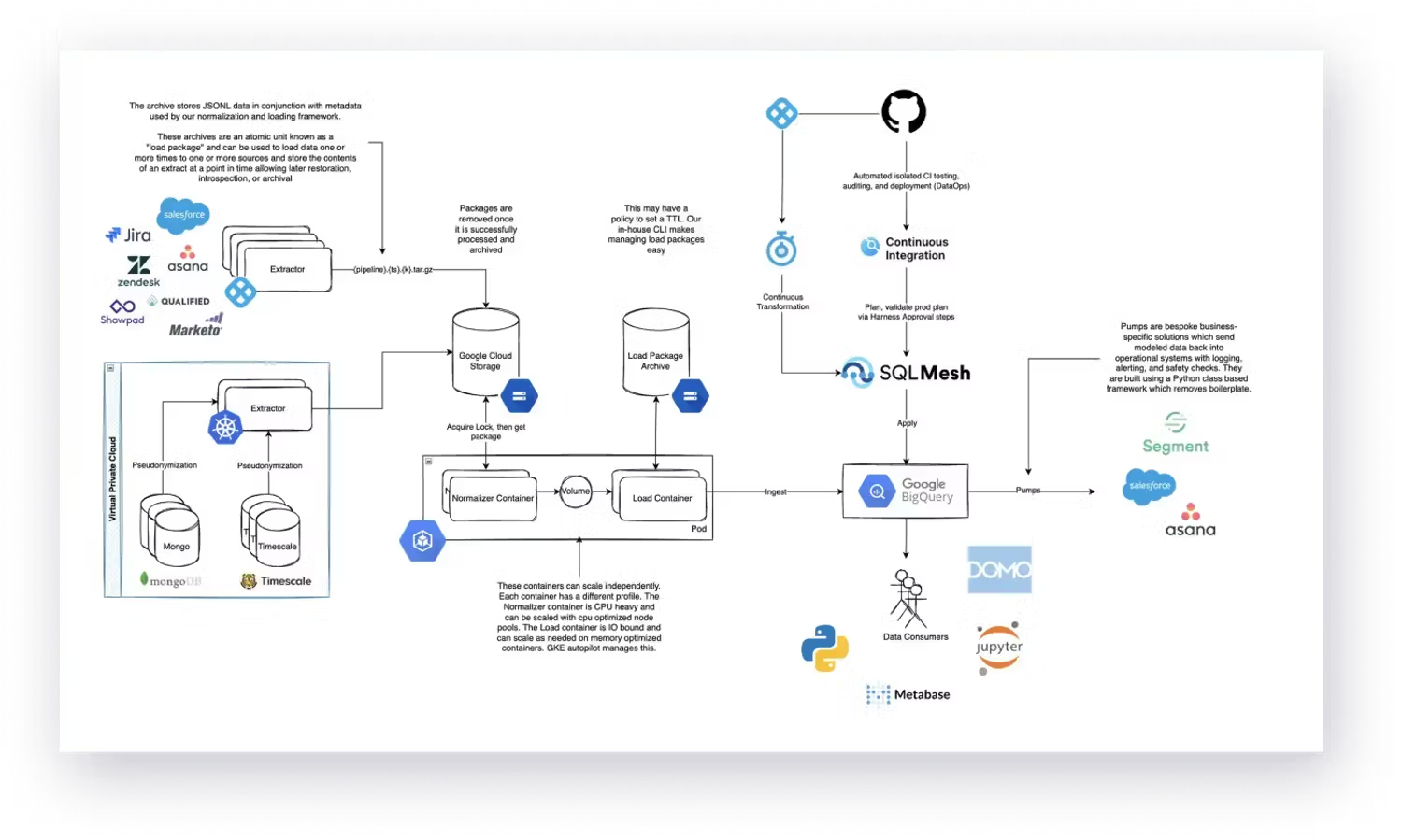

Data sources: 14 active sources including Salesforce and Zendesk; GitHub, Confluence, Jira, Asana; and production data from MongoDB and Timescale.

Destination: BigQuery

Orchestration: Harness.io

Transformation: SQLMesh

Challenge: Inefficient setup delays expansion

Harness offers various services, from DevOps and developer experience tools, to secure software delivery and cloud cost optimization.

The go-to-market team at Harness needs quality data to understand how its marketing funnels are working, the interest levels in each area of its products, and more.

Harness's existing data infrastructure was fragmented, with data scattered across various sources and systems. This siloed approach made accessing, understanding, and deriving value from the data difficult. Additionally, the company faced data quality, consistency, and timeliness challenges.

Challenges were mounting when managing and maintaining the data infrastructure. The existing setup, heavily reliant on Singer and Meltano, struggled to keep pace with the company's expanding data needs.

The unreliability of the Singer ecosystem, with many Taps for various data sources left unmaintained became a significant bottleneck. Data pipelines were prone to failures, leading to data inconsistencies and delays in insights, and the data engineering team hand-coded solutions. Additionally, data quality issues would arise, requiring additional data cleansing and transformation steps, often again needing hands-on fixes, which are both manual and error-prone.

To address these critical issues, Harness sought a more robust, efficient, and scalable solution.

From the very beginning, I'd built the entire data infrastructure myself and had full support to make any necessary changes as our business needs evolve. I discovered dlt and saw that it could solve all my problems with Singer schemas, as dlt doesn’t require upfront knowledge of data structures, unlike Singer Taps.

- remembers Alex Butler, Senior Data Engineer at Harness.

Alex’s mission to embrace dlt had full backing from his team for reworking how data is extracted, transformed and loaded. The goal was data democracy, aiming to greatly assist the go-to-market side of Harness, including sales and customer success, to be able to much more quickly and reliably understand how customers are acquired and create models for how Harness customers utilize their licenses from data.

The Solution: Starting afresh, proving dlt with SQLMesh

A staged approach was taken to move away from custom Singer Taps and targets and Meltano’s heavy dependencies via dlt, and later, SQLMesh was used to support the transition as well.

Alex from Harness first tested taking an Asana Singer Tap, piping that into dlt and checking the outcome. But running Singer Taps as dlt pipelines was cumbersome, and to simplify further, a full migration to dlt pipelines was started. First was Salesforce, translating the whole Salesforce Tap to just function generators with the dlt wrapper. This approach streamlined pipelines into single files with just a few lines. After fully migrating the Taps, he developed a custom object model to create a company-wide registry of sources and metadata.

This further enabled the creation of a custom CLI wrapper for dlt, known as Continuous Data Flow (a play on continuous integration and continuous delivery), which unified data pipeline management. Now, the company can run any source from a single interface without needing separate virtual environments.

dlt has enabled me to completely rewrite all of our core SaaS service pipelines in 2 weeks and have data pipelines in production with full confidence they will never break due to changing schemas. Furthermore, we completely removed reliance on disparate external, unreliable Singer components whilst maintaining a very light code footprint.

- Alexander Butler, Senior Data Engineer at Harness

Introducing dlt and SQLMesh together allowed Alex to build a modern data platform to replace its existing Singer and Meltano infrastructure. dlt's powerful data ingestion, transformation, and loading capabilities offered a significant improvement over Singer Taps, providing a more reliable and efficient way to extract data from diverse sources.

Explore the Harness solution in more detail in the blog post.

Results: Strengthening and streamlining infrastructure

Harness successfully transformed its data management landscape by removing old stumbling blocks and adopting a dlt and SQLMesh-based data platform.

Harness achieved the following:

- Centralized Data Repository: Consolidated data from various sources into a single, reliable repository, eliminating data silos and improving data accessibility.

- Improved Data Quality: Ensured data accuracy and consistency through rigorous data cleaning and validation processes, reducing the risk of errors in data-driven decisions.

- Accelerated Insights: Reduced the time it took to extract, transform, and load data, enabling faster and more informed decision-making.

- Enhanced Collaboration: Facilitated collaboration among data teams by providing a unified data platform, reducing the need for manual data integration and reconciliation.

- Reduced Operational Costs: Streamlined data management processes, reducing the time and resources required for data maintenance and troubleshooting.

- Increased Scalability: The new platform was designed to handle growing data volumes and complexity, ensuring that Harness could continue to scale its operations without compromising data quality or performance.

Future

In terms of next steps, Alex is planning an operational dashboard to monitor data pipeline health and column-level freshness within Harness automatically. By integrating dlt's load package metadata with SQLmesh’s column-level lineage, the exact origin of data in each column can be traced.

This setup will allow Harness to quickly identify issues and verify data freshness by linking specific dlt load packages to SQLmesh’s lineage data, creating a seamless end-to-end monitoring solution.

About Harness

Harness aims to enable every software engineering team in the world to deliver code reliably, efficiently and quickly to their users.