Data Platform Engineers: The Game-Changers of Data Teams

Adrian Brudaru,

Co-Founder & CDO

The data product lifecycle - when do we bring in the platform builder?

Starting from the bottom

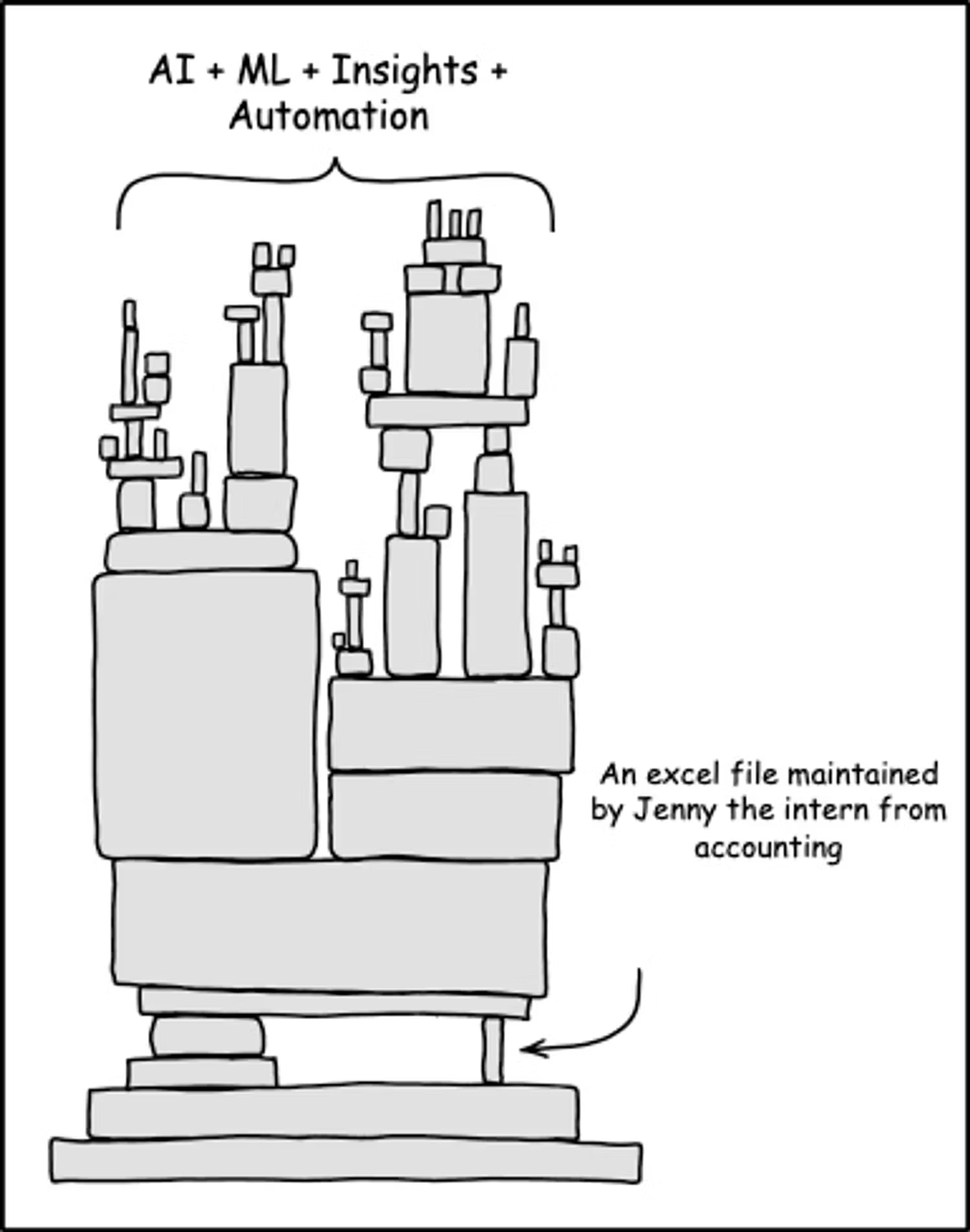

Companies that build their first data stack usually start with a small investment, so they are unlikely to begin with a senior team or with the vision and resources needed to build a platform. Because of this, the team will more likely start with something that works and go from there. The “something that works” is usually a set of ready-made connectors from a SaaS vendor or found online, or some D-I-WHY quick-and-dirty Python pipelines.

This is far from a consistent, sustainable way to manage data pipelines or data flows, and simply pushes the problem into someone else’s yard.

Starting from the bottom, again - this time with the data platform engineer.

The way out of the “first time data setup” is usually not through. A system that was built for the early stages of a company’s data needs was OK for those times, and it did a great job of capturing the requirements: what data needs to be delivered and how it can be used. But you can’t build reliable automations on swampy infrastructure.

As the demand and use cases of data increase, the need for a more sustainable, systematic approach becomes apparent.

This need gives rise to the development of integrated data platforms which aim to provide the means to deliver reliably every time, and not re-encounter the same issues on every implementation. These platforms integrate various functions: data ingestion, quality control, processing, and analysis, into a cohesive system that operates efficiently under a unified architecture.

The transition to such platforms represents a move from mere survival (managing day-to-day data challenges reactively) to thrival, where organizations can proactively harness their data for strategic advantage. This enables companies to be prepared for leveraging data at scale.

What is a Data Platform today, and how is it different from the ad hoc ETL setup?

A data platform is an integrated framework that consolidates various data management functionalities into a cohesive and streamlined system. This contrasts sharply with the ad hoc ETL (Extract, Transform, Load) setups that have traditionally been employed in many organizations.

Key differences include:

- Integration vs. Fragmentation: Where ad hoc ETL processes typically involve piecemeal tools and systems cobbled together as needed, a data platform offers a holistic approach. Everything is designed to work together from the ground up, reducing compatibility issues and streamlining workflows.

- Scalability: Ad hoc ETL setups can quickly become unwieldy as data volume and complexity grow. Data platforms, by design, are built to scale efficiently and effectively, accommodating growth without performance degradation.

- Decentralization & Technical access democratisation: Unlike the centralized nature of traditional ETL, which often leads to bottlenecks and gatekeeping, data platforms embrace a decentralized approach. This aligns with the principles of a data mesh, empowering domain-specific teams with the autonomy to manage their data, which enhances agility and speeds up decision-making.

- Data Democratization: Besides access, data platforms make governance a first class citizen, enabling compliant treatment of sensitive data and potentially managing access control from the very start. This way, data platforms facilitate easier access to data across an organization, promoting data democratization. This is a shift from ad hoc ETL setups where data access can be restrictive and siloed.

Why Build a Data Platform: Centralizing Tech, Decentralizing Business

In modern data-driven organizations, the complexity and pace of data operations require separating business dependencies from technological ones. This has given rise to the concept of Data Platform Engineering, which focuses on streamlining and automating the data lifecycle, much like traditional DevOps does for software development. The rationale for building a data platform often revolves around the need to centralize technological control while decentralizing business operations, enabling tailored, domain-specific data handling with uniform technical excellence.

Building a data platform with centralized technical control and decentralized business operations offers a balanced approach to managing the complexities of modern data ecosystems. This strategy not only enhances operational efficiency and data quality but also supports dynamic business needs, enabling organizations to leverage data more strategically and effectively in their decision-making processes.

Centralizing technological choices and governance

This doesn’t mean running everything in a monolithic monorepo or on a single database or orchestrator. It means choosing technology standards so that systems can interface well. For example, you could offer a set of options that play well together, or create an API for governance metadata that won’t let a dataset pass without it. This would empower teams to stay compliant without running into bottlenecks.
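To make the governance gate idea concrete, here is a minimal sketch in plain Python. It assumes a hypothetical set of required fields (`owner`, `pii`, `retention_days`) and a hypothetical in-memory `catalog`; none of these names come from dlt or any specific tool, and a real platform would define its own rules and storage.

```python
from dataclasses import dataclass, field

# Hypothetical required governance fields; a real platform would define its own.
REQUIRED_FIELDS = ("owner", "pii", "retention_days")


@dataclass
class Dataset:
    name: str
    metadata: dict = field(default_factory=dict)


class GovernanceError(ValueError):
    """Raised when a dataset is missing required governance metadata."""


def missing_metadata(dataset: Dataset) -> list:
    """Return the required governance fields the dataset does not declare."""
    return [key for key in REQUIRED_FIELDS if key not in dataset.metadata]


def register(dataset: Dataset, catalog: dict) -> None:
    """Add the dataset to the catalog only if its metadata is complete."""
    missing = missing_metadata(dataset)
    if missing:
        raise GovernanceError(
            f"{dataset.name} is missing governance metadata: {', '.join(missing)}"
        )
    catalog[dataset.name] = dataset


catalog: dict = {}

# A fully described dataset passes the gate.
register(
    Dataset("orders", {"owner": "sales", "pii": False, "retention_days": 365}),
    catalog,
)

# An incompletely described dataset is rejected with an actionable message.
try:
    register(Dataset("leads", {"owner": "marketing"}), catalog)
except GovernanceError as err:
    print(err)
```

The point of the design is that the domain team gets immediate, specific feedback on what is missing and can fix it themselves, so the central team sets the rule once instead of reviewing every dataset by hand.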

Centralizing the technological aspects of data management, such as infrastructure, tooling, and data governance, on a unified data platform offers several advantages:

- Consistently reliable: A centralized tech stack ensures that all data operations are consistent and adhere to the same standards, protocols, and security measures.

- Efficiency: Centralized technology simplifies the management of data resources, reducing overhead and the potential for errors. It allows for better resource allocation, performance optimization, and maintenance.

- Scalability: Centralized systems are easier to scale, as they provide a clear framework for expanding data operations without the need for significant restructuring or the duplication of effort.

In the case of dlt, a lot of metadata is inferred from the data, and schemas are the starting point. This provides a plug-and-play option for metadata collection and reflection.
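As an illustration of what inferring metadata from the data can look like, the sketch below derives column types and nullability from a few sample rows using only the Python standard library. This is not dlt's implementation or API; the function names and the type names (`bigint`, `double`, `text`, and so on) are assumptions chosen for the example.

```python
from datetime import datetime


def infer_type(value) -> str:
    """Map a Python value to a simple column type name (illustrative names)."""
    # bool must be checked before int because bool is a subclass of int
    if isinstance(value, bool):
        return "bool"
    if isinstance(value, int):
        return "bigint"
    if isinstance(value, float):
        return "double"
    if isinstance(value, datetime):
        return "timestamp"
    return "text"


def infer_schema(rows: list) -> dict:
    """Build {column: {"data_type": ..., "nullable": ...}} from sample rows."""
    schema: dict = {}
    for row in rows:
        for column, value in row.items():
            col = schema.setdefault(column, {"data_type": None, "nullable": False})
            if value is None:
                col["nullable"] = True
            elif col["data_type"] is None:
                # The first non-null value decides the column type in this sketch
                col["data_type"] = infer_type(value)
    # A column that is absent from some rows is also treated as nullable
    for column, col in schema.items():
        if any(column not in row for row in rows):
            col["nullable"] = True
    return schema


rows = [
    {"id": 1, "email": "a@example.com", "signup": datetime(2024, 1, 5)},
    {"id": 2, "email": None, "signup": datetime(2024, 2, 9), "score": 0.7},
]

schema = infer_schema(rows)
for column, col in schema.items():
    print(column, col)
```

Once a schema like this exists as a plain data structure, it can be stored, compared between runs, or annotated with governance fields, which is what makes it a useful starting point for platform-level metadata.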

Decentralizing business operations

While the technology stack is centralized, the business logic and data utilization are decentralized across various domains or business units within the organization.

This approach aligns with the principles of a data mesh, where data ownership is domain-oriented, empowering specific teams to manage and utilize their data independently.

Some advantages:

- Speed through autonomy: Decentralized business operations allow domain teams to respond quickly to changes and opportunities. Teams can develop and deploy data-driven solutions tailored to their specific needs without being bottlenecked by centralized control.

- Innovation and constant improvements: By enabling domain experts to manage their data, the solutions used can be adapted over time to the current realities. As data is used to change and improve things, we can expect this evolution to be an intrinsic necessity.

- Data as a Product: Treating data as a product encourages teams to focus on the quality, usability, and value of the data they generate and manage, which improves overall data quality and utility across the organization.

So who’s this builder - the Data Platform Engineer?

The shift from classic, ad hoc data engineering to data platform building raises the technical requirements for the role. So who’s this new persona and how are they different from a classic data engineer?

Data Engineers traditionally focus on the collection, storage, and preliminary processing of data. Data Platform Engineers additionally need to understand the systems they impact:

- architecture, at ingestion and after

- scalability for various types of pipelines with different sizes, workloads and execution patterns. Resilience, flexibility, scalability become primary concerns.

- infrastructure as code, understanding of how to enable other developers to access the right amount of infrastructure, keeping costs optimal. Good understanding of cloud services and infra.

- software development best practices, automated testing, CI/CD

- understanding of data lifecycle, implementation of governance around access, security, usage, compliance documentation, deprecation etc.

In other words, the data platform engineer’s main goal is to enable data access for other people. As such, it logically follows that they need to consider the other person: what is easy, feasible or hard for them when adding new data sources and keeping them governed and accessible.

The data platform engineer is not a replacement for data engineers.

Despite the advanced scope of the Data Platform Engineer, the need for Data Engineers remains. The two roles complement each other, with Data Engineers handling more of the operational aspects of data management and Data Platform Engineers focusing on the overarching system architecture and strategic integration.

This specialization within the data team allows for tackling more complex, large-scale projects efficiently and effectively, providing a robust framework for managing an organization's data from raw inputs to actionable insights.

By having this cooperation, the data engineer is empowered to leverage existing methods to achieve efficiency and quality in their work.

This shift fundamentally unlocks the ability to scale beyond the uphill struggle of maintaining ad hoc pipelines.

The drivers of progress

Data Platform Engineers are not just tech experts but senior strategists who deeply understand both the technology and the dynamics of their teams. The biggest challenge in a data team is usually not the technology, but the place where the rubber meets the road - understanding the psychology of the user.

Data platform engineers usually have this experience, which enables them to be pragmatic. They navigate the unique challenges of team psychology, ensuring everyone is aligned and motivated to make data usage ready for production. With their hands firmly on the tech steering wheel and a keen eye on team well-being, they’re the unsung heroes shaping the future of their companies, one data solution at a time.

Call to action

The dlt library is a central building block for data platforms. Data platform engineering is a complex role that requires an understanding of governance, business and tech. If you need a hand with your data platform, the dltHub team currently offers help with:

- Modernising your data platform

- Building your data platform

- Governance for your data platform

Read more about dlt for data platform teams, or book a call with our support engineer Violetta.