harness builds an end to end data platform with dlt + sqlmesh

Wheeler Boyd-Boffa, alongside the VP of Revenue operations and strategy, Hoppy Maffione, empowered the technical lead on the go-to-market side of the company, senior data engineer Alex Butler, to make technical decisions. Alex chose dlt + sqlmesh to create an end to end next generation data platform.

exec summary

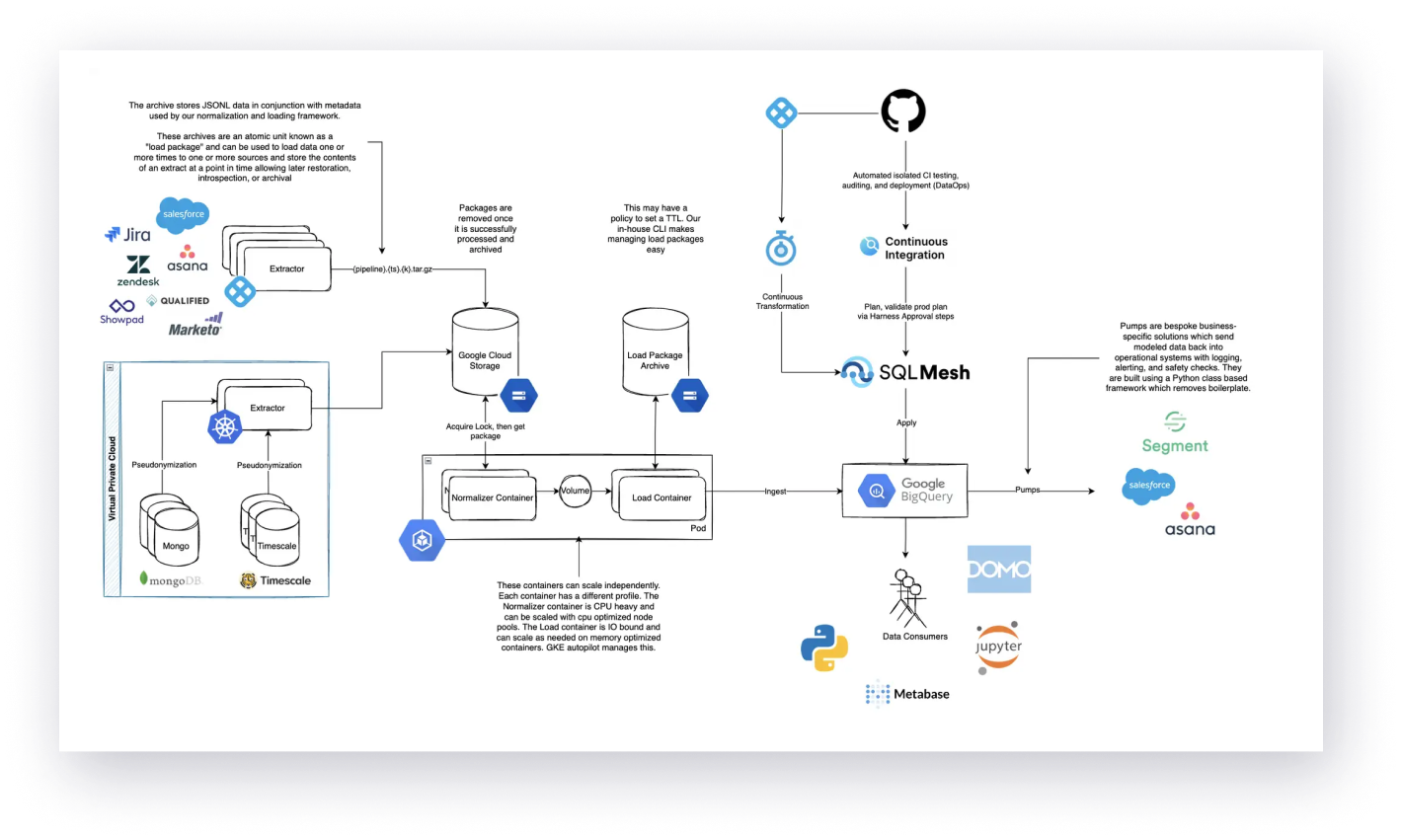

Harness relies on dlt to handle the extraction, normalisation and loading of data sources. Creating new data sources and adding them as data pipelines is handled with dlt. As of today there are 14 active sources and growing: 6 marketing & sales data sources (Salesforce, Zendesk, Marketo, Qualified, Showpad, people.ai); 4 tool sources (GitHub, Confluence, Jira, Asana); 2 production data sources (MongoDB, Timescale).

dlt also handles schema automation and evolution. dlt is integrated in the Harness UI. Internal stakeholders at Harness can control what resources of each source are enabled or disabled through the Harness UI. Thus these stakeholders, including product, business, and operation teams, can independently satisfy a majority of their data needs through self-service. The teams built multi-touch attribution for how Harness acquires customers, and models for how Harness customers utilize licenses. If the teams want to build anything else to push the company forward, they don't need to wait for permission or data access to do it. All kinds of new reporting is being done that wasn't possible before.

Once the data is in their data warehouse - Harness uses BigQuery - the company uses SQLMesh. SQLMesh provides data contracts between the data that is in the data warehouse and how it is being transformed downstream to data users. Because SQLMesh provides column level lineage the company can take a data model and then figure out for each column what the upstream columns are that feed into it.

Harness even went a step further and integrated dlt and sqlmesh to achieve interoperability between them. dlt is able to automatically update sqlmesh external models. At the same time Harness can also use the command sqlmesh plan that lets the company manage the evolving dlt schema. One of the future integration projects is to create an operational dashboard which assesses the health (data freshness, pipeline health) of individual data columns per model in a fully automated way.

the seeds of success

harness management empowers their senior data engineer Alex Butler to take technical decisions

Wheeler Boyd-Boffa, alongside the VP of Revenue operations and strategy, Hoppy Maffione, empowered the technical lead on the go-to-market side of the company, senior data engineer Alex Butler, to make technical decisions.

So I've built everything myself. That includes from the very beginning setting up infrastructure. Running these other previous solutions, setting up Singer, Meltano, these data pipelines, dbt. Migrating everything has been done myself, too. I don't really have to go to someone and ask as long as we are achieving business outcomes and driving strategy.

before dlt and sqlmesh

alex butler’s experience with singer, meltano and dbt

Before exploring dlt and SQLmesh Harness was trying to work with Singer, Meltano and dbt.

In Alex Butler’s eyes, the biggest problem with Singer is the fractured ecosystem of Taps and Targets. In his view, a lot more than half of these don’t work and most of them are not maintained. Developers have no control over them and their schema, what goes into Singer or how it comes out of Singer. Customisation of Taps and Targets for production use cases is difficult. As a result, at Harness, Alex Butler ended up either creating his own Taps and Targets from scratch or forking existing ones. He was writing most of the data pipeline code himself. To deal with Singer’s underlying common interchange format he was just writing the pipeline code in such a way that it would create a Singer format or read a Singer format. It was a lot of work, having a lot of separate repositories to manage the Singer interchange format. Another issue with Singer was dealing with the schema. When dealing with Singer Taps a developers still has to define the schemas. The schemas are JSON. Schemas are translated by the Targets to the appropriate Target schema. Alex specifically had issues with BigQuery, because with BigQuery you need strongly typed schemas and JSON schemas are not strongly typed.

Additionally Meltano would build virtual environments for all of the plugins that Alex needed. Meltano itself has heavy dependencies with a lot of pinned requirements. It wasn't easy to use Meltano alongside other software.

Meltano encourages you to put everything in another virtual environment. The result is that an utility goes here and this utility goes there. What ends up happening is that it's a lot harder to just use these tools without invoking them through Meltano. Everything suddenly becomes like a wrapper. You're doing this Meltano invoke, this little Meltano invoke this and it starts to feel like there's a lot of indirection. Certain integrations don't work. At the time Harness was using the extension dbt power user and it didn't work with Meltano.

With dbt the main issue was the complexity to get it to work the way that you wanted to work. Harness tried to be a model dbt citizen. The company had CI pipelines, had things that ran on pull requests, it would store the manifest.json artifact and things of that nature. Harness tried to avoid recomputation as much as possible. But for each of those things that were listed out it was more complexity for Alex Butler to set it up and maintain dbt. A particularly painful recurring problem included figuring out how to store an artifact in cloud storage, pulling it back and making sure nothing breaks. Another frequent problem was around rerunning giant incremental models.

hearing about an alternative

Alex found dlt through a buddy in his network. At that time this person was into DuckDB and was creating his own version of a modern data stack in a box. His buddy had heard about dlt from a blog post and had shared the dlt repository.

I chose dlt because I immediately saw the value in its core proposition when I looked into the dlt GitHub repo: in dlt the premise is that just yield Python dictionaries or Python objects. And then dlt handles everything else from the extraction, normalization, loading etc. This addresses the most manual, error prone process of a data engineer's job is two-fold: It's managing evolving schemas and loading data to a destination consistently from some in-memory data structures (lists, dicts).

moving away from custom singer taps and targets and meltano’s heavy dependencies

When Alex found dlt he immediately saw that dlt can solve his problems with Singer schemas. He would not even need the Singer schema message, he’ll just give dlt the data.

At that time Alex had already built his own Singer runner Alto. His initial test was to take an Asana Singer Tap, pipe that into dlt and check what happens. He discovered that he is able to run Singer Taps as dlt pipelines.

testing and implementing dlt across the company

So as a first implementation step he ran Singer Taps as dlt pipelines.

However, he also found that he is still dealing with two Singer constraints from before. Even though dlt can consume the adjacent messages and work, if he wanted to make a change to the top, he now had to go into the separate repository, or get a submodule that he made separate commits in. Then he had to download the updated commit to his current mono repo repository. So it was just a lot of indirection to create an update. Furthermore, he was constraining himself by forcing himself into the Singer framework.

So the next step was replacing one Singer Tap at a time. Alex did Salesforce first. He translated the whole Salesforce Tap to just function generators with the dlt wrapper. He was able to reuse the Singer Taps that he had built before. But it was just so much simpler, everything became a single file for each pipeline. Most of the pipelines became only a few lines. He completely migrated one Singer Tap after another.

Once there he had enough of those, he wondered what he could do next. What can he do to put this in the hands of the team? How can he wrap this? So as the third step he wrapped dlt in a custom object model to create a company wide registry of sources and other metadata.

The effect of this custom object model was that he was able to create a custom CLI wrapper for dlt using dlt as a library. It really simplified sort of how Harness thinks about data pipelines, how Harness runs data pipelines and how the company tests them.

No longer the company needs to have all of these separate virtual environments with different Taps and Targets and try to run them together. Instead he is able to take a single CLI tool which they call CDF (this stands for Continuous Data Flow - a play on continuous integration and continuous delivery). It registers all of Harness sources into a single interface. So all he needs to do is CDF ingest and he can run any source that was implemented in one UI.

An illustration: let’s assume Alex needs to make sure that a specific source is working or he needs to implement a new endpoint. All he needs to do is to modify the code and just run CDF ingest my source to DuckDB. And if needs to go to a different source, he can go do a different source. Now he has a single pane with the entire data pipeline process and that made iterating really fast.

The fact that these things are Python generators means he can just pull a hundred records. So in CI he has a test flag and he can use that with any of his resources. He moves a lot faster than with Singer.

dlt has enabled me to completely rewrite all of our core SaaS service pipelines in 2 weeks and have data pipelines in production with full confidence they will never break due to changing schemas. Furthermore, we completely removed reliance on disparate external, unreliable Singer components whilst maintaining a very light code footprint.

integrating dlt into Harness to achieve no-code data democracy

Harness as a software delivery platform, a CI/CD platform provides a very good foundation for DataOps itself. Because of that Harness looked for ways how to integrate dlt into Harness itself.

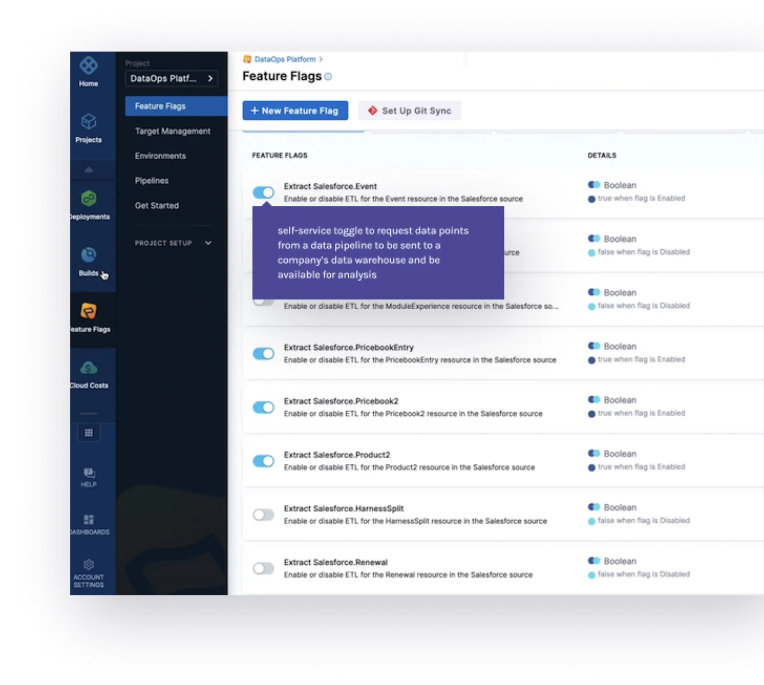

Harness has a feature flag interface that is embedded into the Harness code when someone uses the Harness SDK. This interface checks the feature flags. Harness used that and incorporated it into dlt's resources. So whenever a Harness developer creates a resource that is registered through Harness’ wrapper and it automatically creates feature flags via an API in this UI.

Technical stakeholders can control what resources of a sources such as Salesforce, Jira or MongoDB are enabled or disabled through the UI. Technical stakeholders who need data in the data warehouse, or don't want certain data in the data warehouse go to this interface: They can toggle Harness data pipeline on and off. These stakeholders don’t need to touch code to enable or disable sources.

The whole setup runs on Harness CI. The stakeholders log in via Harness, thus relying on Harness’ existing governance. Jobs run every 30 minutes or every hour, depending on the source. Stakeholders can also send data back to Salesforce, Asana, and Segment.

Data democracy for our product, business, and operation teams means that they can independently satisfy a majority of their data needs through no-code self-service. The teams built multi-touch attribution for how Harness acquires customers, and models for how Harness customers utilize licenses. If the teams want to build anything else to push the company forward, they don't need to wait for permission or data access to do it. All kinds of new reporting is being done that wasn't possible before.` Data hygiene is another benefit of our data platform. We can now easily find data quality issues in the source system, and solving them is automated. We have business rules in place that allow us to escalate data issues to the appropriate team to fix data in the source system quickly.

moving away from the complexity of dbt

Alex was already using dlt by the time he had found sqlmesh. His migration from dbt to sqlmesh was a concurrent one.

He had one directory where he had a dbt project and another directory where he scaffolded out a sqlmesh project. He assessed the complexity to translate models from dbt to sqlmesh and it was pretty low. And then he would start to migrate it to sqlmesh. He eventually reworked all of the Harness dbt models that were on top of data sources. It was a lot of work, but it was worth it.

the start of a new collaboration of a new data team

For him integrating sqlmesh was just integrating another CLI command. Harness has CDF ingest and CDF transform and everything's in Python.

Once Harness was all in on sqlmesh Alex started think about integrations between dlt and sqlmesh.

As the initial integration point he looked at concept of **sqlmesh's concept of external models.** sqlmesh additionally gives Harness end-to-end column level lineage. So Harness declares all the columns that are in a source table, similar to how dlt declares all the columns that are associated with the resource and schema.

Harness can declare a table and then a column and a column type. And then it can let sqlmesh know the external tables available. It translates the column type to the appropriate database. So if there’s a resource with these many columns, then it translates a table with these columns to types.

interoperability between dlt and sqlmesh

And now Alex had interoperability. dlt is able to automatically update those external models. At the same time Alex can also use the command sqlmesh plan that lets him manage the evolving dlt schema.

An example: let’s assume there’s new columns in a data contract and Harness needs to make sure that nothing breaks downstream. He can use sqlmesh plan and it's going to factor dlt in. He needs to run these models into an internal schema. He needs to audit them and only then he will promote the environment if all of those things work in succession. If users of a specific report based on a specific dlt Salesforce resource are impacted, then he will know. Sqlmesh handles data contracts automatically and can do safe and intelligent consolidation and backfilling.

Another integration point turned out to be the company wide registry of sources and other metadata provided by dlt. What if this metadata would be passed to sqlmesh?

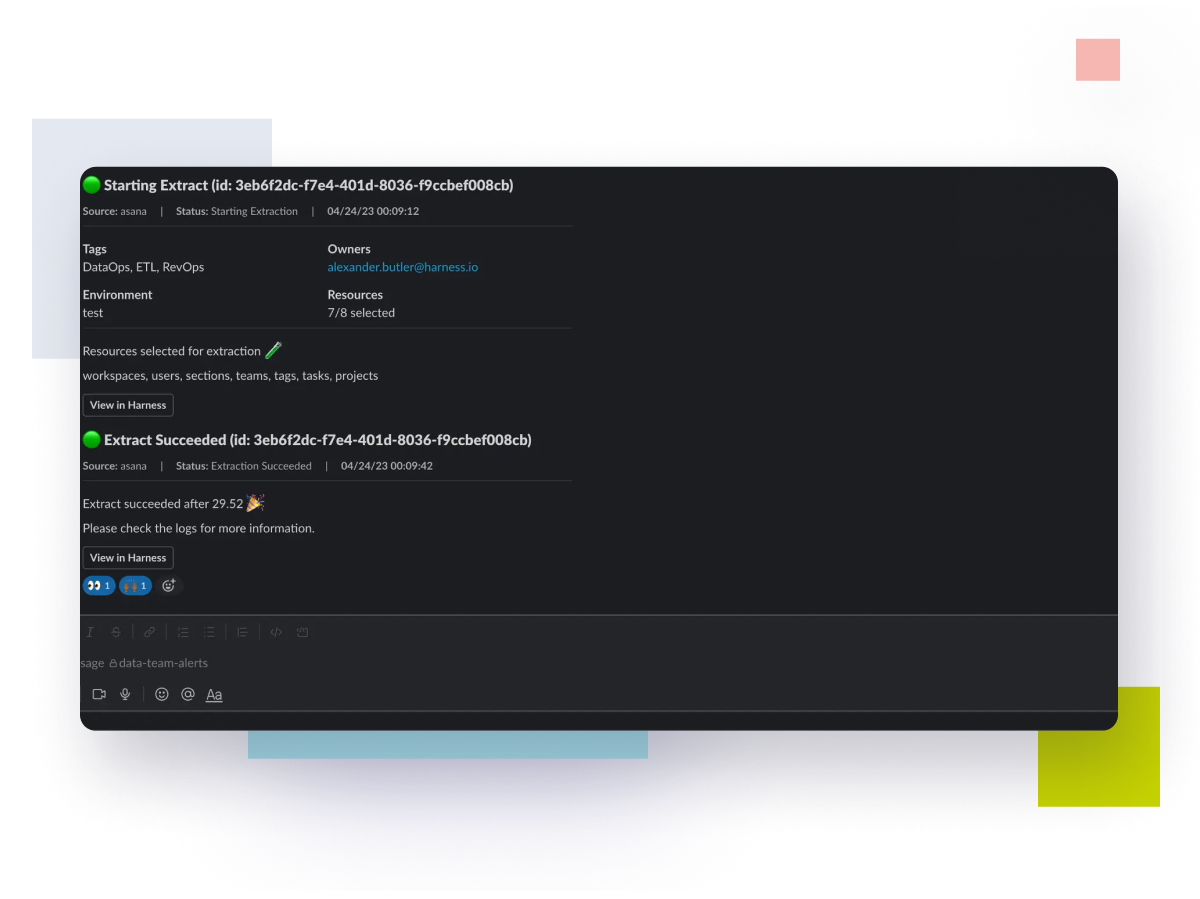

The company has a lot of Slack messages that are generated by their data platform where metadata from both dlt sqlmesh is displayed.

When an extraction for specific resources succeeds the DataOps team gets a Slack message. The resources selected, those are dlt resources.

Similar for sqlmesh. When a sqlmesh plan succeeds, then the DataOps team gets a notification in real time as well.

With dlt + sqlmesh it is also very easy for Harness to switch test, development and production destinations. It is easy because sqlmesh transpiles your SQL to run on any dialect.

Harness can write data to DuckDB with dlt and then use sqlmesh to run transformations on it. It is the same when they write data to BigQuery - without change to the models.

looking ahead

One of the upcoming projects for Alex is to create an operational dashboard which assesses the health (data freshness, pipeline health) of individual columns per model in a fully automated way.

Sqlmesh uses sqlglot under the hood, hence developers get column level lineage right when they import sqlmesh and they have the Python object in memory. So Alex concluded that he can take a model and then figure out for each column what the upstream columns are that feed into it.

Alex thinks that from a data engineering perspective a lot of products that are being sold today are trying to sell him on the idea of one is column level lineage and two is observability.

dlt has the concept of a load package, which loads data atomically, and dlt also has metadata around that load package. So you could say this load package loaded and affected these resources at this time with this much data.

Alex thinks it would be very interesting to connect dlt metadata to sqlmesh metadata in such a way that he has an operational dashboard that passes the following scenario:

- a stakeholder talks to Alex and they're like: “Hey something's going on with this dashboard that we use every day.”

- Alex then goes to this operational dashboard and is able to answer: “Okay these load packages loaded and…this table is fresh because these columns came from these exact packages that succeeded.”

Alex thinks there's a lot of interesting dlt + sqlmesh metadata for an end to end data platform. He is excited to build it.